mirror of https://github.com/songquanpeng/one-api.git

synced 2025-12-27 10:15:58 +08:00

Merge branch 'main' into pr/Laisky/25

3

.env.example

Normal file

@@ -0,0 +1,3 @@
PORT=3000
DEBUG=false
HTTPS_PROXY=http://localhost:7890
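
The three variables in `.env.example` are plain process environment settings. The sketch below is a minimal illustration of how a Go server could consume them. It assumes that a missing or malformed value falls back to the example value. The `Config` type and the `getEnv`/`loadConfig` helpers are hypothetical and are not One API's actual loader. Go's `http.DefaultTransport` already uses `http.ProxyFromEnvironment`, which reads `HTTPS_PROXY` for HTTPS requests, so that variable may take effect without explicit parsing.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
)

// Config mirrors the three variables in .env.example (hypothetical type).
type Config struct {
	Port       int
	Debug      bool
	HTTPSProxy string
}

// getEnv returns the value of key, or fallback when it is unset or empty.
// lookup has the same shape as os.LookupEnv so values can be injected.
func getEnv(lookup func(string) (string, bool), key, fallback string) string {
	if v, ok := lookup(key); ok && v != "" {
		return v
	}
	return fallback
}

// loadConfig parses the variables, falling back to the .env.example values
// when one is missing or malformed (assumed behavior, for illustration).
func loadConfig(lookup func(string) (string, bool)) Config {
	port, err := strconv.Atoi(getEnv(lookup, "PORT", "3000"))
	if err != nil {
		port = 3000
	}
	debug, err := strconv.ParseBool(getEnv(lookup, "DEBUG", "false"))
	if err != nil {
		debug = false
	}
	return Config{
		Port:       port,
		Debug:      debug,
		HTTPSProxy: getEnv(lookup, "HTTPS_PROXY", ""),
	}
}

func main() {
	cfg := loadConfig(os.LookupEnv)
	fmt.Printf("port=%d debug=%v https_proxy=%q\n", cfg.Port, cfg.Debug, cfg.HTTPSProxy)
}
```

Passing the lookup function in, rather than calling `os.Getenv` directly, keeps the parsing testable without mutating the real environment.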

35

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@@ -1,26 +1,27 @@
---
name: Report an Issue
about: Describe the problem you encountered in concise, detailed language
title: ''
name: Bug Report
about: Use concise and detailed language to describe the issue you encountered
title: ""
labels: bug
assignees: ''

assignees: ""
---

**Routine Check**
## Routine Check

[//]: # (Delete the existing space inside the brackets and fill in an x)
+ [ ] I have confirmed there is currently no similar issue
+ [ ] I have confirmed I have upgraded to the latest version
+ [ ] I have read the project README in full, especially the FAQ section
+ [ ] I understand and am willing to follow up on this issue, help with testing, and provide feedback
+ [ ] I understand and accept the above, and understand that the maintainers have limited time; **issues that do not follow the rules may be ignored or closed outright**
[//]: # "Remove space in brackets and fill with x"

**Issue Description**
- [ ] I have confirmed there are no similar issues
- [ ] I have confirmed I am using the latest version
- [ ] I have thoroughly read the project README, especially the FAQ section
- [ ] I understand and am willing to follow up on this issue, assist with testing and provide feedback
- [ ] I understand and agree to the above, and understand that maintainers have limited time - **issues not following guidelines may be ignored or closed**

**Steps to Reproduce**
## Issue Description

**Expected Result**
## Steps to Reproduce

**Related Screenshots**
If there are none, please delete this section.
## Expected Behavior

## Screenshots

Delete this section if not applicable.

8

.github/ISSUE_TEMPLATE/config.yml

vendored

@@ -1,8 +0,0 @@
blank_issues_enabled: false
contact_links:
  - name: Project group chat
    url: https://openai.justsong.cn/
    about: QQ group 828520184; join requests are approved automatically, mention One API in the request
  - name: Support the author
    url: https://iamazing.cn/page/reward
    about: Buy the author a coffee to encourage continued development

28

.github/ISSUE_TEMPLATE/feature_request.md

vendored

@@ -1,21 +1,21 @@
---
name: Feature request
about: Describe the new feature you would like added in concise, detailed language
title: ''
name: Feature Request
about: Use concise and detailed language to describe the new feature you'd like to see
title: ""
labels: enhancement
assignees: ''

assignees: ""
---

**Routine Check**
## Routine Check

[//]: # (Delete the existing space inside the brackets and fill in an x)
+ [ ] I have confirmed there is currently no similar issue
+ [ ] I have confirmed I have upgraded to the latest version
+ [ ] I have read the project README in full and confirmed that the current version cannot meet my needs
+ [ ] I understand and am willing to follow up on this issue, help with testing, and provide feedback
+ [ ] I understand and accept the above, and understand that the maintainers have limited time; **issues that do not follow the rules may be ignored or closed outright**
[//]: # "Remove space in brackets and fill with x"

**Feature Description**
- [ ] I have confirmed there are no similar issues
- [ ] I have confirmed I am using the latest version
- [ ] I have thoroughly read the project README and confirmed existing features cannot meet my needs
- [ ] I understand and am willing to follow up on this issue, assist with testing and provide feedback
- [ ] I understand and agree to the above, and understand that maintainers have limited time - **issues not following guidelines may be ignored or closed**

**Use Cases**
## Feature Description

## Use Cases

142

.github/workflows/ci.yml

vendored

Normal file

@@ -0,0 +1,142 @@
name: ci

on:
  push:
    branches:
      - "master"
      - "main"
      - "test/ci"

jobs:
  build_latest:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build and push latest
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ppcelery/one-api:latest
          cache-from: type=gha
          # cache-to: type=gha,mode=max

  build_hash:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Add SHORT_SHA env property with commit short sha
        run: echo "SHORT_SHA=`echo ${GITHUB_SHA} | cut -c1-7`" >> $GITHUB_ENV

      - name: Build and push hash label
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ppcelery/one-api:${{ env.SHORT_SHA }}
          cache-from: type=gha
          cache-to: type=gha,mode=max

  deploy:
    runs-on: ubuntu-latest
    needs: build_latest
    steps:
      - name: executing remote ssh commands using password
        uses: appleboy/ssh-action@v1.0.3
        with:
          host: ${{ secrets.TARGET_HOST }}
          username: ${{ secrets.TARGET_HOST_USERNAME }}
          password: ${{ secrets.TARGET_HOST_PASSWORD }}
          port: ${{ secrets.TARGET_HOST_SSH_PORT }}
          script: |
            docker pull ppcelery/one-api:latest
            cd /home/laisky/repo/VPS
            docker-compose -f b1-docker-compose.yml up -d --remove-orphans --force-recreate oneapi
            docker ps

  build_arm64_hash:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Add SHORT_SHA env property with commit short sha
        run: echo "SHORT_SHA=`echo ${GITHUB_SHA} | cut -c1-7`" >> $GITHUB_ENV

      - name: Build and push arm64 hash label
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ppcelery/one-api:arm64-${{ env.SHORT_SHA }}
          platforms: linux/arm64
          cache-from: type=gha
          cache-to: type=gha,mode=max

  build_arm64_latest:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build and push arm64 latest
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ppcelery/one-api:arm64-latest
          platforms: linux/arm64
          cache-from: type=gha
          # cache-to: type=gha,mode=max
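
In ci.yml, the `build_hash` and `build_arm64_hash` jobs run `echo ${GITHUB_SHA} | cut -c1-7` to keep the first seven characters of the commit SHA. They then push it as `ppcelery/one-api:<sha>` and `ppcelery/one-api:arm64-<sha>`. The Go sketch below restates that derivation for clarity. The function names are hypothetical, and the image name is taken from the workflow.

```go
package main

import (
	"fmt"
	"os"
)

// shortSHA mimics `cut -c1-7`: the first seven characters, or the whole
// string when it is shorter than that.
func shortSHA(sha string) string {
	if len(sha) < 7 {
		return sha
	}
	return sha[:7]
}

// imageTags returns the tags pushed by the build_hash and build_arm64_hash jobs.
func imageTags(image, sha string) []string {
	s := shortSHA(sha)
	return []string{
		fmt.Sprintf("%s:%s", image, s),
		fmt.Sprintf("%s:arm64-%s", image, s),
	}
}

func main() {
	// GitHub Actions sets GITHUB_SHA for every workflow run.
	for _, tag := range imageTags("ppcelery/one-api", os.Getenv("GITHUB_SHA")) {
		fmt.Println(tag)
	}
}
```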

49

.github/workflows/docker-image-amd64-en.yml

vendored

@@ -1,49 +0,0 @@
name: Publish Docker image (amd64, English)

on:
  push:
    tags:
      - '*'
  workflow_dispatch:
    inputs:
      name:
        description: 'reason'
        required: false
jobs:
  push_to_registries:
    name: Push Docker image to multiple registries
    runs-on: ubuntu-latest
    permissions:
      packages: write
      contents: read
    steps:
      - name: Check out the repo
        uses: actions/checkout@v3

      - name: Save version info
        run: |
          git describe --tags > VERSION

      - name: Translate
        run: |
          python ./i18n/translate.py --repository_path . --json_file_path ./i18n/en.json
      - name: Log in to Docker Hub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@v4
        with:
          images: |
            justsong/one-api-en

      - name: Build and push Docker images
        uses: docker/build-push-action@v3
        with:
          context: .
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}

54

.github/workflows/docker-image-amd64.yml

vendored

@@ -1,54 +0,0 @@
name: Publish Docker image (amd64)

on:
  push:
    tags:
      - '*'
  workflow_dispatch:
    inputs:
      name:
        description: 'reason'
        required: false
jobs:
  push_to_registries:
    name: Push Docker image to multiple registries
    runs-on: ubuntu-latest
    permissions:
      packages: write
      contents: read
    steps:
      - name: Check out the repo
        uses: actions/checkout@v3

      - name: Save version info
        run: |
          git describe --tags > VERSION

      - name: Log in to Docker Hub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Log in to the Container registry
        uses: docker/login-action@v2
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@v4
        with:
          images: |
            justsong/one-api
            ghcr.io/${{ github.repository }}

      - name: Build and push Docker images
        uses: docker/build-push-action@v3
        with:
          context: .
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}

62

.github/workflows/docker-image-arm64.yml

vendored

@@ -1,62 +0,0 @@
name: Publish Docker image (arm64)

on:
  push:
    tags:
      - '*'
      - '!*-alpha*'
  workflow_dispatch:
    inputs:
      name:
        description: 'reason'
        required: false
jobs:
  push_to_registries:
    name: Push Docker image to multiple registries
    runs-on: ubuntu-latest
    permissions:
      packages: write
      contents: read
    steps:
      - name: Check out the repo
        uses: actions/checkout@v3

      - name: Save version info
        run: |
          git describe --tags > VERSION

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v2

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v2

      - name: Log in to Docker Hub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Log in to the Container registry
        uses: docker/login-action@v2
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@v4
        with:
          images: |
            justsong/one-api
            ghcr.io/${{ github.repository }}

      - name: Build and push Docker images
        uses: docker/build-push-action@v3
        with:
          context: .
          platforms: linux/amd64,linux/arm64
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}

53

.github/workflows/lint.yml

vendored

Normal file

@@ -0,0 +1,53 @@
name: lint

on:
  push:
    branches:
      - "master"
      - "main"
      - "test/ci"

jobs:
  unit_tests:
    name: "Unit tests"
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Setup Go
        uses: actions/setup-go@v4
        with:
          go-version: ^1.23

      - name: Install ffmpeg
        run: sudo apt-get update && sudo apt-get install -y ffmpeg

      # When you execute your unit tests, make sure to use the "-coverprofile" flag to write a
      # coverage profile to a file. You will need the name of the file (e.g. "coverage.txt")
      # in the next step as well as the next job.
      - name: Test
        run: go test -cover -coverprofile=coverage.txt ./...

      - name: Archive code coverage results
        uses: actions/upload-artifact@v4
        with:
          name: code-coverage
          path: coverage.txt # Make sure to use the same file name you chose for the "-coverprofile" in the "Test" step

  code_coverage:
    name: "Code coverage report"
    if: github.event_name == 'pull_request' # Do not run when workflow is triggered by push to main branch
    runs-on: ubuntu-latest
    needs: unit_tests # Depends on the artifact uploaded by the "unit_tests" job
    steps:
      - uses: fgrosse/go-coverage-report@v1.0.2 # Consider using a Git revision for maximum security
        with:
          coverage-artifact-name: "code-coverage" # can be omitted if you used this default value
          coverage-file-name: "coverage.txt" # can be omitted if you used this default value

  commit_lint:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: wagoid/commitlint-github-action@v6

14

.github/workflows/linux-release.yml

vendored

@@ -5,7 +5,7 @@ permissions:
on:
  push:
    tags:
      - '*'
      - 'v*.*.*'
      - '!*-alpha*'
  workflow_dispatch:
    inputs:

@@ -20,10 +20,16 @@ jobs:
        uses: actions/checkout@v3
        with:
          fetch-depth: 0
      - name: Check repository URL
        run: |
          REPO_URL=$(git config --get remote.origin.url)
          if [[ $REPO_URL == *"pro" ]]; then
            exit 1
          fi
      - uses: actions/setup-node@v3
        with:
          node-version: 16
      - name: Build Frontend (theme default)
      - name: Build Frontend
        env:
          CI: ""
        run: |

@@ -38,7 +44,7 @@ jobs:
      - name: Build Backend (amd64)
        run: |
          go mod download
          go build -ldflags "-s -w -X 'one-api/common.Version=$(git describe --tags)' -extldflags '-static'" -o one-api
          go build -ldflags "-s -w -X 'github.com/songquanpeng/one-api/common.Version=$(git describe --tags)' -extldflags '-static'" -o one-api

      - name: Build Backend (arm64)
        run: |

@@ -56,4 +62,4 @@ jobs:
          draft: true
          generate_release_notes: true
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
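
The `Check repository URL` step added to the release workflows aborts the job when the `origin` remote URL ends in `pro`. In Bash, `[[ $REPO_URL == *"pro" ]]` is a pattern match: `*` is a glob, and the quoted `"pro"` is matched literally. The test is therefore true exactly when the string ends with `pro`. The Go sketch below makes that suffix semantics explicit. The function name and the example URLs are hypothetical. A URL with a trailing `.git` would not match.

```go
package main

import (
	"fmt"
	"strings"
)

// shouldAbortRelease reproduces the Bash test [[ $REPO_URL == *"pro" ]]:
// the pattern *"pro" matches any string that ends with the literal "pro".
func shouldAbortRelease(repoURL string) bool {
	return strings.HasSuffix(repoURL, "pro")
}

func main() {
	for _, u := range []string{
		"https://github.com/songquanpeng/one-api",
		"https://github.com/example/one-api-pro",
		"https://github.com/example/one-api-pro.git",
	} {
		fmt.Printf("%-45s abort=%v\n", u, shouldAbortRelease(u))
	}
}
```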

12

.github/workflows/macos-release.yml

vendored

@@ -5,7 +5,7 @@ permissions:
on:
  push:
    tags:
      - '*'
      - 'v*.*.*'
      - '!*-alpha*'
  workflow_dispatch:
    inputs:

@@ -20,10 +20,16 @@ jobs:
        uses: actions/checkout@v3
        with:
          fetch-depth: 0
      - name: Check repository URL
        run: |
          REPO_URL=$(git config --get remote.origin.url)
          if [[ $REPO_URL == *"pro" ]]; then
            exit 1
          fi
      - uses: actions/setup-node@v3
        with:
          node-version: 16
      - name: Build Frontend (theme default)
      - name: Build Frontend
        env:
          CI: ""
        run: |

@@ -38,7 +44,7 @@ jobs:
      - name: Build Backend
        run: |
          go mod download
          go build -ldflags "-X 'one-api/common.Version=$(git describe --tags)'" -o one-api-macos
          go build -ldflags "-X 'github.com/songquanpeng/one-api/common.Version=$(git describe --tags)'" -o one-api-macos
      - name: Release
        uses: softprops/action-gh-release@v1
        if: startsWith(github.ref, 'refs/tags/')
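
The release builds now pass `-X 'github.com/songquanpeng/one-api/common.Version=...'` instead of `-X 'one-api/common.Version=...'`. The Go linker flag `-X importpath.name=value` sets a package-level string variable at link time. It takes effect only when `importpath` is the package's full import path and the variable is uninitialized or initialized to a constant string. Otherwise the linker reports no error and the default value silently remains. That is presumably why the path was updated to match the module path. The self-contained sketch below uses `package main`, whose import path for `-X` is `main`. The `Version` variable here is illustrative.

```go
package main

import "fmt"

// Version is overwritten at link time, for example:
//   go build -ldflags "-X 'main.Version=v1.2.3'" -o demo
// Without the flag, or with a mismatched import path, it keeps this default.
var Version = "v0.0.0"

func main() {
	fmt.Println("version:", Version)
}
```

Built plainly, the program prints `version: v0.0.0`. Built with `-ldflags "-X 'main.Version=v1.2.3'"`, it prints `version: v1.2.3`.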

35

.github/workflows/pr.yml

vendored

Normal file

@@ -0,0 +1,35 @@
name: pr-check

on:
  pull_request:
    branches:
      - "master"
      - "main"

jobs:
  build_latest:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: try to build
        uses: docker/build-push-action@v5
        with:
          context: .
          push: false
          tags: ppcelery/one-api:pr
          cache-from: type=gha
          cache-to: type=gha,mode=max

14

.github/workflows/windows-release.yml

vendored

@@ -5,7 +5,7 @@ permissions:
on:
  push:
    tags:
      - '*'
      - 'v*.*.*'
      - '!*-alpha*'
  workflow_dispatch:
    inputs:

@@ -23,10 +23,16 @@ jobs:
        uses: actions/checkout@v3
        with:
          fetch-depth: 0
      - name: Check repository URL
        run: |
          REPO_URL=$(git config --get remote.origin.url)
          if [[ $REPO_URL == *"pro" ]]; then
            exit 1
          fi
      - uses: actions/setup-node@v3
        with:
          node-version: 16
      - name: Build Frontend (theme default)
      - name: Build Frontend
        env:
          CI: ""
        run: |

@@ -41,7 +47,7 @@ jobs:
      - name: Build Backend
        run: |
          go mod download
          go build -ldflags "-s -w -X 'one-api/common.Version=$(git describe --tags)'" -o one-api.exe
          go build -ldflags "-s -w -X 'github.com/songquanpeng/one-api/common.Version=$(git describe --tags)'" -o one-api.exe
      - name: Release
        uses: softprops/action-gh-release@v1
        if: startsWith(github.ref, 'refs/tags/')

@@ -50,4 +56,4 @@ jobs:
          draft: true
          generate_release_notes: true
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

7

.gitignore

vendored

@@ -6,4 +6,9 @@ upload
build
*.db-journal
logs
data
data
node_modules
/web/node_modules
cmd.md
.env
/one-api

31

Dockerfile

@@ -1,4 +1,4 @@
FROM node:16 as builder
FROM node:18 as builder

WORKDIR /web
COPY ./VERSION .

@@ -6,13 +6,22 @@ COPY ./web .

WORKDIR /web/default
RUN npm install
RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat VERSION) npm run build
RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat ../VERSION) npm run build

WORKDIR /web/berry
RUN npm install
RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat VERSION) npm run build
RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat ../VERSION) npm run build

FROM golang AS builder2
WORKDIR /web/air
RUN npm install
RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat ../VERSION) npm run build

FROM golang:1.23.3-bullseye AS builder2

RUN apt-get update
RUN apt-get install -y --no-install-recommends g++ make gcc git build-essential ca-certificates \
    && update-ca-certificates 2>/dev/null || true \
    && rm -rf /var/lib/apt/lists/*

ENV GO111MODULE=on \
    CGO_ENABLED=1 \

@@ -23,16 +32,16 @@ ADD go.mod go.sum ./
RUN go mod download
COPY . .
COPY --from=builder /web/build ./web/build
RUN go build -ldflags "-s -w -X 'one-api/common.Version=$(cat VERSION)' -extldflags '-static'" -o one-api
RUN go build -trimpath -ldflags "-s -w -X 'github.com/songquanpeng/one-api/common.Version=$(cat VERSION)' -extldflags '-static'" -o one-api

FROM alpine
FROM debian:bullseye

RUN apk update \
    && apk upgrade \
    && apk add --no-cache ca-certificates tzdata \
    && update-ca-certificates 2>/dev/null || true
RUN apt-get update
RUN apt-get install -y --no-install-recommends ca-certificates haveged tzdata ffmpeg \
    && update-ca-certificates 2>/dev/null || true \
    && rm -rf /var/lib/apt/lists/*

COPY --from=builder2 /build/one-api /
EXPOSE 3000
WORKDIR /data
ENTRYPOINT ["/one-api"]
ENTRYPOINT ["/one-api"]

53

README.en.md

@@ -101,7 +101,7 @@ Nginx reference configuration:
```
server{
  server_name openai.justsong.cn; # Modify your domain name accordingly

  location / {
    client_max_body_size 64m;
    proxy_http_version 1.1;

@@ -132,14 +132,14 @@ The initial account username is `root` and password is `123456`.
1. Download the executable file from [GitHub Releases](https://github.com/songquanpeng/one-api/releases/latest) or compile from source:
   ```shell
   git clone https://github.com/songquanpeng/one-api.git

   # Build the frontend
   cd one-api/web
   cd one-api/web/default
   npm install
   npm run build

   # Build the backend
   cd ..
   cd ../..
   go mod download
   go build -ldflags "-s -w" -o one-api
   ```

@@ -241,18 +241,45 @@ If the channel ID is not provided, load balancing will be used to distribute the
   + Example: `SESSION_SECRET=random_string`
3. `SQL_DSN`: When set, the specified database will be used instead of SQLite. Please use MySQL version 8.0.
   + Example: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`
4. `FRONTEND_BASE_URL`: When set, the specified frontend address will be used instead of the backend address.
4. `LOG_SQL_DSN`: When set, a separate database will be used for the `logs` table; please use MySQL or PostgreSQL.
   + Example: `LOG_SQL_DSN=root:123456@tcp(localhost:3306)/oneapi-logs`
5. `FRONTEND_BASE_URL`: When set, the specified frontend address will be used instead of the backend address.
   + Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`
5. `SYNC_FREQUENCY`: When set, the system will periodically sync configurations from the database, with the unit in seconds. If not set, no sync will happen.
6. `MEMORY_CACHE_ENABLED`: Enables in-memory caching, which can delay updates to user quotas. Valid values are `true` and `false`; if not set, it defaults to `false`.
7. `SYNC_FREQUENCY`: When set, the system will periodically sync configurations from the database, with the unit in seconds. If not set, no sync will happen.
   + Example: `SYNC_FREQUENCY=60`
6. `NODE_TYPE`: When set, specifies the node type. Valid values are `master` and `slave`. If not set, it defaults to `master`.
8. `NODE_TYPE`: When set, specifies the node type. Valid values are `master` and `slave`. If not set, it defaults to `master`.
   + Example: `NODE_TYPE=slave`
7. `CHANNEL_UPDATE_FREQUENCY`: When set, it periodically updates the channel balances, with the unit in minutes. If not set, no update will happen.
9. `CHANNEL_UPDATE_FREQUENCY`: When set, it periodically updates the channel balances, with the unit in minutes. If not set, no update will happen.
   + Example: `CHANNEL_UPDATE_FREQUENCY=1440`
8. `CHANNEL_TEST_FREQUENCY`: When set, it periodically tests the channels, with the unit in minutes. If not set, no test will happen.
10. `CHANNEL_TEST_FREQUENCY`: When set, it periodically tests the channels, with the unit in minutes. If not set, no test will happen.
   + Example: `CHANNEL_TEST_FREQUENCY=1440`
9. `POLLING_INTERVAL`: The time interval (in seconds) between requests when updating channel balances and testing channel availability. Default is no interval.
11. `POLLING_INTERVAL`: The time interval (in seconds) between requests when updating channel balances and testing channel availability. Default is no interval.
   + Example: `POLLING_INTERVAL=5`
12. `BATCH_UPDATE_ENABLED`: Enables aggregation of batched database updates, which can delay updates to user quotas. Valid values are `true` and `false`; if not set, it defaults to `false`.
   + Example: `BATCH_UPDATE_ENABLED=true`
   + If you run into too many database connections, try enabling this option.
13. `BATCH_UPDATE_INTERVAL`: The interval between aggregated batch updates, in seconds. Defaults to `5`.
   + Example: `BATCH_UPDATE_INTERVAL=5`
14. Request rate limits:
   + `GLOBAL_API_RATE_LIMIT`: Global API rate limit (excluding relay requests): the maximum number of requests per IP within three minutes. Defaults to `180`.
   + `GLOBAL_WEB_RATE_LIMIT`: Global web rate limit: the maximum number of requests per IP within three minutes. Defaults to `60`.
15. Encoder cache settings:
   + `TIKTOKEN_CACHE_DIR`: By default, the program downloads some common token encodings (for example, for `gpt-3.5-turbo`) when it starts. In unstable network environments or offline, this can cause startup problems. Set this directory to cache the data; the cache can then be copied to an offline environment.
   + `DATA_GYM_CACHE_DIR`: Currently serves the same purpose as `TIKTOKEN_CACHE_DIR`, but with lower priority.
16. `RELAY_TIMEOUT`: Relay timeout, in seconds. No timeout is set by default.
17. `RELAY_PROXY`: When set, API requests are sent through this proxy.
18. `USER_CONTENT_REQUEST_TIMEOUT`: Timeout for uploading and downloading user content, in seconds.
19. `USER_CONTENT_REQUEST_PROXY`: When set, user-uploaded content such as images is requested through this proxy.
20. `SQLITE_BUSY_TIMEOUT`: SQLite lock wait timeout, in milliseconds. Defaults to `3000`.
21. `GEMINI_SAFETY_SETTING`: Gemini safety setting. Defaults to `BLOCK_NONE`.
22. `GEMINI_VERSION`: The Gemini API version used by One API. Defaults to `v1`.
23. `THEME`: The system theme. Defaults to `default`; see [here](./web/README.md) for the available values.
24. `ENABLE_METRIC`: Whether to disable channels based on request success rate. Disabled by default; valid values are `true` and `false`.
25. `METRIC_QUEUE_SIZE`: Size of the queue used for request success-rate statistics. Defaults to `10`.
26. `METRIC_SUCCESS_RATE_THRESHOLD`: Request success-rate threshold. Defaults to `0.8`.
27. `INITIAL_ROOT_TOKEN`: If set, a root user token with this value is created automatically the first time the system starts.
28. `INITIAL_ROOT_ACCESS_TOKEN`: If set, a system management token with this value is created automatically for the root user the first time the system starts.
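
Items 24 to 26 above describe disabling channels by request success rate, with a queue size of `10` and a threshold of `0.8` by default. The Go sketch below shows one way such a check can work, under stated assumptions. It keeps the outcomes of the last `METRIC_QUEUE_SIZE` requests for a channel in a ring buffer. It flags the channel only when the buffer is full and the success ratio falls below `METRIC_SUCCESS_RATE_THRESHOLD`. The `successWindow` type and its methods are hypothetical; this is not One API's implementation.

```go
package main

import "fmt"

// successWindow is a fixed-size ring buffer of recent request outcomes
// for a single channel.
type successWindow struct {
	results []bool // true = request succeeded
	next    int    // slot that the next outcome overwrites
	filled  int    // number of slots holding a recorded outcome
}

func newSuccessWindow(size int) *successWindow {
	if size < 1 {
		size = 1
	}
	return &successWindow{results: make([]bool, size)}
}

// record stores one outcome, overwriting the oldest once the buffer is full.
func (w *successWindow) record(ok bool) {
	w.results[w.next] = ok
	w.next = (w.next + 1) % len(w.results)
	if w.filled < len(w.results) {
		w.filled++
	}
}

// rate is the success ratio over the recorded outcomes (1 when empty).
func (w *successWindow) rate() float64 {
	if w.filled == 0 {
		return 1
	}
	ok := 0
	// Until the buffer wraps, outcomes occupy slots 0..filled-1; after
	// that every slot is in use, so this slice covers both cases.
	for _, r := range w.results[:w.filled] {
		if r {
			ok++
		}
	}
	return float64(ok) / float64(w.filled)
}

// shouldDisable reports whether the window is full and its success ratio
// is below threshold.
func (w *successWindow) shouldDisable(threshold float64) bool {
	return w.filled == len(w.results) && w.rate() < threshold
}

func main() {
	w := newSuccessWindow(10) // METRIC_QUEUE_SIZE default
	for i := 0; i < 10; i++ {
		w.record(i%3 != 0) // fails for i = 0, 3, 6, 9
	}
	fmt.Printf("success rate %.2f, disable: %v\n", w.rate(), w.shouldDisable(0.8))
}
```

Waiting for a full window keeps a single early failure from disabling a channel that has barely been used.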

### Command Line Parameters
1. `--port <port_number>`: Specifies the port number on which the server listens. Defaults to `3000`.

@@ -285,7 +312,9 @@ If the channel ID is not provided, load balancing will be used to distribute the
   + Double-check that your interface address and API Key are correct.

## Related Projects
[FastGPT](https://github.com/labring/FastGPT): Knowledge question answering system based on the LLM
* [FastGPT](https://github.com/labring/FastGPT): Knowledge question answering system based on the LLM
* [VChart](https://github.com/VisActor/VChart): More than just a cross-platform charting library, but also an expressive data storyteller.
* [VMind](https://github.com/VisActor/VMind): Not just automatic, but also fantastic. Open-source solution for intelligent visualization.

## Note
This project is an open-source project. Please use it in compliance with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**. It must not be used for illegal purposes.

17

README.ja.md

17

README.ja.md

@@ -135,12 +135,12 @@ sudo service nginx restart

```shell
git clone https://github.com/songquanpeng/one-api.git

# Build the frontend
cd one-api/web
cd one-api/web/default
npm install
npm run build

# Build the backend
cd ..
cd ../..
go mod download
go build -ldflags "-s -w" -o one-api
```

@@ -242,17 +242,18 @@ graph LR

+ Example: `SESSION_SECRET=random_string`

3. `SQL_DSN`: If set, the specified database is used instead of SQLite. Please use MySQL version 8.0.

+ Example: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

4. `FRONTEND_BASE_URL`: If set, the specified frontend address is used instead of the backend address.

4. `LOG_SQL_DSN`: If set, a separate database is used for the `logs` table. Please use MySQL or PostgreSQL.

5. `FRONTEND_BASE_URL`: If set, the specified frontend address is used instead of the backend address.

+ Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`

5. `SYNC_FREQUENCY`: If set, the system periodically syncs its configuration from the database, with the interval given in seconds. If not set, no syncing is performed.

6. `SYNC_FREQUENCY`: If set, the system periodically syncs its configuration from the database, with the interval given in seconds. If not set, no syncing is performed.

+ Example: `SYNC_FREQUENCY=60`

6. `NODE_TYPE`: If set, specifies the node type. Valid values are `master` and `slave`. Defaults to `master` if not set.

7. `NODE_TYPE`: If set, specifies the node type. Valid values are `master` and `slave`. Defaults to `master` if not set.

+ Example: `NODE_TYPE=slave`

7. `CHANNEL_UPDATE_FREQUENCY`: If set, channel balances are updated periodically, with the interval given in minutes. If not set, no updates are performed.

8. `CHANNEL_UPDATE_FREQUENCY`: If set, channel balances are updated periodically, with the interval given in minutes. If not set, no updates are performed.

+ Example: `CHANNEL_UPDATE_FREQUENCY=1440`

8. `CHANNEL_TEST_FREQUENCY`: If set, channels are tested periodically. If not set, no testing is performed.

9. `CHANNEL_TEST_FREQUENCY`: If set, channels are tested periodically. If not set, no testing is performed.

+ Example: `CHANNEL_TEST_FREQUENCY=1440`

9. `POLLING_INTERVAL`: The interval, in seconds, between requests when updating channel balances and testing channel availability. No interval by default.

10. `POLLING_INTERVAL`: The interval, in seconds, between requests when updating channel balances and testing channel availability. No interval by default.

+ Example: `POLLING_INTERVAL=5`

### Command Line Parameters

483

README.md

@@ -1,428 +1,117 @@

<p align="right">
   <strong>Chinese</strong> | <a href="./README.en.md">English</a> | <a href="./README.ja.md">Japanese</a>
</p>

<p align="center">
  <a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/default/public/logo.png" width="150" height="150" alt="one-api logo"></a>
</p>

<div align="center">

# One API

_✨ Access all large language models through the standard OpenAI API format, out of the box ✨_

The original author of one-api has been inactive for a long time, leaving a backlog of PRs that cannot be merged. I therefore forked the code and merged the PRs I consider important. Everyone is welcome to submit PRs, and I will respond to and handle them promptly.

</div>

Fully compatible with the upstream version; you can switch simply by replacing the container image. Docker images:

<p align="center">
  <a href="https://raw.githubusercontent.com/songquanpeng/one-api/main/LICENSE">
    <img src="https://img.shields.io/github/license/songquanpeng/one-api?color=brightgreen" alt="license">
  </a>
  <a href="https://github.com/songquanpeng/one-api/releases/latest">
    <img src="https://img.shields.io/github/v/release/songquanpeng/one-api?color=brightgreen&include_prereleases" alt="release">
  </a>
  <a href="https://hub.docker.com/repository/docker/justsong/one-api">
    <img src="https://img.shields.io/docker/pulls/justsong/one-api?color=brightgreen" alt="docker pull">
  </a>
  <a href="https://github.com/songquanpeng/one-api/releases/latest">
    <img src="https://img.shields.io/github/downloads/songquanpeng/one-api/total?color=brightgreen&include_prereleases" alt="release">
  </a>
  <a href="https://goreportcard.com/report/github.com/songquanpeng/one-api">
    <img src="https://goreportcard.com/badge/github.com/songquanpeng/one-api" alt="GoReportCard">
  </a>
</p>

- `ppcelery/one-api:latest`
- `ppcelery/one-api:arm64-latest`

<p align="center">
  <a href="https://github.com/songquanpeng/one-api#部署">Deployment Guide</a>
  ·
  <a href="https://github.com/songquanpeng/one-api#使用方法">Usage</a>
  ·
  <a href="https://github.com/songquanpeng/one-api/issues">Feedback</a>
  ·
  <a href="https://github.com/songquanpeng/one-api#截图展示">Screenshots</a>
  ·
  <a href="https://openai.justsong.cn/">Online Demo</a>
  ·
  <a href="https://github.com/songquanpeng/one-api#常见问题">FAQ</a>
  ·
  <a href="https://github.com/songquanpeng/one-api#相关项目">Related Projects</a>
  ·
  <a href="https://iamazing.cn/page/reward">Donate</a>
</p>

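## Menu

> [!NOTE]
> This project is open source. Users must comply with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**, and must not use it for illegal purposes.
>
> In accordance with the [Interim Measures for the Management of Generative Artificial Intelligence Services](http://www.cac.gov.cn/2023-07/13/c_1690898327029107.htm), do not provide any generative AI service that has not completed the required filing to the public in mainland China.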

- [One API](#one-api)
- [Menu](#menu)
- [New Features](#new-features)
- [(Merged) Support gpt-vision](#merged-support-gpt-vision)
- [Support update user's remained quota](#support-update-users-remained-quota)
- [(Merged) Support aws claude](#merged-support-aws-claude)
- [Support openai images edits](#support-openai-images-edits)
- [Support gemini-2.0-flash-exp](#support-gemini-20-flash-exp)
- [Support replicate flux \& remix](#support-replicate-flux--remix)
- [Support replicate chat models](#support-replicate-chat-models)
- [Support OpenAI O1/O1-mini/O1-preview](#support-openai-o1o1-minio1-preview)
- [Get request's cost](#get-requests-cost)
- [Support Vertex Imagen3](#support-vertex-imagen3)
- [Support gpt-4o-audio](#support-gpt-4o-audio)
- [Bug fix](#bug-fix)
- [The token balance cannot be edited](#the-token-balance-cannot-be-edited)
- [Whisper's transcription only charges for the length of the input audio](#whispers-transcription-only-charges-for-the-length-of-the-input-audio)

> [!WARNING]
> The latest image pulled with Docker may be an `alpha` release. If you need stability, pin a specific version manually.

## New Features

> [!WARNING]
> After logging in as the root user for the first time, be sure to change the default password `123456`!

### (Merged) Support gpt-vision

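## Features

1. Supports multiple large models:

+ [x] [OpenAI ChatGPT series models](https://platform.openai.com/docs/guides/gpt/chat-completions-api) (including the [Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference))

+ [x] [Anthropic Claude series models](https://anthropic.com)

+ [x] [Google PaLM2/Gemini series models](https://developers.generativeai.google)

+ [x] [Baidu ERNIE Bot (Wenxin Yiyan) series models](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

+ [x] [Alibaba Tongyi Qianwen series models](https://help.aliyun.com/document_detail/2400395.html)

+ [x] [iFLYTEK Spark cognitive large model](https://www.xfyun.cn/doc/spark/Web.html)

+ [x] [Zhipu ChatGLM series models](https://bigmodel.cn)

+ [x] [360 Zhinao](https://ai.360.cn)

+ [x] [Tencent Hunyuan large model](https://cloud.tencent.com/document/product/1729)

2. Supports configuring mirrors and many [third-party proxy services](https://iamazing.cn/page/openai-api-third-party-services).

3. Supports accessing multiple channels through **load balancing**.

4. Supports **stream mode**, enabling a typewriter effect through streaming.

5. Supports **multi-machine deployment**; [see here for details](#多机部署).

6. Supports **token management**, including setting token expiration time and quota.

7. Supports **redemption code management**, including batch generation and export of redemption codes, which can be used to top up accounts.

8. Supports **channel management**, including batch creation of channels.

9. Supports **user groups** and **channel groups**, with a different ratio configurable for each group.

10. Supports **setting a model list** per channel.

11. Supports **viewing quota details**.

12. Supports **user referral rewards**.

13. Supports displaying quota in US dollars.

14. Supports publishing announcements, setting a top-up link, and setting the initial quota for new users.

15. Supports model mapping, which redirects the model requested by the user. Do not configure it unless necessary: once set, the request body is reconstructed instead of being passed through directly, so some fields that are not yet officially supported may fail to be forwarded.

16. Supports automatic retry on failure.

17. Supports the image generation API.

18. Supports [Cloudflare AI Gateway](https://developers.cloudflare.com/ai-gateway/providers/openai/); just enter `https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai` in the proxy field of the channel settings.

19. Supports extensive **customization**:

    1. Custom system name, logo, and footer.

    2. Custom home and About pages, written in HTML & Markdown or embedded from a separate web page via an iframe.

20. Supports accessing the management API with a system access token (a bearer token that replaces the cookie; you can capture network traffic to see how the API is used).

21. Supports Cloudflare Turnstile user verification.

22. Supports user management with **multiple login and registration methods**:

+ Email login and registration (with an optional registration email whitelist), plus password reset via email.

+ [GitHub OAuth](https://github.com/settings/applications/new).

+ WeChat Official Account authorization (requires additionally deploying [WeChat Server](https://github.com/songquanpeng/wechat-server)).

23. Supports theme switching via the `THEME` environment variable (default `default`). PRs for more themes are welcome; see [here](./web/README.md) for details.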

### Support update user's remained quota

## Deployment

### Deploying with Docker

```shell
# Deployment command using SQLite:
docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api
# Deployment command using MySQL: add `-e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi"` to the command above and adjust the database connection parameters yourself. If you are unsure how, see the Environment Variables section below.
# For example:
docker run --name one-api -d --restart always -p 3000:3000 -e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi" -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api
```

You can update the used quota with the API key of any token, so that usage incurred elsewhere can be aggregated into one-api for centralized management.

Here, the first `3000` in `-p 3000:3000` is the host port, which you can change as needed.

Data and logs are stored in the host directory `/home/ubuntu/data/one-api`. Make sure this directory exists and is writable, or change it to a suitable directory.

### (Merged) Support aws claude

If startup fails, add `--privileged=true`; see https://github.com/songquanpeng/one-api/issues/482 for details.

- [feat: support aws bedrockruntime claude3 #1328](https://github.com/songquanpeng/one-api/pull/1328)

- [feat: add new claude models #1910](https://github.com/songquanpeng/one-api/pull/1910)

If the image above cannot be pulled, try GitHub's Docker registry instead by replacing `justsong/one-api` above with `ghcr.io/songquanpeng/one-api`.

If you expect high concurrency, you **must** set `SQL_DSN`; see the [Environment Variables](#环境变量) section below for details.

### Support openai images edits

Update command: `docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower -cR`

- [feat: support openai images edits api #1369](https://github.com/songquanpeng/one-api/pull/1369)

Reference Nginx configuration:

```
server {
    server_name openai.justsong.cn;  # Change this to your own domain

    location / {
        client_max_body_size 64m;
        proxy_http_version 1.1;
        proxy_pass http://localhost:3000;  # Change this to your own port
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_cache_bypass $http_upgrade;
        proxy_set_header Accept-Encoding gzip;
        proxy_read_timeout 300s;  # GPT-4 needs a long timeout; adjust as needed
    }
}
```

### Support gemini-2.0-flash-exp

- [feat: add gemini-2.0-flash-exp #1983](https://github.com/songquanpeng/one-api/pull/1983)

### Support replicate flux & remix

- [feature: support image generation with replicate #1954](https://github.com/songquanpeng/one-api/pull/1954)

- [feat: image edits/inpainting support for replicate's flux remix #1986](https://github.com/songquanpeng/one-api/pull/1986)

### Support replicate chat models

- [feat: support replicate chat models #1989](https://github.com/songquanpeng/one-api/pull/1989)

### Support OpenAI O1/O1-mini/O1-preview

- [feat: add openai o1 #1990](https://github.com/songquanpeng/one-api/pull/1990)

### Get request's cost

Each chat completion response includes an `X-Oneapi-Request-Id` header. Pass this request ID to `GET /api/cost/request/:request_id` to get the cost of that request.

The returned structure is:

```go
type UserRequestCost struct {
	Id          int     `json:"id"`
	CreatedTime int64   `json:"created_time" gorm:"bigint"`
	UserID      int     `json:"user_id"`
	RequestID   string  `json:"request_id"` // the X-Oneapi-Request-Id value
	Quota       int64   `json:"quota"`      // quota consumed by the request
	CostUSD     float64 `json:"cost_usd" gorm:"-"` // gorm:"-": not stored in the database
}
```

Then configure HTTPS with Let's Encrypt's certbot:

```bash
# Install certbot on Ubuntu:
sudo snap install --classic certbot
sudo ln -s /snap/bin/certbot /usr/bin/certbot
# Generate certificates & update the Nginx configuration
sudo certbot --nginx
# Follow the prompts
# Restart Nginx
sudo service nginx restart
```

### Support Vertex Imagen3

The initial account username is `root` and the password is `123456`.

- [feat: support vertex imagen3 #2030](https://github.com/songquanpeng/one-api/pull/2030)

### Deploying with Docker Compose

### Support gpt-4o-audio

> Only the startup method differs; the parameter settings are the same. See the Docker deployment section.

- [feat: support gpt-4o-audio #2032](https://github.com/songquanpeng/one-api/pull/2032)

```shell
# Currently starts with MySQL; data is stored in the ./data/mysql folder
docker-compose up -d

# Check the deployment status
docker-compose ps
```

### Manual Deployment

1. Download the executable from [GitHub Releases](https://github.com/songquanpeng/one-api/releases/latest), or build from source:

```shell
git clone https://github.com/songquanpeng/one-api.git

# Build the frontend
cd one-api/web
npm install
npm run build

# Build the backend
cd ..
go mod download
go build -ldflags "-s -w" -o one-api
```

2. Run:

```shell
chmod u+x one-api
./one-api --port 3000 --log-dir ./logs
```

3. Visit [http://localhost:3000/](http://localhost:3000/) and log in. The initial account username is `root` and the password is `123456`.

## Bug fix

For a more detailed deployment tutorial, [see here](https://iamazing.cn/page/how-to-deploy-a-website).

### The token balance cannot be edited

### Multi-machine Deployment <a id="多机部署"></a>

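1. Set `SESSION_SECRET` to the same value on all servers.

2. `SQL_DSN` must be set so that a MySQL database is used instead of SQLite, and all servers must connect to the same database.

3. All slave servers must set `NODE_TYPE` to `slave`; a server that does not set it defaults to master.

4. Once `SYNC_FREQUENCY` is set, the server periodically syncs its configuration from the database. When using a remote database, setting this option and enabling Redis is recommended on both master and slave servers.

5. Slave servers may optionally set `FRONTEND_BASE_URL` to redirect page requests to the master server.

6. Install Redis **separately** on each slave server and set `REDIS_CONN_STRING`. While the cache has not expired, this avoids database access entirely and reduces latency.

7. If the master server also has high database latency, enable Redis on it too and set `SYNC_FREQUENCY` so that it periodically syncs its configuration from the database.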
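The multi-machine rules above can be expressed as a pre-flight check on each node's configuration. The Go sketch below is illustrative only: `nodeConfig` and `checkNodeConfig` are hypothetical (not part of One API), an empty `SQL_DSN` is treated as SQLite, and only rules 1 to 3 and 6 are checked.

```go
package main

import "fmt"

// nodeConfig holds the environment variables relevant to multi-machine deployment.
type nodeConfig struct {
	SessionSecret   string // SESSION_SECRET
	SQLDSN          string // SQL_DSN; empty means SQLite
	NodeType        string // NODE_TYPE; empty means master
	RedisConnString string // REDIS_CONN_STRING
}

// checkNodeConfig returns the multi-machine rules that cfg violates.
// sharedSecret is the SESSION_SECRET every node in the cluster is expected to use.
func checkNodeConfig(cfg nodeConfig, sharedSecret string) []string {
	var problems []string
	if cfg.SessionSecret == "" || cfg.SessionSecret != sharedSecret {
		problems = append(problems, "SESSION_SECRET must be the same on all servers")
	}
	if cfg.SQLDSN == "" {
		problems = append(problems, "SQL_DSN must point at the shared database (SQLite cannot be shared)")
	}
	nodeType := cfg.NodeType
	if nodeType == "" {
		nodeType = "master" // documented default
	}
	if nodeType != "master" && nodeType != "slave" {
		problems = append(problems, "NODE_TYPE must be master or slave")
	}
	if nodeType == "slave" && cfg.RedisConnString == "" {
		problems = append(problems, "slave servers should set REDIS_CONN_STRING")
	}
	return problems
}

func main() {
	slave := nodeConfig{SessionSecret: "s3cret", SQLDSN: "root:123456@tcp(db:3306)/oneapi", NodeType: "slave"}
	for _, p := range checkNodeConfig(slave, "s3cret") {
		fmt.Println("-", p) // - slave servers should set REDIS_CONN_STRING
	}
}
```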

- [BUGFIX: some issues when updating tokens #1933](https://github.com/songquanpeng/one-api/pull/1933)

For details on how to use the environment variables, see [here](#环境变量).

### Whisper's transcription only charges for the length of the input audio

### Baota (BT Panel) Deployment Tutorial

See [#175](https://github.com/songquanpeng/one-api/issues/175) for details.

If you see a blank page after deployment, see [#97](https://github.com/songquanpeng/one-api/issues/97).

### Deploying Third-Party Services to Use with One API

> PRs adding more examples are welcome.

#### ChatGPT Next Web

Project homepage: https://github.com/Yidadaa/ChatGPT-Next-Web

```bash
docker run --name chat-next-web -d -p 3001:3000 yidadaa/chatgpt-next-web
```

Adjust the port number as needed, then set the API address (for example, https://openai.justsong.cn/) and the API Key on the page.

#### ChatGPT Web

Project homepage: https://github.com/Chanzhaoyu/chatgpt-web

```bash
docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://openai.justsong.cn -e OPENAI_API_KEY=sk-xxx chenzhaoyu94/chatgpt-web
```

Adjust the port number, `OPENAI_API_BASE_URL`, and `OPENAI_API_KEY` as needed.

#### QChatGPT - QQ Bot

Project homepage: https://github.com/RockChinQ/QChatGPT

After deploying it according to its documentation, in `config.py` set `reverse_proxy` in the `openai_config` option to the One API backend address, set `api_key` to a key generated by One API, and set the `model` parameter in the `completion_api_params` option to a model name supported by One API.

You can install the [Switcher plugin](https://github.com/RockChinQ/Switcher) to switch models at runtime.

### Deploying to Third-Party Platforms

<details>
<summary><strong>Deploy to Sealos</strong></summary>
<div>

> Sealos servers are located outside mainland China, so no extra network handling is needed, and it supports high concurrency & dynamic scaling.

Click the button below for one-click deployment (if you get a 404 after deploying, wait 3 to 5 minutes):

[Deploy on Sealos](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

</div>
</details>

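<details>
<summary><strong>Deploy to Zeabur</strong></summary>
<div>

> Zeabur servers are located outside mainland China, which takes care of network issues automatically, and its free tier is sufficient for personal use.

[Deploy on Zeabur](https://zeabur.com/templates/7Q0KO3)

1. First, fork the repository.

2. Go to [Zeabur](https://zeabur.com?referralCode=songquanpeng), log in, and open the console.

3. Create a new Project. In Service -> Add Service, choose Marketplace, select MySQL, and note down the connection parameters (username, password, address, port).

4. Using these connection parameters, run ```create database `one-api` ``` to create the database.

5. Then, in Service -> Add Service, choose Git (authorization is required on first use) and select your forked repository.

6. Deployment starts automatically; cancel it first. Under Variable below, add `PORT` with the value `3000` and `SQL_DSN` with the value `<username>:<password>@tcp(<addr>:<port>)/one-api`, then save. Note that if `SQL_DSN` is not set, data will not be persisted and will be lost after redeployment.

7. Select Redeploy.

8. Under Domains below, choose a domain prefix such as "my-one-api"; the resulting domain will be "my-one-api.zeabur.app". You can also CNAME your own domain.

9. Wait for the deployment to finish, then click the generated domain to open One API.

</div>
</details>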

<details>
<summary><strong>Deploy to Render</strong></summary>
<div>

> Render offers a free tier, which can be increased further after adding a payment card.

Render can deploy the Docker image directly, without forking the repository: https://dashboard.render.com

</div>
</details>

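## Configuration

The system works out of the box.

You can configure it through environment variables or command-line parameters.

Once the system has started, log in as the `root` user to configure it further.

**Note**: If you are unsure what a configuration item means, temporarily clear its value to see further hint text.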

|

||||

|

||||

## 使用方法

|

||||

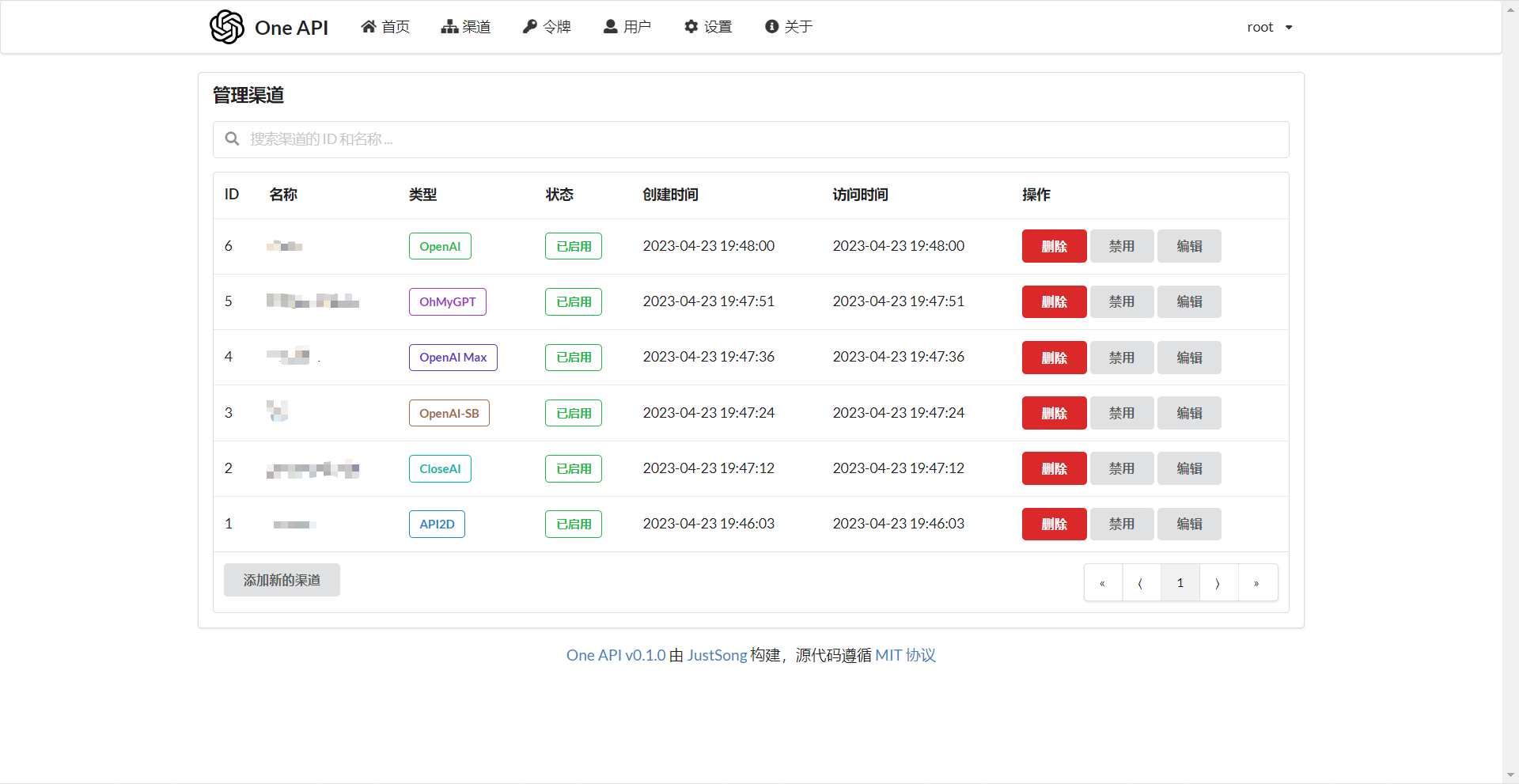

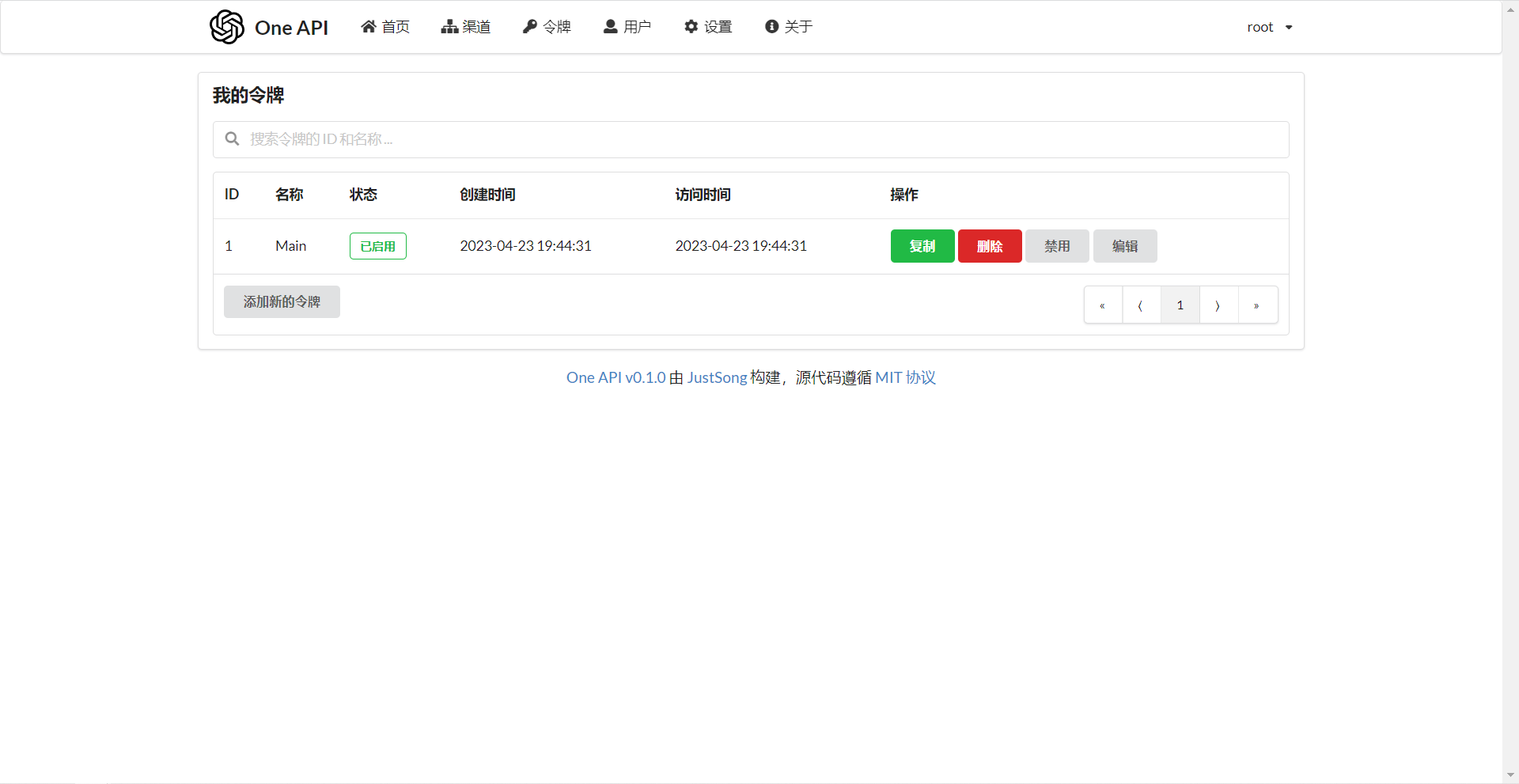

在`渠道`页面中添加你的 API Key,之后在`令牌`页面中新增访问令牌。

You can then use your token to access One API in the same way as the [OpenAI API](https://platform.openai.com/docs/api-reference/introduction).

Wherever you use the OpenAI API, set the API Base to your One API deployment address (for example, `https://openai.justsong.cn`) and set the API Key to a token generated in One API.

Note that the exact format of the API Base depends on the client you use.

For example, for OpenAI's official library:

```bash
OPENAI_API_KEY="sk-xxxxxx"
OPENAI_API_BASE="https://<HOST>:<PORT>/v1"
```

```mermaid
graph LR
    A(User)
    A --->|Request with a key issued by One API| B(One API)
    B -->|Relay request| C(OpenAI)
    B -->|Relay request| D(Azure)
    B -->|Relay request| E(Other downstream channels in OpenAI API format)
    B -->|Relay and modify request and response bodies| F(Downstream channels in non-OpenAI API format)
```

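You can choose which channel handles a request by appending the channel ID to the token, for example: `Authorization: Bearer ONE_API_KEY-CHANNEL_ID`.

Note that only tokens created by an admin user can specify a channel ID.

Without a channel ID, requests are distributed across multiple channels by load balancing.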
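The channel-pinning convention above is plain string concatenation on the token. A minimal Go sketch, assuming channel IDs are positive integers; `channelAuthHeader` is a hypothetical helper:

```go
package main

import (
	"fmt"
	"strconv"
)

// channelAuthHeader returns the Authorization header value for a One API token.
// channelID <= 0 means "no pinned channel", so the request is load-balanced.
// Pinning only takes effect for tokens created by an admin user.
func channelAuthHeader(token string, channelID int) string {
	if channelID <= 0 {
		return "Bearer " + token
	}
	return "Bearer " + token + "-" + strconv.Itoa(channelID)
}

func main() {
	fmt.Println(channelAuthHeader("sk-abc", 0)) // Bearer sk-abc
	fmt.Println(channelAuthHeader("sk-abc", 3)) // Bearer sk-abc-3
}
```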

### Environment Variables <a id="环境变量"></a>

1. `REDIS_CONN_STRING`: If set, Redis is used as the cache.

+ Example: `REDIS_CONN_STRING=redis://default:redispw@localhost:49153`

+ If database access latency is already low, there is no need to enable Redis; enabling it can instead cause stale data.

2. `SESSION_SECRET`: If set, a fixed session secret is used, so logged-in users' cookies remain valid after the system restarts.

+ Example: `SESSION_SECRET=random_string`

3. `SQL_DSN`: If set, the specified database is used instead of SQLite. Use MySQL or PostgreSQL.

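+ Examples:

+ MySQL: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

+ PostgreSQL: `SQL_DSN=postgres://postgres:123456@localhost:5432/oneapi` (support in progress; feedback welcome)

+ Note that the `oneapi` database must be created beforehand. Tables do not need to be created manually; the program creates them automatically.

+ If using a local database: add `--network="host"` to the deployment command so that the program inside the container can reach MySQL on the host.

+ If using a cloud database whose server requires a TLS connection, add `?tls=skip-verify` to the connection parameters.

+ Adjust the following parameters to match your database configuration (or keep the defaults):

+ `SQL_MAX_IDLE_CONNS`: Maximum number of idle connections. Defaults to `100`.

+ `SQL_MAX_OPEN_CONNS`: Maximum number of open connections. Defaults to `1000`.

+ If you get `Error 1040: Too many connections`, reduce this value accordingly.

+ `SQL_CONN_MAX_LIFETIME`: Maximum lifetime of a connection, in minutes. Defaults to `60`.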

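4. `FRONTEND_BASE_URL`: If set, page requests are redirected to the specified address. Set this on slave servers only.

+ Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`

5. `MEMORY_CACHE_ENABLED`: Enables the in-memory cache, which introduces some delay in user quota updates. Valid values are `true` and `false`; defaults to `false` if not set.

+ Example: `MEMORY_CACHE_ENABLED=true`

6. `SYNC_FREQUENCY`: How often, in seconds, configuration is synced from the database when caching is enabled. Defaults to `600` seconds.

+ Example: `SYNC_FREQUENCY=60`

7. `NODE_TYPE`: If set, specifies the node type. Valid values are `master` and `slave`; defaults to `master` if not set.

+ Example: `NODE_TYPE=slave`

8. `CHANNEL_UPDATE_FREQUENCY`: If set, channel balances are updated periodically, with the interval given in minutes. If not set, no updates are performed.

+ Example: `CHANNEL_UPDATE_FREQUENCY=1440`

9. `CHANNEL_TEST_FREQUENCY`: If set, channels are checked periodically, with the interval given in minutes. If not set, no checks are performed.

+ Example: `CHANNEL_TEST_FREQUENCY=1440`

10. `POLLING_INTERVAL`: The interval, in seconds, between requests when batch-updating channel balances and testing channel availability. No interval by default.

+ Example: `POLLING_INTERVAL=5`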

11. `BATCH_UPDATE_ENABLED`:启用数据库批量更新聚合,会导致用户额度的更新存在一定的延迟可选值为 `true` 和 `false`,未设置则默认为 `false`。

|

||||

+ 例子:`BATCH_UPDATE_ENABLED=true`

|

||||

+ 如果你遇到了数据库连接数过多的问题,可以尝试启用该选项。

|

||||

12. `BATCH_UPDATE_INTERVAL=5`:批量更新聚合的时间间隔,单位为秒,默认为 `5`。

|

||||

+ 例子:`BATCH_UPDATE_INTERVAL=5`

|

||||

13. 请求频率限制:

|

||||

+ `GLOBAL_API_RATE_LIMIT`:全局 API 速率限制(除中继请求外),单 ip 三分钟内的最大请求数,默认为 `180`。

|

||||

+ `GLOBAL_WEB_RATE_LIMIT`:全局 Web 速率限制,单 ip 三分钟内的最大请求数,默认为 `60`。

|

||||

14. 编码器缓存设置:

|

||||

+ `TIKTOKEN_CACHE_DIR`:默认程序启动时会联网下载一些通用的词元的编码,如:`gpt-3.5-turbo`,在一些网络环境不稳定,或者离线情况,可能会导致启动有问题,可以配置此目录缓存数据,可迁移到离线环境。

|

||||

+ `DATA_GYM_CACHE_DIR`:目前该配置作用与 `TIKTOKEN_CACHE_DIR` 一致,但是优先级没有它高。

|

||||

15. `RELAY_TIMEOUT`:中继超时设置,单位为秒,默认不设置超时时间。

|

||||

16. `SQLITE_BUSY_TIMEOUT`:SQLite 锁等待超时设置,单位为毫秒,默认 `3000`。

|

||||

17. `GEMINI_SAFETY_SETTING`:Gemini 的安全设置,默认 `BLOCK_NONE`。

|

||||

18. `THEME`:系统的主题设置,默认为 `default`,具体可选值参考[此处](./web/README.md)。

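### Command Line Parameters

1. `--port <port_number>`: Specifies the port number on which the server listens. Defaults to `3000`.

+ Example: `--port 3000`

2. `--log-dir <log_dir>`: Specifies the log directory. If not set, logs are saved to the `logs` folder under the working directory.

+ Example: `--log-dir ./logs`

3. `--version`: Prints the system version number and exits.

4. `--help`: Shows command usage help and parameter descriptions.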

## Demo

### Online Demo

Note that this demo site does not provide service to the public:

https://openai.justsong.cn

### Screenshots

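## FAQ

1. What is quota? How is it calculated? Is One API's quota calculation wrong?

+ Quota = group ratio * model ratio * (prompt tokens + completion tokens * completion ratio)

+ The completion ratio is fixed at 1.33 for GPT-3.5 and 2 for GPT-4, consistent with the official pricing.

+ In non-stream mode, the official API returns the total number of tokens consumed, but note that prompt and completion tokens are charged at different ratios.

+ Note that One API's default ratios are the official ratios and have already been adjusted accordingly.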

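2. Why am I told my quota is insufficient when my account has enough?

+ Check whether your token's quota is sufficient; it is separate from the account quota.

+ Token quota only lets users cap the maximum usage, and users can set it freely.

3. Getting a "no available channels" error?

+ Check your user group and channel group settings.

+ Also check the channel's model settings.

4. Channel test error: `invalid character '<' looking for beginning of value`

+ This happens because the response is not valid JSON but an HTML page.

+ Most likely the IP of your deployment server or your proxy node has been blocked by Cloudflare.

5. ChatGPT Next Web error: `Failed to fetch`

+ Do not set `BASE_URL` when deploying.

+ Check that your API address and API Key are entered correctly.

+ Check whether HTTPS is enabled; browsers block HTTP requests made from a page served over HTTPS.

6. Error: `当前分组负载已饱和,请稍后再试` (the current group is overloaded, please try again later)

+ The upstream channel returned HTTP 429 (Too Many Requests).

7. Will I lose my data after upgrading?

+ Not if you use MySQL.

+ If you use SQLite, mount a volume as in the deployment command given above to persist the `one-api.db` database file; otherwise the data will be lost when the container restarts.

8. Do I need to change the database before upgrading?

+ Usually not; the system adjusts it automatically during initialization.

+ If changes are needed, I will describe them in the changelog and provide a script.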
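The quota formula in FAQ item 1 can be computed directly. The Go sketch below applies it literally; the ratios in the example (group ratio 1, model ratio 15, completion ratio 2) are illustrative rather than One API's configured values, and rounding up to a whole quota unit is an assumption of this sketch.

```go
package main

import (
	"fmt"
	"math"
)

// quota applies the formula from FAQ item 1:
// quota = groupRatio * modelRatio * (promptTokens + completionTokens*completionRatio).
// Rounding up to a whole unit is an assumption of this sketch.
func quota(groupRatio, modelRatio float64, promptTokens, completionTokens int, completionRatio float64) int64 {
	raw := groupRatio * modelRatio * (float64(promptTokens) + float64(completionTokens)*completionRatio)
	return int64(math.Ceil(raw))
}

func main() {
	// Illustrative ratios only: group ratio 1, model ratio 15, completion ratio 2.
	// 1 * 15 * (100 + 50*2) = 3000
	fmt.Println(quota(1, 15, 100, 50, 2)) // 3000
}
```

Because the completion ratio multiplies only completion tokens, the same total token count can cost different amounts depending on how it splits between prompt and completion, which is why non-stream totals alone are not enough to check a bill.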

## Related Projects

* [FastGPT](https://github.com/labring/FastGPT): A knowledge-base question-answering system built on large language models

* [ChatGPT Next Web](https://github.com/Yidadaa/ChatGPT-Next-Web): Get your own cross-platform ChatGPT app in one click

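## Note

This project is open-sourced under the MIT license. **In addition**, the attribution and a link to this project must be kept at the bottom of the page. If you do not want to keep the attribution, you must first obtain authorization.

The same applies to derivative projects based on this project.

Under the MIT license, users bear all risks and responsibilities of using this project; the developers of this open-source project are not liable.

- [feat(audio): count whisper-1 quota by audio duration #2022](https://github.com/songquanpeng/one-api/pull/2022)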

29

common/blacklist/main.go

Normal file

@@ -0,0 +1,29 @@

package blacklist

import (
	"fmt"
	"sync"
)

var blackList sync.Map

func init() {
	blackList = sync.Map{}
}

func userId2Key(id int) string {
	return fmt.Sprintf("userid_%d", id)
}

func BanUser(id int) {
	blackList.Store(userId2Key(id), true)
}

func UnbanUser(id int) {
	blackList.Delete(userId2Key(id))
}

func IsUserBanned(id int) bool {
	_, ok := blackList.Load(userId2Key(id))