mirror of

https://github.com/songquanpeng/one-api.git

synced 2025-10-23 01:43:42 +08:00

Compare commits

103 Commits

v0.4.7-alp

...

v0.5.0-alp

| Author | SHA1 | Date |
|---|---|---|
| | dccd66b852 | |
| | 2fcd6852e0 | |
| | 9b4d1964d4 | |
| | 806bf8241c | |
| | ce93c9b6b2 | |
| | 4ec4289565 | |
| | 3dc5a0f91d | |
| | 80a846673a | |
| | 26c6719ea3 | |
| | c87e05bfc2 | |
| | e6938bd236 | |
| | 8f721d67a5 | |
| | fcc1e2d568 | |
| | 9a1db61675 | |
| | 3c940113ab | |
| | 0495b9a0d7 | |
| | 12a0e7105e | |
| | e628b643cd | |
| | 675847bf98 | |
| | 2ff15baf66 | |
| | 4139a7036f | |
| | 02da0b51f8 | |
| | 35cfebee12 | |
| | 0e088f7c3e | |
| | f61d326721 | |
| | 74b06b643a | |
| | ccf7709e23 | |
| | d592e2c8b8 | |
| | b520b54625 | |
| | 81c5901123 | |
| | abc53cb208 | |
| | 2b17bb8dd7 | |
| | ea73201b6f | |
| | 6215d2e71c | |
| | d17bdc40a7 | |
| | 280df27705 | |
| | 991f5bf4ee | |
| | 701aaba191 | |
| | 3bab5b48bf | |
| | f3bccee3b5 | |
| | d84b0b0f5d | |
| | d383302e8a | |
| | 04f40def2f | |
| | c48b7bc0f5 | |
| | b09daf5ec1 | |
| | c90c0ecef4 | |
| | 1ab5fb7d2d | |
| | f769711c19 | |
| | edc5156693 | |
| | 9ec6506c32 | |
| | f387cc5ead | |
| | 569b68c43b | |
| | f0c40a6cd0 | |
| | 0cea9e6a6f | |
| | b1b3651e84 | |
| | 8f6bd51f58 | |
| | bddbf57104 | |
| | 9a16b0f9e5 | |
| | 3530309a31 | |
| | 733ebc067b | |
| | 6a8567ac14 | |
| | aabc546691 | |
| | 1c82b06f35 | |
| | 9e4109672a | |
| | 64c35334e6 | |
| | 0ce572b405 | |
| | a326ac4b28 | |
| | 05b0e77839 | |
| | 51f19470bc | |
| | 737672fb0b | |
| | 0941e294bf | |
| | 431d505f79 | |
| | f0dc7f3f06 | |
| | 99fed1f850 | |
| | 4dc5388a80 | |
| | f81f4c60b2 | |
| | c613d8b6b2 | |
| | 7adac1c09c | |
| | 6f05128368 | |
| | 9b178a28a3 | |
| | 4a6a7f4635 | |
| | 6b1a24d650 | |
| | 94ba3dd024 | |
| | f6eb4e5628 | |
| | 57bd907f83 | |
| | dd8e8d5ee8 | |
| | 1ca1aa0cdc | |
| | f2ba0c0300 | |
| | f5c1fcd3c3 | |
| | 5fdf670a19 | |
| | 3ce982d8ee | |
| | a515f9284e | |
| | cccf5e4a07 | |
| | b0bfb9c9a1 | |
| | 3aff61a973 | |
| | 0fd1ff4d9e | |
| | e2777bf73e | |
| | 77a16e6415 | |
| | 827942c8a9 | |
| | 604ff20541 | |
| | 25017219f5 | |
| | 2dd4ad0e06 | |
| | 61dc117da7 | |

4

.github/ISSUE_TEMPLATE/bug_report.md

vendored


@@ -8,11 +8,13 @@ assignees: ''

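---

**Routine checks**

[//]: # (Delete the space inside each box and fill in an x)
+ [ ] I have confirmed that there is no similar issue at present
+ [ ] I have confirmed that I have upgraded to the latest version
+ [ ] I have read the project README in full, especially the FAQ section
+ [ ] I understand and am willing to follow up on this issue, assisting with testing and providing feedback
+ [ ] I understand and accept the above, and understand that the project maintainers have limited time; issues that do not follow the rules may be ignored or closed directly
+ [ ] I understand and accept the above, and understand that the project maintainers have limited time; **issues that do not follow the rules may be ignored or closed directly**

**Problem description**
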

3

.github/ISSUE_TEMPLATE/config.yml

vendored


@@ -6,6 +6,3 @@ contact_links:

  - name: Donate
    url: https://iamazing.cn/page/reward
    about: Buy the author a coffee to encourage continued development
  - name: Paid deployment or custom features
    url: https://openai.justsong.cn/
    about: Join the group, then contact the group owner


5

.github/ISSUE_TEMPLATE/feature_request.md

vendored


@@ -8,10 +8,13 @@ assignees: ''

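---

**Routine checks**

[//]: # (Delete the space inside each box and fill in an x)
+ [ ] I have confirmed that there is no similar issue at present
+ [ ] I have confirmed that I have upgraded to the latest version
+ [ ] I have read the project README in full and have confirmed that the current version cannot meet my needs
+ [ ] I understand and am willing to follow up on this issue, assisting with testing and providing feedback
+ [ ] I understand and accept the above, and understand that the project maintainers have limited time; issues that do not follow the rules may be ignored or closed directly
+ [ ] I understand and accept the above, and understand that the project maintainers have limited time; **issues that do not follow the rules may be ignored or closed directly**

**Feature description**
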

10

.github/workflows/docker-image-amd64-en.yml

vendored


@@ -1,4 +1,4 @@

|

||||

name: Publish Docker image (amd64)

|

||||

name: Publish Docker image (amd64, English)

|

||||

|

||||

on:

|

||||

push:

|

||||

@@ -33,20 +33,12 @@ jobs:

|

||||

username: ${{ secrets.DOCKERHUB_USERNAME }}

|

||||

password: ${{ secrets.DOCKERHUB_TOKEN }}

|

||||

|

||||

- name: Log in to the Container registry

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

registry: ghcr.io

|

||||

username: ${{ github.actor }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

|

||||

- name: Extract metadata (tags, labels) for Docker

|

||||

id: meta

|

||||

uses: docker/metadata-action@v4

|

||||

with:

|

||||

images: |

|

||||

justsong/one-api-en

|

||||

ghcr.io/one-api-en

|

||||

|

||||

- name: Build and push Docker images

|

||||

uses: docker/build-push-action@v3

|

||||

|

||||

294

README.en.md

Normal file


@@ -0,0 +1,294 @@

|

||||

<p align="right">

|

||||

<a href="./README.md">中文</a> | <strong>English</strong>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

</p>

|

||||

|

||||

<div align="center">

|

||||

|

||||

# One API

|

||||

|

||||

_✨ Access all LLMs through the standard OpenAI API format, easy to deploy & use ✨_

|

||||

|

||||

</div>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://raw.githubusercontent.com/songquanpeng/one-api/main/LICENSE">

|

||||

<img src="https://img.shields.io/github/license/songquanpeng/one-api?color=brightgreen" alt="license">

|

||||

</a>

|

||||

<a href="https://github.com/songquanpeng/one-api/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/songquanpeng/one-api?color=brightgreen&include_prereleases" alt="release">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/repository/docker/justsong/one-api">

|

||||

<img src="https://img.shields.io/docker/pulls/justsong/one-api?color=brightgreen" alt="docker pull">

|

||||

</a>

|

||||

<a href="https://github.com/songquanpeng/one-api/releases/latest">

|

||||

<img src="https://img.shields.io/github/downloads/songquanpeng/one-api/total?color=brightgreen&include_prereleases" alt="release">

|

||||

</a>

|

||||

<a href="https://goreportcard.com/report/github.com/songquanpeng/one-api">

|

||||

<img src="https://goreportcard.com/badge/github.com/songquanpeng/one-api" alt="GoReportCard">

|

||||

</a>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

<a href="#deployment">Deployment Tutorial</a>

|

||||

·

|

||||

<a href="#usage">Usage</a>

|

||||

·

|

||||

<a href="https://github.com/songquanpeng/one-api/issues">Feedback</a>

|

||||

·

|

||||

<a href="#screenshots">Screenshots</a>

|

||||

·

|

||||

<a href="https://openai.justsong.cn/">Live Demo</a>

|

||||

·

|

||||

<a href="#faq">FAQ</a>

|

||||

·

|

||||

<a href="#related-projects">Related Projects</a>

|

||||

·

|

||||

<a href="https://iamazing.cn/page/reward">Donate</a>

|

||||

</p>

|

||||

|

||||

> **Warning**: This README is translated by ChatGPT. Please feel free to submit a PR if you find any translation errors.

|

||||

|

||||

> **Warning**: The Docker image for the English version is `justsong/one-api-en`.

|

||||

|

||||

> **Note**: The latest image pulled from Docker may be an `alpha` release. Specify the version manually if you require stability.

|

||||

|

||||

## Features

|

||||

1. Supports multiple API access channels:

|

||||

+ [x] Official OpenAI channel (support proxy configuration)

|

||||

+ [x] **Azure OpenAI API**

|

||||

+ [x] [API Distribute](https://api.gptjk.top/register?aff=QGxj)

|

||||

+ [x] [OpenAI-SB](https://openai-sb.com)

|

||||

+ [x] [API2D](https://api2d.com/r/197971)

|

||||

+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)

|

||||

+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (invitation code: `OneAPI`)

|

||||

+ [x] Custom channel: Various third-party proxy services not included in the list

|

||||

2. Supports access to multiple channels through **load balancing**.

|

||||

3. Supports **stream mode** that enables typewriter-like effect through stream transmission.

|

||||

4. Supports **multi-machine deployment**. [See here](#multi-machine-deployment) for more details.

|

||||

5. Supports **token management** that allows setting token expiration time and usage count.

|

||||

6. Supports **voucher management** that enables batch generation and export of vouchers. Vouchers can be used for account balance replenishment.

|

||||

7. Supports **channel management** that allows bulk creation of channels.

|

||||

8. Supports **user grouping** and **channel grouping** for setting different rates for different groups.

|

||||

9. Supports channel **model list configuration**.

|

||||

10. Supports **quota details checking**.

|

||||

11. Supports **user invite rewards**.

|

||||

12. Allows display of balance in USD.

|

||||

13. Supports announcement publishing, recharge link setting, and initial balance setting for new users.

|

||||

14. Offers rich **customization** options:

|

||||

1. Supports customization of system name, logo, and footer.

|

||||

2. Supports customization of homepage and about page using HTML & Markdown code, or embedding a standalone webpage through iframe.

|

||||

15. Supports management API access through system access tokens.

|

||||

16. Supports Cloudflare Turnstile user verification.

|

||||

17. Supports user management and multiple user login/registration methods:

|

||||

+ Email login/registration and password reset via email.

|

||||

+ [GitHub OAuth](https://github.com/settings/applications/new).

|

||||

+ WeChat Official Account authorization (requires additional deployment of [WeChat Server](https://github.com/songquanpeng/wechat-server)).

|

||||

18. Immediate support and encapsulation of other major model APIs as they become available.

|

||||

|

||||

## Deployment

|

||||

### Docker Deployment

|

||||

Deployment command: `docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api-en`

|

||||

|

||||

Update command: `docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower -cR`

|

||||

|

||||

The first `3000` in `-p 3000:3000` is the port of the host, which can be modified as needed.

|

||||

|

||||

Data will be saved in the `/home/ubuntu/data/one-api` directory on the host. Ensure that the directory exists and has write permissions, or change it to a suitable directory.

|

||||

|

||||

Nginx reference configuration:

|

||||

```

|

||||

server{

|

||||

server_name openai.justsong.cn; # Modify your domain name accordingly

|

||||

|

||||

location / {

|

||||

client_max_body_size 64m;

|

||||

proxy_http_version 1.1;

|

||||

proxy_pass http://localhost:3000; # Modify your port accordingly

|

||||

proxy_set_header Host $host;

|

||||

proxy_set_header X-Forwarded-For $remote_addr;

|

||||

proxy_cache_bypass $http_upgrade;

|

||||

proxy_set_header Accept-Encoding gzip;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Next, configure HTTPS with Let's Encrypt certbot:

|

||||

```bash

|

||||

# Install certbot on Ubuntu:

|

||||

sudo snap install --classic certbot

|

||||

sudo ln -s /snap/bin/certbot /usr/bin/certbot

|

||||

# Generate certificates & modify Nginx configuration

|

||||

sudo certbot --nginx

|

||||

# Follow the prompts

|

||||

# Restart Nginx

|

||||

sudo service nginx restart

|

||||

```

|

||||

|

||||

The initial account username is `root` and password is `123456`.

|

||||

|

||||

### Manual Deployment

|

||||

1. Download the executable file from [GitHub Releases](https://github.com/songquanpeng/one-api/releases/latest) or compile from source:

|

||||

```shell

|

||||

git clone https://github.com/songquanpeng/one-api.git

|

||||

|

||||

# Build the frontend

|

||||

cd one-api/web

|

||||

npm install

|

||||

npm run build

|

||||

|

||||

# Build the backend

|

||||

cd ..

|

||||

go mod download

|

||||

go build -ldflags "-s -w" -o one-api

|

||||

```

|

||||

2. Run:

|

||||

```shell

|

||||

chmod u+x one-api

|

||||

./one-api --port 3000 --log-dir ./logs

|

||||

```

|

||||

3. Access [http://localhost:3000/](http://localhost:3000/) and log in. The initial account username is `root` and password is `123456`.

|

||||

|

||||

For more detailed deployment tutorials, please refer to [this page](https://iamazing.cn/page/how-to-deploy-a-website).

|

||||

|

||||

### Multi-machine Deployment

|

||||

1. Set the same `SESSION_SECRET` for all servers.

|

||||

2. Set `SQL_DSN` and use MySQL instead of SQLite. All servers should connect to the same database.

|

||||

3. Set the `NODE_TYPE` for all non-master nodes to `slave`.

|

||||

4. Set `SYNC_FREQUENCY` for servers to periodically sync configurations from the database.

|

||||

5. Non-master nodes can optionally set `FRONTEND_BASE_URL` to redirect page requests to the master server.

|

||||

6. Install Redis on each non-master node and configure `REDIS_CONN_STRING`, so that the database is not accessed at all while the cache has not expired, which reduces latency.

|

||||

7. If the main server also has high latency accessing the database, Redis must be enabled and `SYNC_FREQUENCY` must be set to periodically sync configurations from the database.
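
The checklist above can be turned into a quick pre-flight check. The following is a minimal sketch, not part of One API itself: the function name `check_slave_env` and the wording of the messages are hypothetical, and it only looks at whether the variables named in the list are present in a non-master node's environment.

```python
from typing import List, Mapping


def check_slave_env(env: Mapping[str, str]) -> List[str]:
    """Return a list of problems found in a non-master node's environment.

    Hypothetical helper: it only mirrors the checklist in the README and
    does not contact the database or Redis.
    """
    problems = []
    if not env.get("SESSION_SECRET"):
        problems.append("SESSION_SECRET is not set (must match the other servers)")
    if not env.get("SQL_DSN"):
        problems.append("SQL_DSN is not set (all servers must share one MySQL database)")
    if env.get("NODE_TYPE") != "slave":
        problems.append("NODE_TYPE must be 'slave' on non-master nodes")
    if not env.get("SYNC_FREQUENCY"):
        problems.append("SYNC_FREQUENCY is not set (configuration will not be re-synced)")
    if not env.get("REDIS_CONN_STRING"):
        problems.append("REDIS_CONN_STRING is not set (no Redis cache in front of the database)")
    return problems


if __name__ == "__main__":
    import os

    for problem in check_slave_env(os.environ):
        print("warning:", problem)
```

Running it on a node before starting One API prints one warning per missing setting; an empty result means the checklist items are at least present.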

|

||||

|

||||

Please refer to the [environment variables](#environment-variables) section for details on using environment variables.

|

||||

|

||||

### Deployment on Control Panels (e.g., Baota)

|

||||

Refer to [#175](https://github.com/songquanpeng/one-api/issues/175) for detailed instructions.

|

||||

|

||||

If you encounter a blank page after deployment, refer to [#97](https://github.com/songquanpeng/one-api/issues/97) for possible solutions.

|

||||

|

||||

### Deployment on Third-Party Platforms

|

||||

<details>

|

||||

<summary><strong>Deploy on Sealos</strong></summary>

|

||||

<div>

|

||||

|

||||

Please refer to [this tutorial](https://github.com/c121914yu/FastGPT/blob/main/docs/deploy/one-api/sealos.md).

|

||||

|

||||

</div>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary><strong>Deployment on Zeabur</strong></summary>

|

||||

<div>

|

||||

|

||||

> Zeabur's servers are located overseas, automatically solving network issues, and the free quota is sufficient for personal usage.

|

||||

|

||||

1. First, fork the code.

|

||||

2. Go to [Zeabur](https://zeabur.com/), log in, and enter the console.

|

||||

3. Create a new project. In Service -> Add Service, select Marketplace, and choose MySQL. Note down the connection parameters (username, password, address, and port).

|

||||

4. Copy the connection parameters and run ```create database `one-api` ``` to create the database.

|

||||

5. Then, in Service -> Add Service, select Git (authorization is required for the first use) and choose your forked repository.

|

||||

6. Automatic deployment will start, but please cancel it for now. Go to the Variable tab, add a `PORT` with a value of `3000`, and then add a `SQL_DSN` with a value of `<username>:<password>@tcp(<addr>:<port>)/one-api`. Save the changes. Please note that if `SQL_DSN` is not set, data will not be persisted, and the data will be lost after redeployment.

|

||||

7. Select Redeploy.

|

||||

8. In the Domains tab, select a suitable domain name prefix, such as "my-one-api". The final domain name will be "my-one-api.zeabur.app". You can also CNAME your own domain name.

|

||||

9. Wait for the deployment to complete, and click on the generated domain name to access One API.

|

||||

|

||||

</div>

|

||||

</details>

|

||||

|

||||

## Configuration

|

||||

The system is ready to use out of the box.

|

||||

|

||||

You can configure it by setting environment variables or command line parameters.

|

||||

|

||||

After the system starts, log in as the `root` user to further configure the system.

|

||||

|

||||

## Usage

|

||||

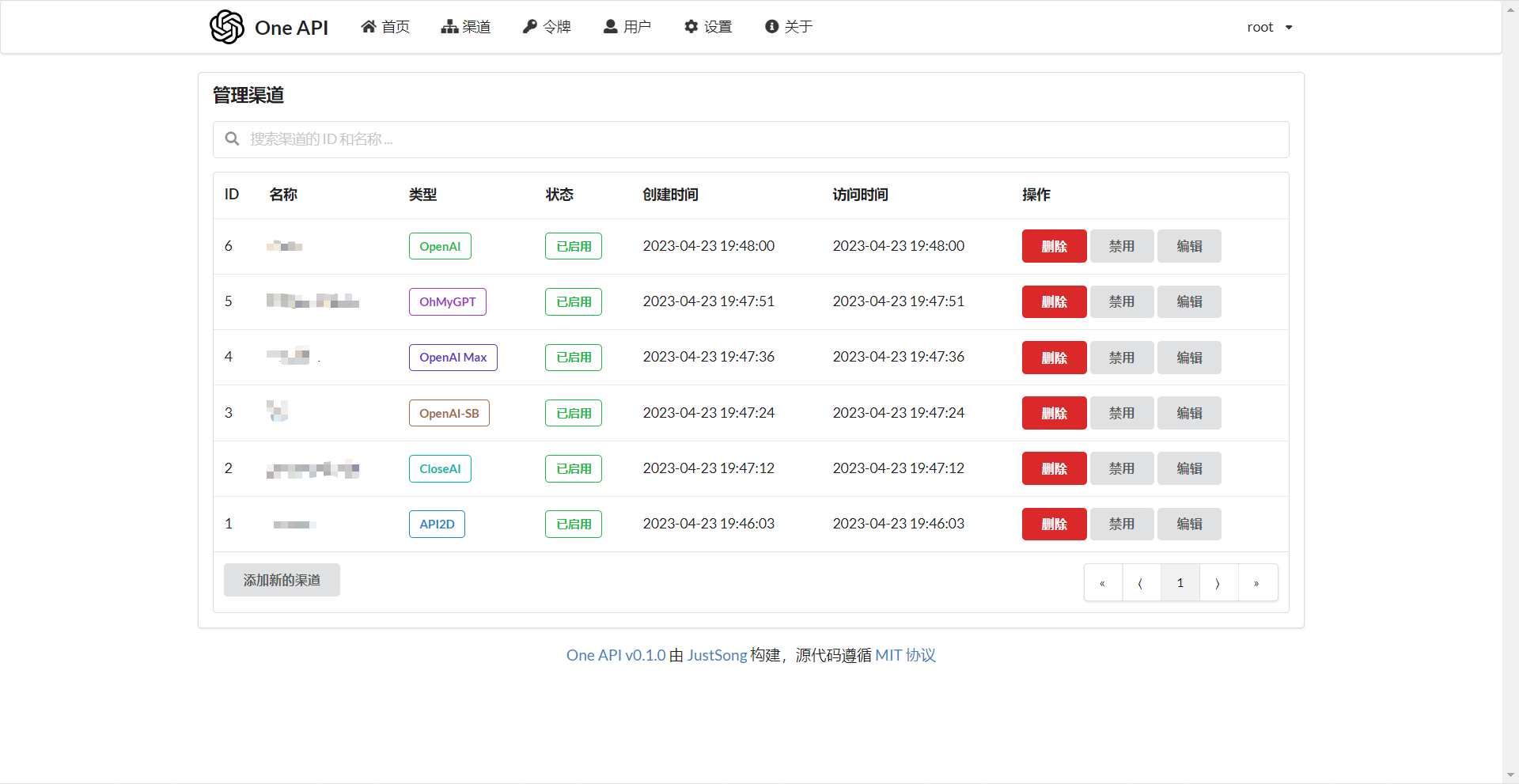

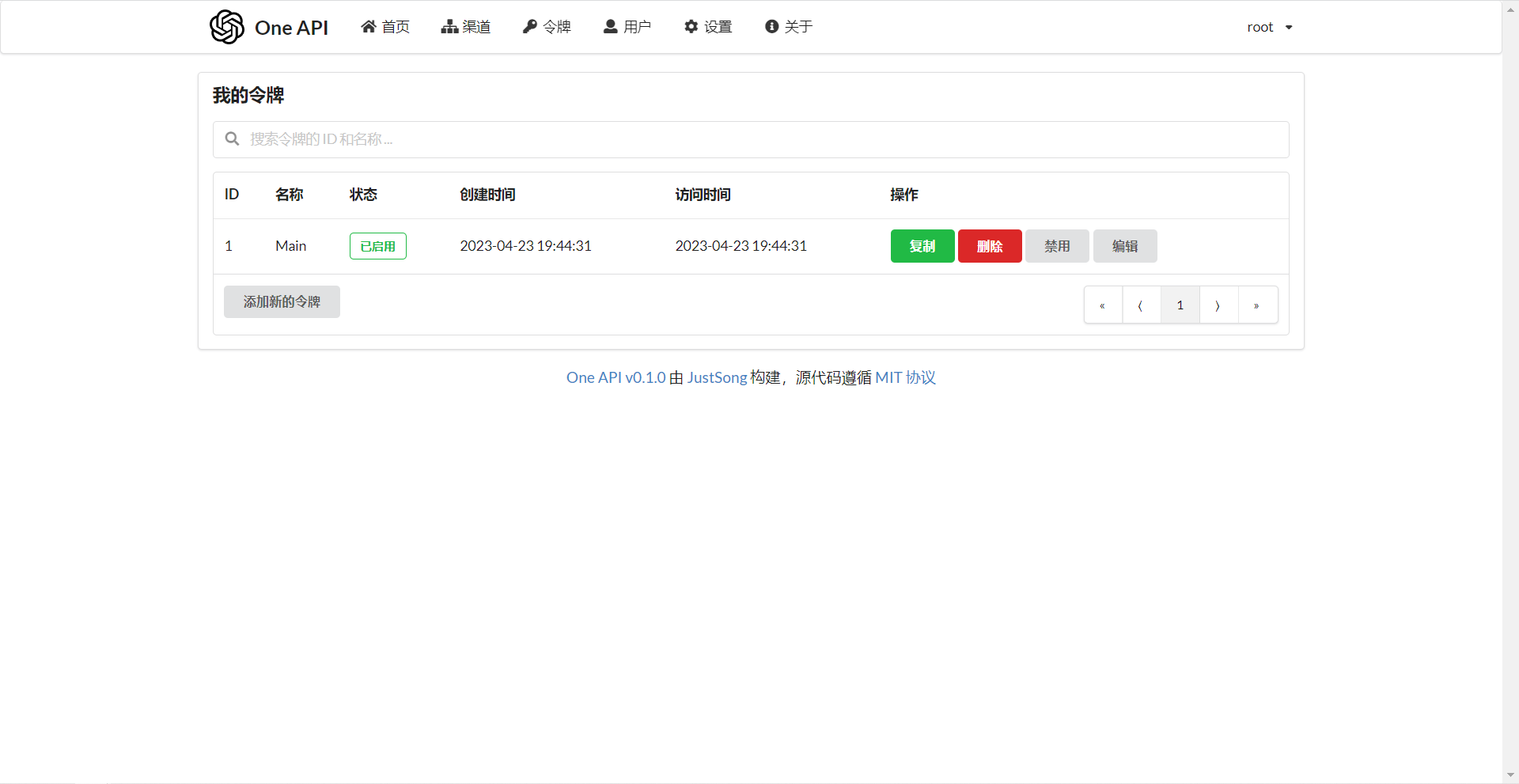

Add your API Key on the `Channels` page, and then add an access token on the `Tokens` page.

|

||||

|

||||

You can then use your access token to access One API. The usage is consistent with the [OpenAI API](https://platform.openai.com/docs/api-reference/introduction).

|

||||

|

||||

In places where the OpenAI API is used, remember to set the API Base to your One API deployment address, for example: `https://openai.justsong.cn`. The API Key should be the token generated in One API.

|

||||

|

||||

Note that the specific API Base format depends on the client you are using.
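
As a minimal sketch of the setup described above, the following Python snippet builds (but does not send) a chat completion request against a One API deployment. It assumes the standard OpenAI path `/v1/chat/completions`; the helper name `build_chat_request` and the placeholder token are hypothetical.

```python
import json
import urllib.request


def build_chat_request(api_base: str, token: str, prompt: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build a chat completion request for a One API deployment.

    api_base is the deployment address without a trailing path; token is an
    access token created on the Tokens page.
    """
    url = api_base.rstrip("/") + "/v1/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("https://openai.justsong.cn", "your-one-api-token", "hi")
    print(req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Clients that take an "API Base" setting may expect it with or without the `/v1` suffix, which is why the base and the path are kept separate here.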

|

||||

|

||||

```mermaid

|

||||

graph LR

|

||||

A(User)

|

||||

A --->|Request| B(One API)

|

||||

B -->|Relay Request| C(OpenAI)

|

||||

B -->|Relay Request| D(Azure)

|

||||

B -->|Relay Request| E(Other downstream channels)

|

||||

```

|

||||

|

||||

To specify which channel to use for the current request, you can add the channel ID after the token, for example: `Authorization: Bearer ONE_API_KEY-CHANNEL_ID`.

|

||||

Note that a channel ID can only be specified with a token created by an administrator.

|

||||

|

||||

If the channel ID is not provided, load balancing will be used to distribute the requests to multiple channels.
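
The header format can be sketched as a small helper. This is an illustration only: the function name `authorization_header` is hypothetical, and it simply follows the `ONE_API_KEY-CHANNEL_ID` pattern shown above.

```python
from typing import Optional


def authorization_header(token: str, channel_id: Optional[int] = None) -> str:
    """Return the Authorization header value for a One API request.

    With channel_id set, the request is pinned to that channel; without it,
    One API chooses a channel through load balancing.
    """
    if channel_id is None:
        return f"Bearer {token}"
    return f"Bearer {token}-{channel_id}"
```

For example, `authorization_header("ONE_API_KEY", 3)` yields `Bearer ONE_API_KEY-3`, while omitting the channel ID leaves the choice to the load balancer.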

|

||||

|

||||

### Environment Variables

|

||||

1. `REDIS_CONN_STRING`: When set, Redis will be used as the storage for request rate limiting instead of memory.

|

||||

+ Example: `REDIS_CONN_STRING=redis://default:redispw@localhost:49153`

|

||||

2. `SESSION_SECRET`: When set, a fixed session key will be used to ensure that cookies of logged-in users are still valid after the system restarts.

|

||||

+ Example: `SESSION_SECRET=random_string`

|

||||

3. `SQL_DSN`: When set, the specified database will be used instead of SQLite. Please use MySQL version 8.0.

|

||||

+ Example: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

|

||||

4. `FRONTEND_BASE_URL`: When set, the specified frontend address will be used instead of the backend address.

|

||||

+ Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`

|

||||

5. `SYNC_FREQUENCY`: When set, the system will periodically sync configurations from the database, with the unit in seconds. If not set, no sync will happen.

|

||||

+ Example: `SYNC_FREQUENCY=60`

|

||||

6. `NODE_TYPE`: When set, specifies the node type. Valid values are `master` and `slave`. If not set, it defaults to `master`.

|

||||

+ Example: `NODE_TYPE=slave`

|

||||

7. `CHANNEL_UPDATE_FREQUENCY`: When set, it periodically updates the channel balances, with the unit in minutes. If not set, no update will happen.

|

||||

+ Example: `CHANNEL_UPDATE_FREQUENCY=1440`

|

||||

8. `CHANNEL_TEST_FREQUENCY`: When set, it periodically tests the channels, with the unit in minutes. If not set, no test will happen.

|

||||

+ Example: `CHANNEL_TEST_FREQUENCY=1440`

|

||||

9. `POLLING_INTERVAL`: The time interval (in seconds) between requests when updating channel balances and testing channel availability. Default is no interval.

|

||||

+ Example: `POLLING_INTERVAL=5`
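
To make the documented defaults concrete, here is a minimal Python sketch of how these variables could be interpreted. It is not One API's own code (the project is written in Go); the `Settings` class and `load_settings` function are hypothetical names, and only a few of the variables are covered.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class Settings:
    is_master: bool                   # NODE_TYPE: anything other than "slave" means master
    sql_dsn: Optional[str]            # None means the default SQLite database is used
    sync_frequency_s: Optional[int]   # None means no periodic sync
    polling_interval_s: int           # 0 means no interval between requests


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Interpret a subset of the environment variables listed above."""
    sync = env.get("SYNC_FREQUENCY")
    return Settings(
        is_master=env.get("NODE_TYPE") != "slave",
        sql_dsn=env.get("SQL_DSN") or None,
        sync_frequency_s=int(sync) if sync else None,
        polling_interval_s=int(env.get("POLLING_INTERVAL") or 0),
    )


if __name__ == "__main__":
    print(load_settings())
```

Passing a plain dictionary instead of `os.environ` makes it easy to check how a planned configuration would be read before deploying it.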

|

||||

|

||||

### Command Line Parameters

|

||||

1. `--port <port_number>`: Specifies the port number on which the server listens. Defaults to `3000`.

|

||||

+ Example: `--port 3000`

|

||||

2. `--log-dir <log_dir>`: Specifies the log directory. If not set, the logs will not be saved.

|

||||

+ Example: `--log-dir ./logs`

|

||||

3. `--version`: Prints the system version number and exits.

|

||||

4. `--help`: Displays the command usage help and parameter descriptions.

|

||||

|

||||

## Screenshots

|

||||

|

||||

|

||||

|

||||

## FAQ

|

||||

1. What is quota? How is it calculated? Does One API have quota calculation issues?

|

||||

+ Quota = Group multiplier * Model multiplier * (number of prompt tokens + number of completion tokens * completion multiplier)

|

||||

+ The completion multiplier is fixed at 1.33 for GPT3.5 and 2 for GPT4, consistent with the official definition.

|

||||

+ In non-stream mode, the official API returns the total number of tokens consumed. However, please note that the consumption multipliers for prompts and completions are different.

|

||||

2. Why does it prompt "insufficient quota" even though my account balance is sufficient?

|

||||

+ Please check if your token quota is sufficient. It is separate from the account balance.

|

||||

+ The token quota is used to set the maximum usage and can be freely set by the user.

|

||||

3. It says "No available channels" when trying to use a channel. What should I do?

|

||||

+ Please check the user and channel group settings.

|

||||

+ Also check the channel model settings.

|

||||

4. Channel testing reports an error: "invalid character '<' looking for beginning of value"

|

||||

+ This error occurs when the returned value is not valid JSON but an HTML page.

|

||||

+ Most likely, the IP of your deployment site or the node of the proxy has been blocked by CloudFlare.

|

||||

5. ChatGPT Next Web reports an error: "Failed to fetch"

|

||||

+ Do not set `BASE_URL` during deployment.

|

||||

+ Double-check that your interface address and API Key are correct.
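
The quota formula from FAQ item 1 can be worked through in a few lines. This is a sketch under stated assumptions: the function name `quota` is hypothetical, the group and model multipliers of 1 are placeholders rather than real configured values, and any rounding the actual implementation applies is ignored.

```python
def quota(prompt_tokens: int, completion_tokens: int,
          group_multiplier: float, model_multiplier: float,
          completion_multiplier: float) -> float:
    """Quota = group multiplier * model multiplier *
    (prompt tokens + completion tokens * completion multiplier)."""
    return group_multiplier * model_multiplier * (
        prompt_tokens + completion_tokens * completion_multiplier
    )


if __name__ == "__main__":
    # GPT-4 style request: completion multiplier 2, placeholder multipliers of 1.
    print(quota(100, 50, 1, 1, 2))  # → 200
```

With 100 prompt tokens and 50 completion tokens, the completion side counts double, so the request consumes 100 + 50 * 2 = 200 units before the group and model multipliers are applied.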

|

||||

|

||||

## Related Projects

|

||||

[FastGPT](https://github.com/c121914yu/FastGPT): Build an AI knowledge base in three minutes

|

||||

|

||||

## Note

|

||||

This project is an open-source project. Please use it in compliance with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**. It must not be used for illegal purposes.

|

||||

|

||||

This project is released under the MIT license. Based on this, attribution and a link to this project must be included at the bottom of the page.

|

||||

|

||||

The same applies to derivative projects based on this project.

|

||||

|

||||

If you do not wish to include attribution, prior authorization must be obtained.

|

||||

|

||||

According to the MIT license, users should bear the risk and responsibility of using this project, and the developer of this open-source project is not responsible for this.

|

||||

97

README.md


@@ -1,3 +1,8 @@

|

||||

<p align="right">

|

||||

<strong>中文</strong> | <a href="./README.en.md">English</a>

|

||||

</p>

|

||||

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

</p>

|

||||

@@ -6,7 +11,7 @@

|

||||

|

||||

# One API

|

||||

|

||||

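_✨ All-in-one OpenAI interface that integrates various API access methods, ready to use out of the box ✨_
_✨ Access all large models through the standard OpenAI API format, ready to use out of the box ✨_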

|

||||

|

||||

</div>

|

||||

|

||||

@@ -46,50 +51,60 @@ _✨ All in one 的 OpenAI 接口,整合各种 API 访问方式,开箱即用

|

||||

<a href="https://iamazing.cn/page/reward">Donate</a>

|

||||

</p>

|

||||

|

||||

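> **Note**: This project is open source. Users must comply with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**, and must not use it for illegal purposes.

> **Note**: The latest image pulled with Docker may be an `alpha` release. Specify the version manually if you need stability.

> **Warning**: Upgrading from `v0.3` to `v0.4` requires a manual database migration. Run the [database migration script](./bin/migration_v0.3-v0.4.sql) manually.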

|

||||

|

||||

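## Features
1. Supports multiple API access channels; PRs or issues adding more channels are welcome:
+ [x] Official OpenAI channel (supports proxy configuration)
+ [x] **Azure OpenAI API**
1. Supports multiple large models:
+ [x] [OpenAI ChatGPT series models](https://platform.openai.com/docs/guides/gpt/chat-completions-api) (supports [Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference))
+ [x] [Anthropic Claude series models](https://anthropic.com)
+ [x] [Google PaLM2 series models](https://developers.generativeai.google)
+ [x] [Baidu Wenxin Yiyan series models](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)
+ [x] [Zhipu ChatGLM series models](https://bigmodel.cn)
2. Supports configuring mirrors and many third-party proxy services:
+ [x] [API Distribute](https://api.gptjk.top/register?aff=QGxj)
+ [x] [OpenAI-SB](https://openai-sb.com)
+ [x] [API2D](https://api2d.com/r/197971)
+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)
+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (invitation code: `OneAPI`)
+ [x] [API2GPT](http://console.api2gpt.com/m/00002S)
+ [x] [CloseAI](https://console.closeai-asia.com/r/2412)
+ [x] [AI.LS](https://ai.ls)
+ [x] [OpenAI Max](https://openaimax.com)
+ [x] Custom channel: for example, third-party proxy services not listed here
2. Supports accessing multiple channels through **load balancing**.
3. Supports **stream mode**, enabling a typewriter effect through streaming.
4. Supports **multi-machine deployment**; [see here](#多机部署).
5. Supports **token management**: set a token's expiration time and usage count.
6. Supports **voucher management**: batch generation and export of vouchers, which can be used to top up an account.
7. Supports **channel management** with batch channel creation.
8. Supports **user groups** and **channel groups**, with different rates for different groups.
9. Supports setting a **model list** per channel.
10. Supports **viewing quota details**.
11. Supports **user invitation rewards**.
12. Supports displaying quota in USD.
13. Supports publishing announcements, setting a top-up link, and setting the initial quota for new users.
14. Supports rich **customization** settings:
3. Supports accessing multiple channels through **load balancing**.
4. Supports **stream mode**, enabling a typewriter effect through streaming.
5. Supports **multi-machine deployment**; [see here](#多机部署).
6. Supports **token management**: set a token's expiration time and quota.
7. Supports **voucher management**: batch generation and export of vouchers, which can be used to top up an account.
8. Supports **channel management** with batch channel creation.
9. Supports **user groups** and **channel groups**, with different rates for different groups.
10. Supports setting a **model list** per channel.
11. Supports **viewing quota details**.
12. Supports **user invitation rewards**.
13. Supports displaying quota in USD.
14. Supports publishing announcements, setting a top-up link, and setting the initial quota for new users.
15. Supports model mapping, redirecting the model requested by the user.
16. Supports automatic retry on failure.
17. Supports the image generation API.
18. Supports rich **customization** settings:
1. Supports customizing the system name, logo, and footer.
2. Supports customizing the home page and about page, either with HTML & Markdown code or by embedding a standalone web page through an iframe.
15. Supports accessing the management API through system access tokens.
16. Supports Cloudflare Turnstile user verification.
17. Supports user management and **multiple user login/registration methods**:
19. Supports accessing the management API through system access tokens.
20. Supports Cloudflare Turnstile user verification.
21. Supports user management and **multiple user login/registration methods**:
+ Email login/registration and password reset via email.
+ [GitHub OAuth](https://github.com/settings/applications/new).
+ WeChat Official Account authorization (requires additionally deploying [WeChat Server](https://github.com/songquanpeng/wechat-server)).
18. When other large models open their APIs in the future, they will be supported promptly and wrapped in the same API access format.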

|

||||

|

||||

## Deployment
### Docker Deployment
Deployment command: `docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api`

If the image above cannot be pulled, try the GitHub container registry image by replacing `justsong/one-api` with `ghcr.io/songquanpeng/one-api`.

If you expect high concurrency, setting `SQL_DSN` is recommended; see the [Environment Variables](#环境变量) section below.

Update command: `docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower -cR`

The first `3000` in `-p 3000:3000` is the host port and can be changed as needed.

|

||||

@@ -109,6 +124,7 @@ server{

|

||||

proxy_set_header X-Forwarded-For $remote_addr;

|

||||

proxy_cache_bypass $http_upgrade;

|

||||

proxy_set_header Accept-Encoding gzip;

|

||||

proxy_read_timeout 300s; # GPT-4 needs a longer timeout; adjust as needed

|

||||

}

|

||||

}

|

||||

```

|

||||

@@ -154,8 +170,8 @@ sudo service nginx restart

|

||||

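### Multi-machine Deployment
1. Set the same `SESSION_SECRET` on all servers.
2. `SQL_DSN` must be set, using MySQL instead of SQLite; all servers connect to the same database.
3. All slave servers must set `NODE_TYPE` to `slave`.
4. Once `SYNC_FREQUENCY` is set, the server periodically syncs configuration from the database.
3. All slave servers must set `NODE_TYPE` to `slave`; if it is not set, the server defaults to master.
4. Once `SYNC_FREQUENCY` is set, the server periodically syncs configuration from the database. When using a remote database, setting this option and enabling Redis is recommended on both master and slave servers.
5. Slave servers can optionally set `FRONTEND_BASE_URL` to redirect page requests to the master server.
6. Install Redis on **each** slave server and set `REDIS_CONN_STRING`; this avoids any database access while the cache has not expired, which reduces latency.
7. If the master server also has high latency to the database, enable Redis there as well and set `SYNC_FREQUENCY` to periodically sync configuration from the database.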

|

||||

@@ -178,7 +194,7 @@ sudo service nginx restart

|

||||

docker run --name chat-next-web -d -p 3001:3000 yidadaa/chatgpt-next-web

|

||||

```

|

||||

|

||||

Remember to change the port number and `BASE_URL`.
Remember to change the port number, then set the interface address (for example: https://openai.justsong.cn/ ) and the API Key on the page.

|

||||

|

||||

#### ChatGPT Web

|

||||

Project homepage: https://github.com/Chanzhaoyu/chatgpt-web

|

||||

@@ -190,6 +206,17 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope

|

||||

Remember to change the port number, `OPENAI_API_BASE_URL`, and `OPENAI_API_KEY`.

### Deployment on Third-Party Platforms
<details>
<summary><strong>Deploy on Sealos</strong></summary>
<div>

> Sealos offers visual deployment that takes only 1 minute.

Follow steps 1 to 5 of this [tutorial](https://github.com/c121914yu/FastGPT/blob/main/docs/deploy/one-api/sealos.md).

</div>
</details>

<details>
<summary><strong>Deploy on Zeabur</strong></summary>
<div>

|

||||

@@ -216,6 +243,8 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope

|

||||

|

||||

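After the system starts, log in as the `root` user to configure it further.

**Note**: If you are unsure what a configuration option means, temporarily clear its value to see further hint text.

## Usage
Add your API Key on the `Channels` page, then add an access token on the `Tokens` page.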

|

||||

|

||||

@@ -246,7 +275,10 @@ graph LR

|

||||

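+ Example: `SESSION_SECRET=random_string`
3. `SQL_DSN`: When set, the specified database is used instead of SQLite. Please use MySQL 8.0.
+ Example: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`
4. `FRONTEND_BASE_URL`: When set, the specified frontend address is used instead of the backend address.
+ Note: create the `oneapi` database in advance. Tables do not need to be created manually; the program creates them automatically.
+ If using a local database: add `--network="host"` to the deployment command so that the program inside the container can reach MySQL on the host.
+ If using a cloud database: if the cloud server requires identity verification, add `?tls=skip-verify` to the connection parameters.
4. `FRONTEND_BASE_URL`: When set, page requests are redirected to the specified address. Set this on slave servers only.
+ Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`
5. `SYNC_FREQUENCY`: When set, configuration is periodically synced with the database, with the unit in seconds. If not set, no sync is performed.
+ Example: `SYNC_FREQUENCY=60`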

|

||||

@@ -256,7 +288,7 @@ graph LR

|

||||

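+ Example: `CHANNEL_UPDATE_FREQUENCY=1440`
8. `CHANNEL_TEST_FREQUENCY`: When set, channels are checked periodically, with the unit in minutes. If not set, no check is performed.
+ Example: `CHANNEL_TEST_FREQUENCY=1440`
9. `REQUEST_INTERVAL`: The interval between requests when batch-updating channel balances and testing availability, in seconds. Defaults to no interval.
9. `POLLING_INTERVAL`: The interval between requests when batch-updating channel balances and testing availability, in seconds. Defaults to no interval.
+ Example: `POLLING_INTERVAL=5`

### Command Line Parameters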

|

||||

@@ -293,13 +325,16 @@ https://openai.justsong.cn

|

||||

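5. ChatGPT Next Web reports an error: `Failed to fetch`
+ Do not set `BASE_URL` when deploying.
+ Check that your interface address and API Key are filled in correctly.
6. Error: `当前分组负载已饱和,请稍后再试` ("The current group's load is saturated, please try again later")
+ The upstream channel returned HTTP 429.

## Related Projects
[FastGPT](https://github.com/c121914yu/FastGPT): Build an AI knowledge base in three minutes

## Note
This project is open source. Please use it in compliance with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**; it must not be used for illegal purposes.

This project is open-sourced under the MIT license; please retain One API's copyright information in some form.
This project is open-sourced under the MIT license. **On top of that**, attribution and a link to this project must be kept at the bottom of the page. If you do not want to keep the attribution, you must obtain authorization first.

This also applies to derivative projects based on this project.

According to the MIT license, users bear the risks and responsibility of using this project themselves; the developers of this open-source project are not liable.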

|

||||

@@ -1,25 +1,29 @@

|

||||

#!/bin/bash

|

||||

|

||||

if [ $# -ne 3 ]; then

|

||||

echo "Usage: time_test.sh <domain> <key> <count>"

|

||||

if [ $# -lt 3 ]; then

|

||||

echo "Usage: time_test.sh <domain> <key> <count> [<model>]"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

domain=$1

|

||||

key=$2

|

||||

count=$3

|

||||

model=${4:-"gpt-3.5-turbo"} # Default model is gpt-3.5-turbo

|

||||

|

||||

total_time=0

|

||||

times=()

|

||||

|

||||

for ((i=1; i<=count; i++)); do

|

||||

result=$(curl -o /dev/null -s -w %{time_total}\\n \

|

||||

result=$(curl -o /dev/null -s -w "%{http_code} %{time_total}\\n" \

|

||||

https://"$domain"/v1/chat/completions \

|

||||

-H "Content-Type: application/json" \

|

||||

-H "Authorization: Bearer $key" \

|

||||

-d '{"messages": [{"content": "echo hi", "role": "user"}], "model": "gpt-3.5-turbo", "stream": false, "max_tokens": 1}')

|

||||

echo "$result"

|

||||

total_time=$(bc <<< "$total_time + $result")

|

||||

times+=("$result")

|

||||

-d '{"messages": [{"content": "echo hi", "role": "user"}], "model": "'"$model"'", "stream": false, "max_tokens": 1}')

|

||||

http_code=$(echo "$result" | awk '{print $1}')

|

||||

time=$(echo "$result" | awk '{print $2}')

|

||||

echo "HTTP status code: $http_code, Time taken: $time"

|

||||

total_time=$(bc <<< "$total_time + $time")

|

||||

times+=("$time")

|

||||

done

|

||||

|

||||

average_time=$(echo "scale=4; $total_time / $count" | bc)

|

||||

|

||||
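
The script above asks curl to print `%{http_code} %{time_total}` for each request, splits the two fields, and averages the timings. The same parsing and averaging can be sketched in Go as follows. The `sample` type and both function names are hypothetical and exist only for this illustration.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// sample holds one parsed measurement line (hypothetical type).
type sample struct {
	StatusCode int
	Seconds    float64
}

// parseSample parses a line such as "200 0.532" into its two fields.
func parseSample(line string) (sample, error) {
	fields := strings.Fields(line)
	if len(fields) != 2 {
		return sample{}, fmt.Errorf("expected 2 fields, got %d", len(fields))
	}
	code, err := strconv.Atoi(fields[0])
	if err != nil {
		return sample{}, err
	}
	secs, err := strconv.ParseFloat(fields[1], 64)
	if err != nil {
		return sample{}, err
	}
	return sample{StatusCode: code, Seconds: secs}, nil
}

// averageSeconds returns the mean duration, or 0 for an empty slice.
func averageSeconds(samples []sample) float64 {
	if len(samples) == 0 {
		return 0
	}
	total := 0.0
	for _, s := range samples {
		total += s.Seconds
	}
	return total / float64(len(samples))
}

func main() {
	var samples []sample
	for _, line := range []string{"200 0.50", "200 1.50"} {
		s, err := parseSample(line)
		if err != nil {
			fmt.Println("skipping line:", err)
			continue
		}
		samples = append(samples, s)
	}
	fmt.Printf("average: %.4f\n", averageSeconds(samples))
}
```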

@@ -67,12 +67,14 @@ var ChannelDisableThreshold = 5.0

|

||||

var AutomaticDisableChannelEnabled = false

|

||||

var QuotaRemindThreshold = 1000

|

||||

var PreConsumedQuota = 500

|

||||

var ApproximateTokenEnabled = false

|

||||

var RetryTimes = 0

|

||||

|

||||

var RootUserEmail = ""

|

||||

|

||||

var IsMasterNode = os.Getenv("NODE_TYPE") != "slave"

|

||||

|

||||

var requestInterval, _ = strconv.Atoi(os.Getenv("REQUEST_INTERVAL"))

|

||||

var requestInterval, _ = strconv.Atoi(os.Getenv("POLLING_INTERVAL"))

|

||||

var RequestInterval = time.Duration(requestInterval) * time.Second

|

||||

|

||||

const (

|

||||

@@ -148,20 +150,28 @@ const (

|

||||

ChannelTypeAIProxy = 10

|

||||

ChannelTypePaLM = 11

|

||||

ChannelTypeAPI2GPT = 12

|

||||

ChannelTypeAIGC2D = 13

|

||||

ChannelTypeAnthropic = 14

|

||||

ChannelTypeBaidu = 15

|

||||

ChannelTypeZhipu = 16

|

||||

)

|

||||

|

||||

var ChannelBaseURLs = []string{

|

||||

"", // 0

|

||||

"https://api.openai.com", // 1

|

||||

"https://oa.api2d.net", // 2

|

||||

"", // 3

|

||||

"https://api.openai-proxy.org", // 4

|

||||

"https://api.openai-sb.com", // 5

|

||||

"https://api.openaimax.com", // 6

|

||||

"https://api.ohmygpt.com", // 7

|

||||

"", // 8

|

||||

"https://api.caipacity.com", // 9

|

||||

"https://api.aiproxy.io", // 10

|

||||

"", // 11

|

||||

"https://api.api2gpt.com", // 12

|

||||

"", // 0

|

||||

"https://api.openai.com", // 1

|

||||

"https://oa.api2d.net", // 2

|

||||

"", // 3

|

||||

"https://api.closeai-proxy.xyz", // 4

|

||||

"https://api.openai-sb.com", // 5

|

||||

"https://api.openaimax.com", // 6

|

||||

"https://api.ohmygpt.com", // 7

|

||||

"", // 8

|

||||

"https://api.caipacity.com", // 9

|

||||

"https://api.aiproxy.io", // 10

|

||||

"", // 11

|

||||

"https://api.api2gpt.com", // 12

|

||||

"https://api.aigc2d.com", // 13

|

||||

"https://api.anthropic.com", // 14

|

||||

"https://aip.baidubce.com", // 15

|

||||

"https://open.bigmodel.cn", // 16

|

||||

}

|

||||
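
`ChannelBaseURLs` is indexed by the numeric channel type, so every new `ChannelType…` constant needs a matching entry at the same position. A minimal sketch of a lookup that prefers a per-channel override and guards against an out-of-range type is shown below. The table is an illustrative subset and `resolveBaseURL` is a hypothetical helper, not code from this change.

```go
package main

import "fmt"

// channelBaseURLs is an illustrative subset of the table in the diff;
// the slice index is the channel type.
var channelBaseURLs = []string{
	"",                       // 0
	"https://api.openai.com", // 1
	"https://oa.api2d.net",   // 2
}

// resolveBaseURL (hypothetical) returns the channel's own base URL when
// one is configured, otherwise the default for its type. Unknown types
// yield an empty string instead of an index-out-of-range panic.
func resolveBaseURL(channelType int, custom string) string {
	if custom != "" {
		return custom
	}
	if channelType < 0 || channelType >= len(channelBaseURLs) {
		return ""
	}
	return channelBaseURLs[channelType]
}

func main() {
	fmt.Println(resolveBaseURL(1, ""))
	fmt.Println(resolveBaseURL(1, "https://proxy.example.com"))
}
```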

|

||||

@@ -4,9 +4,11 @@ import "encoding/json"

|

||||

|

||||

// ModelRatio

|

||||

// https://platform.openai.com/docs/models/model-endpoint-compatibility

|

||||

// https://cloud.baidu.com/doc/WENXINWORKSHOP/s/Blfmc9dlf

|

||||

// https://openai.com/pricing

|

||||

// TODO: when a new api is enabled, check the pricing here

|

||||

// 1 === $0.002 / 1K tokens

|

||||

// 1 === ¥0.014 / 1k tokens

|

||||

var ModelRatio = map[string]float64{

|

||||

"gpt-4": 15,

|

||||

"gpt-4-0314": 15,

|

||||

@@ -31,10 +33,19 @@ var ModelRatio = map[string]float64{

|

||||

"curie": 10,

|

||||

"babbage": 10,

|

||||

"ada": 10,

|

||||

"text-embedding-ada-002": 0.2,

|

||||

"text-embedding-ada-002": 0.05,

|

||||

"text-search-ada-doc-001": 10,

|

||||

"text-moderation-stable": 0.1,

|

||||

"text-moderation-latest": 0.1,

|

||||

"dall-e": 8,

|

||||

"claude-instant-1": 0.75,

|

||||

"claude-2": 30,

|

||||

"ERNIE-Bot": 0.8572, // ¥0.012 / 1k tokens

|

||||

"ERNIE-Bot-turbo": 0.5715, // ¥0.008 / 1k tokens

|

||||

"PaLM-2": 1,

|

||||

"chatglm_pro": 0.7143, // ¥0.01 / 1k tokens

|

||||

"chatglm_std": 0.3572, // ¥0.005 / 1k tokens

|

||||

"chatglm_lite": 0.1429, // ¥0.002 / 1k tokens

|

||||

}

|

||||

|

||||

func ModelRatio2JSONString() string {

|

||||

|

||||

@@ -7,16 +7,24 @@ import (

|

||||

)

|

||||

|

||||

func GetSubscription(c *gin.Context) {

|

||||

var quota int

|

||||

var remainQuota int

|

||||

var usedQuota int

|

||||

var err error

|

||||

var token *model.Token

|

||||

var expiredTime int64

|

||||

if common.DisplayTokenStatEnabled {

|

||||

tokenId := c.GetInt("token_id")

|

||||

token, err = model.GetTokenById(tokenId)

|

||||

quota = token.RemainQuota

|

||||

expiredTime = token.ExpiredTime

|

||||

remainQuota = token.RemainQuota

|

||||

usedQuota = token.UsedQuota

|

||||

} else {

|

||||

userId := c.GetInt("id")

|

||||

quota, err = model.GetUserQuota(userId)

|

||||

remainQuota, err = model.GetUserQuota(userId)

|

||||

usedQuota, err = model.GetUserUsedQuota(userId)

|

||||

}

|

||||

if expiredTime <= 0 {

|

||||

expiredTime = 0

|

||||

}

|

||||

if err != nil {

|

||||

openAIError := OpenAIError{

|

||||

@@ -28,16 +36,21 @@ func GetSubscription(c *gin.Context) {

|

||||

})

|

||||

return

|

||||

}

|

||||

quota := remainQuota + usedQuota

|

||||

amount := float64(quota)

|

||||

if common.DisplayInCurrencyEnabled {

|

||||

amount /= common.QuotaPerUnit

|

||||

}

|

||||

if token != nil && token.UnlimitedQuota {

|

||||

amount = 100000000

|

||||

}

|

||||

subscription := OpenAISubscriptionResponse{

|

||||

Object: "billing_subscription",

|

||||

HasPaymentMethod: true,

|

||||

SoftLimitUSD: amount,

|

||||

HardLimitUSD: amount,

|

||||

SystemHardLimitUSD: amount,

|

||||

AccessUntil: expiredTime,

|

||||

}

|

||||

c.JSON(200, subscription)

|

||||

return

|

||||
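
The handler above now reports the total quota (remaining plus used), optionally converted to currency, and substitutes a large fixed limit for unlimited tokens. The arithmetic can be sketched in isolation as follows. `subscriptionLimit` is a hypothetical helper, and the value of `quotaPerUnit` is an assumption because `common.QuotaPerUnit` is not shown in this diff.

```go
package main

import "fmt"

// quotaPerUnit is an assumed value for illustration only; the real
// common.QuotaPerUnit is defined outside this diff.
const quotaPerUnit = 500000.0

// subscriptionLimit (hypothetical) mirrors the arithmetic in the hunk:
// total = remaining + used, divided by quotaPerUnit when amounts are
// displayed as currency, and a fixed large value for unlimited tokens.
func subscriptionLimit(remain, used int, displayInCurrency, unlimited bool) float64 {
	if unlimited {
		return 100000000
	}
	amount := float64(remain + used)
	if displayInCurrency {
		amount /= quotaPerUnit
	}
	return amount
}

func main() {
	fmt.Println(subscriptionLimit(250000, 250000, true, false))
	fmt.Println(subscriptionLimit(100, 50, false, false))
	fmt.Println(subscriptionLimit(0, 0, true, true))
}
```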

@@ -71,7 +84,7 @@ func GetUsage(c *gin.Context) {

|

||||

}

|

||||

usage := OpenAIUsageResponse{

|

||||

Object: "list",

|

||||

TotalUsage: amount,

|

||||

TotalUsage: amount * 100,

|

||||

}

|

||||

c.JSON(200, usage)

|

||||

return

|

||||

|

||||

@@ -22,6 +22,7 @@ type OpenAISubscriptionResponse struct {

|

||||

SoftLimitUSD float64 `json:"soft_limit_usd"`

|

||||

HardLimitUSD float64 `json:"hard_limit_usd"`

|

||||

SystemHardLimitUSD float64 `json:"system_hard_limit_usd"`

|

||||

AccessUntil int64 `json:"access_until"`

|

||||

}

|

||||

|

||||

type OpenAIUsageDailyCost struct {

|

||||

@@ -32,6 +33,13 @@ type OpenAIUsageDailyCost struct {

|

||||

}

|

||||

}

|

||||

|

||||

type OpenAICreditGrants struct {

|

||||

Object string `json:"object"`

|

||||

TotalGranted float64 `json:"total_granted"`

|

||||

TotalUsed float64 `json:"total_used"`

|

||||

TotalAvailable float64 `json:"total_available"`

|

||||

}

|

||||

|

||||

type OpenAIUsageResponse struct {

|

||||

Object string `json:"object"`

|

||||

//DailyCosts []OpenAIUsageDailyCost `json:"daily_costs"`

|

||||

@@ -61,6 +69,14 @@ type API2GPTUsageResponse struct {

|

||||

TotalRemaining float64 `json:"total_remaining"`

|

||||

}

|

||||

|

||||

type APGC2DGPTUsageResponse struct {

|

||||

//Grants interface{} `json:"grants"`

|

||||

Object string `json:"object"`

|

||||

TotalAvailable float64 `json:"total_available"`

|

||||

TotalGranted float64 `json:"total_granted"`

|

||||

TotalUsed float64 `json:"total_used"`

|

||||

}

|

||||

|

||||

// GetAuthHeader get auth header

|

||||

func GetAuthHeader(token string) http.Header {

|

||||

h := http.Header{}

|

||||

@@ -81,6 +97,9 @@ func GetResponseBody(method, url string, channel *model.Channel, headers http.He

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

if res.StatusCode != http.StatusOK {

|

||||

return nil, fmt.Errorf("status code: %d", res.StatusCode)

|

||||

}

|

||||

body, err := io.ReadAll(res.Body)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

@@ -92,6 +111,22 @@ func GetResponseBody(method, url string, channel *model.Channel, headers http.He

|

||||

return body, nil

|

||||

}

|

||||

|

||||

func updateChannelCloseAIBalance(channel *model.Channel) (float64, error) {

|

||||

url := fmt.Sprintf("%s/dashboard/billing/credit_grants", channel.BaseURL)

|

||||

body, err := GetResponseBody("GET", url, channel, GetAuthHeader(channel.Key))

|

||||

|

||||

if err != nil {

|

||||

return 0, err

|

||||

}

|

||||

response := OpenAICreditGrants{}

|

||||

err = json.Unmarshal(body, &response)

|

||||

if err != nil {

|

||||

return 0, err

|

||||

}

|

||||

channel.UpdateBalance(response.TotalAvailable)

|

||||

return response.TotalAvailable, nil

|

||||

}

|

||||

|

||||

func updateChannelOpenAISBBalance(channel *model.Channel) (float64, error) {

|

||||

url := fmt.Sprintf("https://api.openai-sb.com/sb-api/user/status?api_key=%s", channel.Key)

|

||||

body, err := GetResponseBody("GET", url, channel, GetAuthHeader(channel.Key))

|

||||

@@ -150,8 +185,26 @@ func updateChannelAPI2GPTBalance(channel *model.Channel) (float64, error) {

|

||||

return response.TotalRemaining, nil

|

||||

}

|

||||

|

||||

func updateChannelAIGC2DBalance(channel *model.Channel) (float64, error) {

|

||||

url := "https://api.aigc2d.com/dashboard/billing/credit_grants"

|

||||

body, err := GetResponseBody("GET", url, channel, GetAuthHeader(channel.Key))

|

||||

if err != nil {

|

||||

return 0, err

|

||||

}

|

||||

response := APGC2DGPTUsageResponse{}

|

||||

err = json.Unmarshal(body, &response)

|

||||

if err != nil {

|

||||

return 0, err

|

||||

}

|

||||

channel.UpdateBalance(response.TotalAvailable)

|

||||

return response.TotalAvailable, nil

|

||||

}

|

||||

|

||||

func updateChannelBalance(channel *model.Channel) (float64, error) {

|

||||

baseURL := common.ChannelBaseURLs[channel.Type]

|

||||

if channel.BaseURL == "" {

|

||||

channel.BaseURL = baseURL

|

||||

}

|

||||

switch channel.Type {

|

||||

case common.ChannelTypeOpenAI:

|

||||

if channel.BaseURL != "" {

|

||||

@@ -161,12 +214,16 @@ func updateChannelBalance(channel *model.Channel) (float64, error) {

|

||||

return 0, errors.New("尚未实现")

|

||||

case common.ChannelTypeCustom:

|

||||

baseURL = channel.BaseURL

|

||||

case common.ChannelTypeCloseAI:

|

||||

return updateChannelCloseAIBalance(channel)

|

||||

case common.ChannelTypeOpenAISB:

|

||||

return updateChannelOpenAISBBalance(channel)

|

||||

case common.ChannelTypeAIProxy:

|

||||

return updateChannelAIProxyBalance(channel)

|

||||

case common.ChannelTypeAPI2GPT:

|

||||

return updateChannelAPI2GPTBalance(channel)

|

||||

case common.ChannelTypeAIGC2D:

|

||||

return updateChannelAIGC2DBalance(channel)

|

||||

default:

|

||||

return 0, errors.New("尚未实现")

|

||||

}

|

||||

|

||||

@@ -14,7 +14,7 @@ import (

|

||||

"time"

|

||||

)

|

||||

|

||||

func testChannel(channel *model.Channel, request ChatRequest) error {

|

||||

func testChannel(channel *model.Channel, request ChatRequest) (error, *OpenAIError) {

|

||||

switch channel.Type {

|

||||

case common.ChannelTypeAzure:

|

||||

request.Model = "gpt-35-turbo"

|

||||

@@ -33,11 +33,11 @@ func testChannel(channel *model.Channel, request ChatRequest) error {

|

||||

|

||||

jsonData, err := json.Marshal(request)

|

||||

if err != nil {

|

||||

return err

|

||||

return err, nil

|

||||

}

|

||||

req, err := http.NewRequest("POST", requestURL, bytes.NewBuffer(jsonData))

|

||||

if err != nil {

|

||||

return err

|

||||

return err, nil

|

||||

}

|

||||

if channel.Type == common.ChannelTypeAzure {

|

||||

req.Header.Set("api-key", channel.Key)

|

||||

@@ -48,18 +48,18 @@ func testChannel(channel *model.Channel, request ChatRequest) error {

|

||||

client := &http.Client{}

|

||||

resp, err := client.Do(req)

|

||||

if err != nil {

|

||||

return err

|

||||

return err, nil

|

||||

}

|

||||

defer resp.Body.Close()

|

||||

var response TextResponse

|

||||

err = json.NewDecoder(resp.Body).Decode(&response)

|

||||

if err != nil {

|

||||

return err

|

||||

return err, nil

|

||||

}

|

||||

if response.Usage.CompletionTokens == 0 {

|

||||

return errors.New(fmt.Sprintf("type %s, code %v, message %s", response.Error.Type, response.Error.Code, response.Error.Message))

|

||||

return errors.New(fmt.Sprintf("type %s, code %v, message %s", response.Error.Type, response.Error.Code, response.Error.Message)), &response.Error

|

||||

}

|

||||

return nil

|

||||

return nil, nil

|

||||

}

|

||||

|

||||

func buildTestRequest() *ChatRequest {

|

||||

@@ -94,7 +94,7 @@ func TestChannel(c *gin.Context) {

|

||||

}

|

||||

testRequest := buildTestRequest()

|

||||

tik := time.Now()

|

||||

err = testChannel(channel, *testRequest)

|

||||

err, _ = testChannel(channel, *testRequest)

|

||||

tok := time.Now()

|

||||

milliseconds := tok.Sub(tik).Milliseconds()

|

||||

go channel.UpdateResponseTime(milliseconds)

|

||||

@@ -158,13 +158,14 @@ func testAllChannels(notify bool) error {

|

||||

continue

|

||||

}

|

||||

tik := time.Now()

|

||||

err := testChannel(channel, *testRequest)

|

||||

err, openaiErr := testChannel(channel, *testRequest)

|

||||

tok := time.Now()

|

||||

milliseconds := tok.Sub(tik).Milliseconds()

|

||||

if err != nil || milliseconds > disableThreshold {

|

||||

if milliseconds > disableThreshold {

|

||||

err = errors.New(fmt.Sprintf("响应时间 %.2fs 超过阈值 %.2fs", float64(milliseconds)/1000.0, float64(disableThreshold)/1000.0))

|

||||

}

|

||||

if milliseconds > disableThreshold {

|

||||

err = errors.New(fmt.Sprintf("响应时间 %.2fs 超过阈值 %.2fs", float64(milliseconds)/1000.0, float64(disableThreshold)/1000.0))

|

||||

disableChannel(channel.Id, channel.Name, err.Error())

|

||||

}

|

||||

if shouldDisableChannel(openaiErr) {

|

||||

disableChannel(channel.Id, channel.Name, err.Error())

|

||||

}

|

||||

channel.UpdateResponseTime(milliseconds)

|

||||
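
After this change a failed test no longer disables a channel by itself: the channel is disabled when the response time exceeds the threshold or when `shouldDisableChannel` accepts the upstream error. The decision can be sketched as below. The body of `shouldDisableChannel` is not part of this diff, so the rule in `shouldDisable` (the error type and code it matches) is purely an assumption, and the sketch returns after the first matching reason instead of calling a disable function twice.

```go
package main

import "fmt"

// apiError is a hypothetical stand-in for the upstream error payload.
type apiError struct {
	Type string
	Code string
}

// shouldDisable is an assumed rule for illustration; the real
// shouldDisableChannel is defined outside this diff.
func shouldDisable(e *apiError) bool {
	if e == nil {
		return false
	}
	return e.Type == "insufficient_quota" || e.Code == "invalid_api_key"
}

// disableReason reports whether a channel should be disabled and why.
func disableReason(elapsedMs, thresholdMs int64, e *apiError) (string, bool) {
	if elapsedMs > thresholdMs {
		return fmt.Sprintf("response time %.2fs exceeds threshold %.2fs",
			float64(elapsedMs)/1000.0, float64(thresholdMs)/1000.0), true
	}
	if shouldDisable(e) {
		return "upstream error: " + e.Type, true
	}
	return "", false
}

func main() {
	fmt.Println(disableReason(6000, 5000, nil))
	fmt.Println(disableReason(800, 5000, &apiError{Type: "insufficient_quota"}))
	fmt.Println(disableReason(800, 5000, &apiError{Type: "server_error"}))
}
```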

|

||||

@@ -13,7 +13,12 @@ func GetAllLogs(c *gin.Context) {

|

||||

p = 0

|

||||

}

|

||||

logType, _ := strconv.Atoi(c.Query("type"))

|

||||

logs, err := model.GetAllLogs(logType, p*common.ItemsPerPage, common.ItemsPerPage)

|

||||

startTimestamp, _ := strconv.ParseInt(c.Query("start_timestamp"), 10, 64)

|

||||

endTimestamp, _ := strconv.ParseInt(c.Query("end_timestamp"), 10, 64)

|

||||

username := c.Query("username")

|

||||

tokenName := c.Query("token_name")

|

||||

modelName := c.Query("model_name")

|

||||

logs, err := model.GetAllLogs(logType, startTimestamp, endTimestamp, modelName, username, tokenName, p*common.ItemsPerPage, common.ItemsPerPage)

|

||||

if err != nil {

|

||||

c.JSON(200, gin.H{

|

||||

"success": false,

|

||||

@@ -35,7 +40,11 @@ func GetUserLogs(c *gin.Context) {

|

||||

}

|

||||

userId := c.GetInt("id")

|

||||

logType, _ := strconv.Atoi(c.Query("type"))

|

||||

logs, err := model.GetUserLogs(userId, logType, p*common.ItemsPerPage, common.ItemsPerPage)

|

||||

startTimestamp, _ := strconv.ParseInt(c.Query("start_timestamp"), 10, 64)

|

||||

endTimestamp, _ := strconv.ParseInt(c.Query("end_timestamp"), 10, 64)

|

||||

tokenName := c.Query("token_name")

|

||||

modelName := c.Query("model_name")

|

||||

logs, err := model.GetUserLogs(userId, logType, startTimestamp, endTimestamp, modelName, tokenName, p*common.ItemsPerPage, common.ItemsPerPage)

|

||||

if err != nil {

|

||||

c.JSON(200, gin.H{

|

||||

"success": false,

|

||||

@@ -84,3 +93,41 @@ func SearchUserLogs(c *gin.Context) {

|

||||

"data": logs,

|

||||

})

|

||||

}

|

||||

|

||||

func GetLogsStat(c *gin.Context) {

|

||||

logType, _ := strconv.Atoi(c.Query("type"))

|

||||

startTimestamp, _ := strconv.ParseInt(c.Query("start_timestamp"), 10, 64)

|

||||

endTimestamp, _ := strconv.ParseInt(c.Query("end_timestamp"), 10, 64)

|

||||

tokenName := c.Query("token_name")

|

||||

username := c.Query("username")

|

||||

modelName := c.Query("model_name")

|

||||

quotaNum := model.SumUsedQuota(logType, startTimestamp, endTimestamp, modelName, username, tokenName)

|

||||

//tokenNum := model.SumUsedToken(logType, startTimestamp, endTimestamp, modelName, username, "")

|

||||

c.JSON(200, gin.H{

|

||||

"success": true,

|

||||

"message": "",

|

||||

"data": gin.H{

|

||||

"quota": quotaNum,

|

||||

//"token": tokenNum,

|

||||

},

|

||||

})

|

||||

}

|

||||

|

||||

func GetLogsSelfStat(c *gin.Context) {

|

||||

username := c.GetString("username")

|

||||

logType, _ := strconv.Atoi(c.Query("type"))

|

||||

startTimestamp, _ := strconv.ParseInt(c.Query("start_timestamp"), 10, 64)

|

||||

endTimestamp, _ := strconv.ParseInt(c.Query("end_timestamp"), 10, 64)

|

||||

tokenName := c.Query("token_name")

|

||||

modelName := c.Query("model_name")

|

||||

quotaNum := model.SumUsedQuota(logType, startTimestamp, endTimestamp, modelName, username, tokenName)

|

||||

//tokenNum := model.SumUsedToken(logType, startTimestamp, endTimestamp, modelName, username, tokenName)

|

||||

c.JSON(200, gin.H{

|

||||

"success": true,

|

||||

"message": "",

|

||||

"data": gin.H{

|

||||

"quota": quotaNum,

|

||||

//"token": tokenNum,

|

||||

},

|

||||

})

|

||||

}

|

||||

|

||||

@@ -127,8 +127,9 @@ func SendPasswordResetEmail(c *gin.Context) {

|

||||

link := fmt.Sprintf("%s/user/reset?email=%s&token=%s", common.ServerAddress, email, code)

|

||||

subject := fmt.Sprintf("%s密码重置", common.SystemName)

|

||||

content := fmt.Sprintf("<p>您好,你正在进行%s密码重置。</p>"+

|

||||

"<p>点击<a href='%s'>此处</a>进行密码重置。</p>"+

|

||||

"<p>重置链接 %d 分钟内有效,如果不是本人操作,请忽略。</p>", common.SystemName, link, common.VerificationValidMinutes)

|

||||

"<p>点击 <a href='%s'>此处</a> 进行密码重置。</p>"+

|

||||

"<p>如果链接无法点击,请尝试点击下面的链接或将其复制到浏览器中打开:<br> %s </p>"+

|

||||

"<p>重置链接 %d 分钟内有效,如果不是本人操作,请忽略。</p>", common.SystemName, link, link, common.VerificationValidMinutes)

|

||||

err := common.SendEmail(subject, email, content)

|

||||

if err != nil {

|

||||

c.JSON(http.StatusOK, gin.H{

|

||||

|

||||
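
The reset link above interpolates the email address and code directly into the query string. If either value can contain reserved characters such as `+` or `&`, one option is to build the query with `url.Values` so both are escaped. This is a sketch of that alternative, not what the commit does, and `buildResetLink` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"net/url"
)

// buildResetLink (hypothetical) builds the password-reset URL with
// query-escaped parameters. Values.Encode sorts keys alphabetically,
// so "email" precedes "token".
func buildResetLink(serverAddress, email, code string) string {
	q := url.Values{}
	q.Set("email", email)
	q.Set("token", code)
	return fmt.Sprintf("%s/user/reset?%s", serverAddress, q.Encode())
}

func main() {
	fmt.Println(buildResetLink("https://example.com", "a+b@example.com", "abc"))
}
```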

@@ -2,6 +2,7 @@ package controller

|

||||

|

||||

import (

|

||||

"fmt"

|

||||

|

||||

"github.com/gin-gonic/gin"

|

||||

)

|

||||

|

||||

@@ -53,6 +54,15 @@ func init() {

|

||||

})

|

||||

// https://platform.openai.com/docs/models/model-endpoint-compatibility

|

||||

openAIModels = []OpenAIModels{

|

||||

{

|

||||

Id: "dall-e",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "openai",

|

||||

Permission: permission,

|

||||

Root: "dall-e",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "gpt-3.5-turbo",

|

||||

Object: "model",

|

||||

@@ -224,6 +234,96 @@ func init() {

|

||||

Root: "text-moderation-stable",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "text-davinci-edit-001",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "openai",

|

||||

Permission: permission,

|

||||

Root: "text-davinci-edit-001",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "code-davinci-edit-001",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "openai",

|

||||

Permission: permission,

|

||||

Root: "code-davinci-edit-001",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "claude-instant-1",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "anthropic",

|

||||

Permission: permission,

|

||||

Root: "claude-instant-1",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "claude-2",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "anthropic",

|

||||

Permission: permission,

|

||||

Root: "claude-2",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "ERNIE-Bot",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "baidu",

|

||||

Permission: permission,

|

||||

Root: "ERNIE-Bot",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "ERNIE-Bot-turbo",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "baidu",

|

||||

Permission: permission,

|

||||

Root: "ERNIE-Bot-turbo",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "PaLM-2",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "google",

|

||||

Permission: permission,

|

||||

Root: "PaLM-2",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "chatglm_pro",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "zhipu",

|

||||

Permission: permission,

|

||||

Root: "chatglm_pro",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "chatglm_std",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "zhipu",

|

||||

Permission: permission,

|

||||

Root: "chatglm_std",

|

||||

Parent: nil,

|

||||

},

|

||||

{

|

||||

Id: "chatglm_lite",

|

||||

Object: "model",

|

||||

Created: 1677649963,

|

||||

OwnedBy: "zhipu",

|

||||

Permission: permission,

|

||||

Root: "chatglm_lite",

|

||||

Parent: nil,

|

||||

},

|

||||

}

|

||||

openAIModelsMap = make(map[string]OpenAIModels)

|

||||

for _, model := range openAIModels {

|

||||

|

||||

203

controller/relay-baidu.go

Normal file

@@ -0,0 +1,203 @@

|

||||

package controller

|

||||

|

||||

import (

|

||||

"bufio"

|

||||

"encoding/json"

|

||||

"github.com/gin-gonic/gin"

|

||||

"io"

|

||||

"net/http"

|

||||

"one-api/common"

|

||||

"strings"

|

||||

)

|

||||

|

||||

// https://cloud.baidu.com/doc/WENXINWORKSHOP/s/flfmc9do2

|

||||

|

||||

type BaiduTokenResponse struct {

|

||||

RefreshToken string `json:"refresh_token"`

|

||||

ExpiresIn int `json:"expires_in"`

|

||||

SessionKey string `json:"session_key"`

|

||||

AccessToken string `json:"access_token"`

|

||||

Scope string `json:"scope"`

|

||||

SessionSecret string `json:"session_secret"`

|

||||

}

|

||||

|

||||

type BaiduMessage struct {

|

||||

Role string `json:"role"`

|

||||

Content string `json:"content"`

|

||||

}

|

||||

|

||||

type BaiduChatRequest struct {

|

||||

Messages []BaiduMessage `json:"messages"`

|

||||

Stream bool `json:"stream"`

|

||||

UserId string `json:"user_id,omitempty"`

|

||||

}

|

||||

|

||||

type BaiduError struct {

|

||||

ErrorCode int `json:"error_code"`

|

||||

ErrorMsg string `json:"error_msg"`

|

||||

}

|

||||

|

||||

type BaiduChatResponse struct {

|

||||

Id string `json:"id"`

|

||||

Object string `json:"object"`

|

||||

Created int64 `json:"created"`

|

||||

Result string `json:"result"`

|

||||

IsTruncated bool `json:"is_truncated"`

|

||||

NeedClearHistory bool `json:"need_clear_history"`

|

||||

Usage Usage `json:"usage"`

|

||||

BaiduError

|

||||

}

|

||||

|

||||

type BaiduChatStreamResponse struct {

|

||||

BaiduChatResponse

|

||||

SentenceId int `json:"sentence_id"`

|

||||

IsEnd bool `json:"is_end"`

|

||||

}

|

||||

|

||||

func requestOpenAI2Baidu(request GeneralOpenAIRequest) *BaiduChatRequest {

|

||||

messages := make([]BaiduMessage, 0, len(request.Messages))

|

||||

for _, message := range request.Messages {

|

||||

messages = append(messages, BaiduMessage{

|

||||

Role: message.Role,

|

||||

Content: message.Content,

|

||||

})

|

||||

}

|

||||

return &BaiduChatRequest{

|

||||

Messages: messages,

|

||||

Stream: request.Stream,

|

||||

}

|

||||

}

|

||||

|

||||

func responseBaidu2OpenAI(response *BaiduChatResponse) *OpenAITextResponse {

|

||||

choice := OpenAITextResponseChoice{

|

||||

Index: 0,

|

||||

Message: Message{

|

||||

Role: "assistant",

|

||||

Content: response.Result,

|

||||

},

|

||||

FinishReason: "stop",

|

||||

}

|

||||

fullTextResponse := OpenAITextResponse{

|

||||

Id: response.Id,

|

||||

Object: "chat.completion",

|

||||

Created: response.Created,

|

||||

Choices: []OpenAITextResponseChoice{choice},

|

||||

Usage: response.Usage,

|

||||

}

|

||||

return &fullTextResponse

|

||||

}

|

||||

|

||||

func streamResponseBaidu2OpenAI(baiduResponse *BaiduChatStreamResponse) *ChatCompletionsStreamResponse {

|

||||

var choice ChatCompletionsStreamResponseChoice

|

||||

choice.Delta.Content = baiduResponse.Result

|

||||

choice.FinishReason = "stop"

|

||||

response := ChatCompletionsStreamResponse{

|

||||

Id: baiduResponse.Id,

|

||||

Object: "chat.completion.chunk",

|

||||

Created: baiduResponse.Created,

|

||||

Model: "ernie-bot",

|

||||

Choices: []ChatCompletionsStreamResponseChoice{choice},

|

||||

}

|

||||

return &response

|

||||

}

|

||||

|

||||

func baiduStreamHandler(c *gin.Context, resp *http.Response) (*OpenAIErrorWithStatusCode, *Usage) {

|

||||

var usage Usage

|

||||

scanner := bufio.NewScanner(resp.Body)

|

||||

scanner.Split(func(data []byte, atEOF bool) (advance int, token []byte, err error) {

|