Compare commits

242 Commits

0.0.3

...

v0.5.11-al

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

d062bc60e4 | ||

|

|

39c1882970 | ||

|

|

9c42c7dfd9 | ||

|

|

903aaeded0 | ||

|

|

bdd4be562d | ||

|

|

37afb313b5 | ||

|

|

c9ebcab8b8 | ||

|

|

86261cc656 | ||

|

|

8491785c9d | ||

|

|

e848a3f7fa | ||

|

|

318adf5985 | ||

|

|

965d7fc3d2 | ||

|

|

aa3f605894 | ||

|

|

7b8eff1f22 | ||

|

|

e80cd508ba | ||

|

|

d37f836d53 | ||

|

|

e0b2d1ae47 | ||

|

|

797ead686b | ||

|

|

0d22cf9ead | ||

|

|

48989d4a0b | ||

|

|

6227eee5bc | ||

|

|

cbf8f07747 | ||

|

|

4a96031ce6 | ||

|

|

92886093ae | ||

|

|

0c022f17cb | ||

|

|

83f95935de | ||

|

|

aa03c89133 | ||

|

|

505817ca17 | ||

|

|

cb5a3df616 | ||

|

|

7772064d87 | ||

|

|

c50c609565 | ||

|

|

498dea2dbb | ||

|

|

c725cc8842 | ||

|

|

af8908db54 | ||

|

|

d8029550f7 | ||

|

|

f44fbe3fe7 | ||

|

|

1c8922153d | ||

|

|

f3c07e1451 | ||

|

|

40ceb29e54 | ||

|

|

0699ecd0af | ||

|

|

ee9e746520 | ||

|

|

a763681c2e | ||

|

|

b7fcb319da | ||

|

|

67c64e71c8 | ||

|

|

97030e27f8 | ||

|

|

461f5dab56 | ||

|

|

af378c59af | ||

|

|

bc6769826b | ||

|

|

0fe26cc4bd | ||

|

|

7d6a169669 | ||

|

|

66f06e5d6f | ||

|

|

6acb9537a9 | ||

|

|

7069c49bdf | ||

|

|

58dee76bf7 | ||

|

|

5cf23d8698 | ||

|

|

366b82128f | ||

|

|

2a70744dbf | ||

|

|

4c5feee0b6 | ||

|

|

9ba5388367 | ||

|

|

379074f7d0 | ||

|

|

01f7b0186f | ||

|

|

a3f80a3392 | ||

|

|

8f5b83562b | ||

|

|

b7570d5c77 | ||

|

|

0e73418cdf | ||

|

|

9889377f0e | ||

|

|

b273464e77 | ||

|

|

b4e43d97fd | ||

|

|

3347a44023 | ||

|

|

923e24534b | ||

|

|

b4d67ca614 | ||

|

|

d85e356b6e | ||

|

|

495fc628e4 | ||

|

|

76f9288c34 | ||

|

|

915d13fdd4 | ||

|

|

969f539777 | ||

|

|

54e5f8ecd2 | ||

|

|

34d517cfa2 | ||

|

|

ddcaf95f5f | ||

|

|

1d15157f7d | ||

|

|

de7b9710a5 | ||

|

|

58bb3ab6f6 | ||

|

|

d306cb5229 | ||

|

|

6c5307d0c4 | ||

|

|

7c4505bdfc | ||

|

|

9d43ec57d8 | ||

|

|

e5311892d1 | ||

|

|

bc7c9105f4 | ||

|

|

3fe76c8af7 | ||

|

|

c70c614018 | ||

|

|

0d87de697c | ||

|

|

aec343dc38 | ||

|

|

89d458b9cf | ||

|

|

63fafba112 | ||

|

|

a398f35968 | ||

|

|

57aa637c77 | ||

|

|

3b483639a4 | ||

|

|

22980b4c44 | ||

|

|

64cdb7eafb | ||

|

|

824444244b | ||

|

|

fbe9985f57 | ||

|

|

a27a5bcc06 | ||

|

|

e28d4b1741 | ||

|

|

f073592d39 | ||

|

|

fa41ca9805 | ||

|

|

e338de45b6 | ||

|

|

114587b46f | ||

|

|

b4b4acc288 | ||

|

|

d663de3e3a | ||

|

|

a85ecace2e | ||

|

|

fbdea91ea1 | ||

|

|

8d34b7a77e | ||

|

|

cbd62011b8 | ||

|

|

4701897e2e | ||

|

|

0f6c132a80 | ||

|

|

3cac45dc85 | ||

|

|

47c08c72ce | ||

|

|

53b2cace0b | ||

|

|

f0fc991b44 | ||

|

|

594f06e7b0 | ||

|

|

197d1d7a9d | ||

|

|

f9b748c2ca | ||

|

|

fd98463611 | ||

|

|

f5a1cd3463 | ||

|

|

8651451e53 | ||

|

|

1c5bb97a42 | ||

|

|

de868e4e4e | ||

|

|

1d258cc898 | ||

|

|

37e09d764c | ||

|

|

159b9e3369 | ||

|

|

92001986db | ||

|

|

a5647b1ea7 | ||

|

|

215e54fc96 | ||

|

|

ecf8a6d875 | ||

|

|

24df3e5f62 | ||

|

|

12ef9679a7 | ||

|

|

328aa68255 | ||

|

|

4335f005a6 | ||

|

|

fe26a1448d | ||

|

|

42451d9d02 | ||

|

|

25c4c111ab | ||

|

|

0d50ad4b2b | ||

|

|

959bcdef88 | ||

|

|

39ae8075e4 | ||

|

|

b57a0eca16 | ||

|

|

1b4cc78890 | ||

|

|

420c375140 | ||

|

|

01863d3e44 | ||

|

|

d0a0e871e1 | ||

|

|

bd6fe1e93c | ||

|

|

c55bb67818 | ||

|

|

0f949c3782 | ||

|

|

a721a5b6f9 | ||

|

|

276163affd | ||

|

|

621eb91b46 | ||

|

|

7e575abb95 | ||

|

|

9db93316c4 | ||

|

|

c3dc315e75 | ||

|

|

04acdb1ccb | ||

|

|

f0d5e102a3 | ||

|

|

abbf2fded0 | ||

|

|

ef2c5abb5b | ||

|

|

56b5007379 | ||

|

|

d09d317459 | ||

|

|

1c4409ae80 | ||

|

|

5ee24e8acf | ||

|

|

4f2f911e4d | ||

|

|

fdb2cccf65 | ||

|

|

a3e267df7e | ||

|

|

ac7c0f3a76 | ||

|

|

efeb9a16ce | ||

|

|

05e4f2b439 | ||

|

|

7e058bfb9b | ||

|

|

dfaa0183b7 | ||

|

|

1b56becfaa | ||

|

|

23b1c63538 | ||

|

|

49d1a63402 | ||

|

|

2a7b82650c | ||

|

|

8ea7b9aae2 | ||

|

|

5136b12612 | ||

|

|

80a49e01a3 | ||

|

|

8fb082ba3b | ||

|

|

86c2627c24 | ||

|

|

90b4cac7f3 | ||

|

|

e4bacc45d6 | ||

|

|

da1d81998f | ||

|

|

cac61b9f66 | ||

|

|

3da12e99d9 | ||

|

|

4ef5e2020c | ||

|

|

af20063a8d | ||

|

|

ca512f6a38 | ||

|

|

0e9ff8825e | ||

|

|

e0b4f96b5b | ||

|

|

eae9b6e607 | ||

|

|

7bddc73b96 | ||

|

|

2a527ee436 | ||

|

|

e42119b73d | ||

|

|

821c559e89 | ||

|

|

7e2bca7e9c | ||

|

|

1e16ef3e0d | ||

|

|

476a46ad7e | ||

|

|

c58f710227 | ||

|

|

150d068e9f | ||

|

|

be780462f1 | ||

|

|

f2159e1033 | ||

|

|

466005de07 | ||

|

|

2b088a1678 | ||

|

|

3a18cebe34 | ||

|

|

cc36bf9c13 | ||

|

|

3b36608bbd | ||

|

|

29fa94e7d2 | ||

|

|

9c436921d1 | ||

|

|

463b0b3c51 | ||

|

|

c3d85a28d4 | ||

|

|

7422b0d051 | ||

|

|

5a62357c93 | ||

|

|

b464e2907a | ||

|

|

d96cf2e84d | ||

|

|

446337c329 | ||

|

|

1dfa190e79 | ||

|

|

2d49ca6a07 | ||

|

|

89bcaaf989 | ||

|

|

afcd1bd27b | ||

|

|

c2c455c980 | ||

|

|

30a7f1a1c7 | ||

|

|

c9d2e42a9e | ||

|

|

3fca6ff534 | ||

|

|

8cbbeb784f | ||

|

|

ec88c0c240 | ||

|

|

065147b440 | ||

|

|

fe8f216dd9 | ||

|

|

b7d0616ae0 | ||

|

|

ce9c8024a6 | ||

|

|

8a866078b2 | ||

|

|

3e81d8af45 | ||

|

|

b8cb86c2c1 | ||

|

|

f45d586400 | ||

|

|

50dec03ff3 | ||

|

|

f31d400b6f | ||

|

|

130e6bfd83 | ||

|

|

d1335ebc01 | ||

|

|

e92da7928b |

11

.github/workflows/linux-release.yml

vendored

@@ -7,6 +7,11 @@ on:

|

||||

tags:

|

||||

- '*'

|

||||

- '!*-alpha*'

|

||||

workflow_dispatch:

|

||||

inputs:

|

||||

name:

|

||||

description: 'reason'

|

||||

required: false

|

||||

jobs:

|

||||

release:

|

||||

runs-on: ubuntu-latest

|

||||

@@ -18,13 +23,13 @@ jobs:

|

||||

- uses: actions/setup-node@v3

|

||||

with:

|

||||

node-version: 16

|

||||

- name: Build Frontend

|

||||

- name: Build Frontend (theme default)

|

||||

env:

|

||||

CI: ""

|

||||

run: |

|

||||

cd web

|

||||

npm install

|

||||

REACT_APP_VERSION=$(git describe --tags) npm run build

|

||||

git describe --tags > VERSION

|

||||

REACT_APP_VERSION=$(git describe --tags) chmod u+x ./build.sh && ./build.sh

|

||||

cd ..

|

||||

- name: Set up Go

|

||||

uses: actions/setup-go@v3

|

||||

|

||||

11

.github/workflows/macos-release.yml

vendored

@@ -7,6 +7,11 @@ on:

|

||||

tags:

|

||||

- '*'

|

||||

- '!*-alpha*'

|

||||

workflow_dispatch:

|

||||

inputs:

|

||||

name:

|

||||

description: 'reason'

|

||||

required: false

|

||||

jobs:

|

||||

release:

|

||||

runs-on: macos-latest

|

||||

@@ -18,13 +23,13 @@ jobs:

|

||||

- uses: actions/setup-node@v3

|

||||

with:

|

||||

node-version: 16

|

||||

- name: Build Frontend

|

||||

- name: Build Frontend (theme default)

|

||||

env:

|

||||

CI: ""

|

||||

run: |

|

||||

cd web

|

||||

npm install

|

||||

REACT_APP_VERSION=$(git describe --tags) npm run build

|

||||

git describe --tags > VERSION

|

||||

REACT_APP_VERSION=$(git describe --tags) chmod u+x ./build.sh && ./build.sh

|

||||

cd ..

|

||||

- name: Set up Go

|

||||

uses: actions/setup-go@v3

|

||||

|

||||

11

.github/workflows/windows-release.yml

vendored

@@ -7,6 +7,11 @@ on:

|

||||

tags:

|

||||

- '*'

|

||||

- '!*-alpha*'

|

||||

workflow_dispatch:

|

||||

inputs:

|

||||

name:

|

||||

description: 'reason'

|

||||

required: false

|

||||

jobs:

|

||||

release:

|

||||

runs-on: windows-latest

|

||||

@@ -21,14 +26,14 @@ jobs:

|

||||

- uses: actions/setup-node@v3

|

||||

with:

|

||||

node-version: 16

|

||||

- name: Build Frontend

|

||||

- name: Build Frontend (theme default)

|

||||

env:

|

||||

CI: ""

|

||||

run: |

|

||||

cd web

|

||||

cd web/default

|

||||

npm install

|

||||

REACT_APP_VERSION=$(git describe --tags) npm run build

|

||||

cd ..

|

||||

cd ../..

|

||||

- name: Set up Go

|

||||

uses: actions/setup-go@v3

|

||||

with:

|

||||

|

||||

4

.gitignore

vendored

@@ -4,4 +4,6 @@ upload

|

||||

*.exe

|

||||

*.db

|

||||

build

|

||||

*.db-journal

|

||||

*.db-journal

|

||||

logs

|

||||

data

|

||||

19

Dockerfile

@@ -1,10 +1,16 @@

|

||||

FROM node:16 as builder

|

||||

|

||||

WORKDIR /build

|

||||

COPY ./web .

|

||||

WORKDIR /web

|

||||

COPY ./VERSION .

|

||||

COPY ./web .

|

||||

|

||||

WORKDIR /web/default

|

||||

RUN npm install

|

||||

RUN REACT_APP_VERSION=$(cat VERSION) npm run build

|

||||

RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat VERSION) npm run build

|

||||

|

||||

WORKDIR /web/berry

|

||||

RUN npm install

|

||||

RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat VERSION) npm run build

|

||||

|

||||

FROM golang AS builder2

|

||||

|

||||

@@ -13,9 +19,10 @@ ENV GO111MODULE=on \

|

||||

GOOS=linux

|

||||

|

||||

WORKDIR /build

|

||||

COPY . .

|

||||

COPY --from=builder /build/build ./web/build

|

||||

ADD go.mod go.sum ./

|

||||

RUN go mod download

|

||||

COPY . .

|

||||

COPY --from=builder /web/build ./web/build

|

||||

RUN go build -ldflags "-s -w -X 'one-api/common.Version=$(cat VERSION)' -extldflags '-static'" -o one-api

|

||||

|

||||

FROM alpine

|

||||

@@ -28,4 +35,4 @@ RUN apk update \

|

||||

COPY --from=builder2 /build/one-api /

|

||||

EXPOSE 3000

|

||||

WORKDIR /data

|

||||

ENTRYPOINT ["/one-api"]

|

||||

ENTRYPOINT ["/one-api"]

|

||||

35

README.en.md

@@ -1,9 +1,9 @@

|

||||

<p align="right">

|

||||

<a href="./README.md">中文</a> | <strong>English</strong>

|

||||

<a href="./README.md">中文</a> | <strong>English</strong> | <a href="./README.ja.md">日本語</a>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/default/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

</p>

|

||||

|

||||

<div align="center">

|

||||

@@ -57,15 +57,13 @@ _✨ Access all LLM through the standard OpenAI API format, easy to deploy & use

|

||||

> **Note**: The latest image pulled from Docker may be an `alpha` release. Specify the version manually if you require stability.

|

||||

|

||||

## Features

|

||||

1. Supports multiple API access channels:

|

||||

+ [x] Official OpenAI channel (support proxy configuration)

|

||||

+ [x] **Azure OpenAI API**

|

||||

+ [x] [API Distribute](https://api.gptjk.top/register?aff=QGxj)

|

||||

+ [x] [OpenAI-SB](https://openai-sb.com)

|

||||

+ [x] [API2D](https://api2d.com/r/197971)

|

||||

+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)

|

||||

+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (invitation code: `OneAPI`)

|

||||

+ [x] Custom channel: Various third-party proxy services not included in the list

|

||||

1. Support for multiple large models:

|

||||

+ [x] [OpenAI ChatGPT Series Models](https://platform.openai.com/docs/guides/gpt/chat-completions-api) (Supports [Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference))

|

||||

+ [x] [Anthropic Claude Series Models](https://anthropic.com)

|

||||

+ [x] [Google PaLM2 and Gemini Series Models](https://developers.generativeai.google)

|

||||

+ [x] [Baidu Wenxin Yiyuan Series Models](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

||||

+ [x] [Alibaba Tongyi Qianwen Series Models](https://help.aliyun.com/document_detail/2400395.html)

|

||||

+ [x] [Zhipu ChatGLM Series Models](https://bigmodel.cn)

|

||||

2. Supports access to multiple channels through **load balancing**.

|

||||

3. Supports **stream mode** that enables typewriter-like effect through stream transmission.

|

||||

4. Supports **multi-machine deployment**. [See here](#multi-machine-deployment) for more details.

|

||||

@@ -139,7 +137,7 @@ The initial account username is `root` and password is `123456`.

|

||||

cd one-api/web

|

||||

npm install

|

||||

npm run build

|

||||

|

||||

|

||||

# Build the backend

|

||||

cd ..

|

||||

go mod download

|

||||

@@ -175,7 +173,12 @@ If you encounter a blank page after deployment, refer to [#97](https://github.co

|

||||

<summary><strong>Deploy on Sealos</strong></summary>

|

||||

<div>

|

||||

|

||||

Please refer to [this tutorial](https://github.com/c121914yu/FastGPT/blob/main/docs/deploy/one-api/sealos.md).

|

||||

> Sealos supports high concurrency, dynamic scaling, and stable operations for millions of users.

|

||||

|

||||

> Click the button below to deploy with one click.👇

|

||||

|

||||

[](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

|

||||

|

||||

|

||||

</div>

|

||||

</details>

|

||||

@@ -186,8 +189,10 @@ Please refer to [this tutorial](https://github.com/c121914yu/FastGPT/blob/main/d

|

||||

|

||||

> Zeabur's servers are located overseas, automatically solving network issues, and the free quota is sufficient for personal usage.

|

||||

|

||||

[](https://zeabur.com/templates/7Q0KO3)

|

||||

|

||||

1. First, fork the code.

|

||||

2. Go to [Zeabur](https://zeabur.com/), log in, and enter the console.

|

||||

2. Go to [Zeabur](https://zeabur.com?referralCode=songquanpeng), log in, and enter the console.

|

||||

3. Create a new project. In Service -> Add Service, select Marketplace, and choose MySQL. Note down the connection parameters (username, password, address, and port).

|

||||

4. Copy the connection parameters and run ```create database `one-api` ``` to create the database.

|

||||

5. Then, in Service -> Add Service, select Git (authorization is required for the first use) and choose your forked repository.

|

||||

@@ -280,7 +285,7 @@ If the channel ID is not provided, load balancing will be used to distribute the

|

||||

+ Double-check that your interface address and API Key are correct.

|

||||

|

||||

## Related Projects

|

||||

[FastGPT](https://github.com/c121914yu/FastGPT): Build an AI knowledge base in three minutes

|

||||

[FastGPT](https://github.com/labring/FastGPT): Knowledge question answering system based on the LLM

|

||||

|

||||

## Note

|

||||

This project is an open-source project. Please use it in compliance with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**. It must not be used for illegal purposes.

|

||||

|

||||

300

README.ja.md

Normal file

@@ -0,0 +1,300 @@

|

||||

<p align="right">

|

||||

<a href="./README.md">中文</a> | <a href="./README.en.md">English</a> | <strong>日本語</strong>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/default/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

</p>

|

||||

|

||||

<div align="center">

|

||||

|

||||

# One API

|

||||

|

||||

_✨ 標準的な OpenAI API フォーマットを通じてすべての LLM にアクセスでき、導入と利用が容易です ✨_

|

||||

|

||||

</div>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://raw.githubusercontent.com/songquanpeng/one-api/main/LICENSE">

|

||||

<img src="https://img.shields.io/github/license/songquanpeng/one-api?color=brightgreen" alt="license">

|

||||

</a>

|

||||

<a href="https://github.com/songquanpeng/one-api/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/songquanpeng/one-api?color=brightgreen&include_prereleases" alt="release">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/repository/docker/justsong/one-api">

|

||||

<img src="https://img.shields.io/docker/pulls/justsong/one-api?color=brightgreen" alt="docker pull">

|

||||

</a>

|

||||

<a href="https://github.com/songquanpeng/one-api/releases/latest">

|

||||

<img src="https://img.shields.io/github/downloads/songquanpeng/one-api/total?color=brightgreen&include_prereleases" alt="release">

|

||||

</a>

|

||||

<a href="https://goreportcard.com/report/github.com/songquanpeng/one-api">

|

||||

<img src="https://goreportcard.com/badge/github.com/songquanpeng/one-api" alt="GoReportCard">

|

||||

</a>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

<a href="#deployment">デプロイチュートリアル</a>

|

||||

·

|

||||

<a href="#usage">使用方法</a>

|

||||

·

|

||||

<a href="https://github.com/songquanpeng/one-api/issues">フィードバック</a>

|

||||

·

|

||||

<a href="#screenshots">スクリーンショット</a>

|

||||

·

|

||||

<a href="https://openai.justsong.cn/">ライブデモ</a>

|

||||

·

|

||||

<a href="#faq">FAQ</a>

|

||||

·

|

||||

<a href="#related-projects">関連プロジェクト</a>

|

||||

·

|

||||

<a href="https://iamazing.cn/page/reward">寄付</a>

|

||||

</p>

|

||||

|

||||

> **警告**: この README は ChatGPT によって翻訳されています。翻訳ミスを発見した場合は遠慮なく PR を投稿してください。

|

||||

|

||||

> **警告**: 英語版の Docker イメージは `justsong/one-api-en` です。

|

||||

|

||||

> **注**: Docker からプルされた最新のイメージは、`alpha` リリースかもしれません。安定性が必要な場合は、手動でバージョンを指定してください。

|

||||

|

||||

## 特徴

|

||||

1. 複数の大型モデルをサポート:

|

||||

+ [x] [OpenAI ChatGPT シリーズモデル](https://platform.openai.com/docs/guides/gpt/chat-completions-api) ([Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference) をサポート)

|

||||

+ [x] [Anthropic Claude シリーズモデル](https://anthropic.com)

|

||||

+ [x] [Google PaLM2/Gemini シリーズモデル](https://developers.generativeai.google)

|

||||

+ [x] [Baidu Wenxin Yiyuan シリーズモデル](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

||||

+ [x] [Alibaba Tongyi Qianwen シリーズモデル](https://help.aliyun.com/document_detail/2400395.html)

|

||||

+ [x] [Zhipu ChatGLM シリーズモデル](https://bigmodel.cn)

|

||||

2. **ロードバランシング**による複数チャンネルへのアクセスをサポート。

|

||||

3. ストリーム伝送によるタイプライター的効果を可能にする**ストリームモード**に対応。

|

||||

4. **マルチマシンデプロイ**に対応。[詳細はこちら](#multi-machine-deployment)を参照。

|

||||

5. トークンの有効期限や使用回数を設定できる**トークン管理**に対応しています。

|

||||

6. **バウチャー管理**に対応しており、バウチャーの一括生成やエクスポートが可能です。バウチャーは口座残高の補充に利用できます。

|

||||

7. **チャンネル管理**に対応し、チャンネルの一括作成が可能。

|

||||

8. グループごとに異なるレートを設定するための**ユーザーグループ**と**チャンネルグループ**をサポートしています。

|

||||

9. チャンネル**モデルリスト設定**に対応。

|

||||

10. **クォータ詳細チェック**をサポート。

|

||||

11. **ユーザー招待報酬**をサポートします。

|

||||

12. 米ドルでの残高表示が可能。

|

||||

13. 新規ユーザー向けのお知らせ公開、リチャージリンク設定、初期残高設定に対応。

|

||||

14. 豊富な**カスタマイズ**オプションを提供します:

|

||||

1. システム名、ロゴ、フッターのカスタマイズが可能。

|

||||

2. HTML と Markdown コードを使用したホームページとアバウトページのカスタマイズ、または iframe を介したスタンドアロンウェブページの埋め込みをサポートしています。

|

||||

15. システム・アクセストークンによる管理 API アクセスをサポートする。

|

||||

16. Cloudflare Turnstile によるユーザー認証に対応。

|

||||

17. ユーザー管理と複数のユーザーログイン/登録方法をサポート:

|

||||

+ 電子メールによるログイン/登録とパスワードリセット。

|

||||

+ [GitHub OAuth](https://github.com/settings/applications/new)。

|

||||

+ WeChat 公式アカウントの認証([WeChat Server](https://github.com/songquanpeng/wechat-server)の追加導入が必要)。

|

||||

18. 他の主要なモデル API が利用可能になった場合、即座にサポートし、カプセル化する。

|

||||

|

||||

## デプロイメント

|

||||

### Docker デプロイメント

|

||||

デプロイコマンド: `docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api-en`。

|

||||

|

||||

コマンドを更新する: `docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrr/watchtower -cR`。

|

||||

|

||||

`-p 3000:3000` の最初の `3000` はホストのポートで、必要に応じて変更できます。

|

||||

|

||||

データはホストの `/home/ubuntu/data/one-api` ディレクトリに保存される。このディレクトリが存在し、書き込み権限があることを確認する、もしくは適切なディレクトリに変更してください。

|

||||

|

||||

Nginxリファレンス設定:

|

||||

```

|

||||

server{

|

||||

server_name openai.justsong.cn; # ドメイン名は適宜変更

|

||||

|

||||

location / {

|

||||

client_max_body_size 64m;

|

||||

proxy_http_version 1.1;

|

||||

proxy_pass http://localhost:3000; # それに応じてポートを変更

|

||||

proxy_set_header Host $host;

|

||||

proxy_set_header X-Forwarded-For $remote_addr;

|

||||

proxy_cache_bypass $http_upgrade;

|

||||

proxy_set_header Accept-Encoding gzip;

|

||||

proxy_read_timeout 300s; # GPT-4 はより長いタイムアウトが必要

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

次に、Let's Encrypt certbot を使って HTTPS を設定します:

|

||||

```bash

|

||||

# Ubuntu に certbot をインストール:

|

||||

sudo snap install --classic certbot

|

||||

sudo ln -s /snap/bin/certbot /usr/bin/certbot

|

||||

# 証明書の生成と Nginx 設定の変更

|

||||

sudo certbot --nginx

|

||||

# プロンプトに従う

|

||||

# Nginx を再起動

|

||||

sudo service nginx restart

|

||||

```

|

||||

|

||||

初期アカウントのユーザー名は `root` で、パスワードは `123456` です。

|

||||

|

||||

### マニュアルデプロイ

|

||||

1. [GitHub Releases](https://github.com/songquanpeng/one-api/releases/latest) から実行ファイルをダウンロードする、もしくはソースからコンパイルする:

|

||||

```shell

|

||||

git clone https://github.com/songquanpeng/one-api.git

|

||||

|

||||

# フロントエンドのビルド

|

||||

cd one-api/web

|

||||

npm install

|

||||

npm run build

|

||||

|

||||

# バックエンドのビルド

|

||||

cd ..

|

||||

go mod download

|

||||

go build -ldflags "-s -w" -o one-api

|

||||

```

|

||||

2. 実行:

|

||||

```shell

|

||||

chmod u+x one-api

|

||||

./one-api --port 3000 --log-dir ./logs

|

||||

```

|

||||

3. [http://localhost:3000/](http://localhost:3000/) にアクセスし、ログインする。初期アカウントのユーザー名は `root`、パスワードは `123456` である。

|

||||

|

||||

より詳細なデプロイのチュートリアルについては、[このページ](https://iamazing.cn/page/how-to-deploy-a-website) を参照してください。

|

||||

|

||||

### マルチマシンデプロイ

|

||||

1. すべてのサーバに同じ `SESSION_SECRET` を設定する。

|

||||

2. `SQL_DSN` を設定し、SQLite の代わりに MySQL を使用する。すべてのサーバは同じデータベースに接続する。

|

||||

3. マスターノード以外のノードの `NODE_TYPE` を `slave` に設定する。

|

||||

4. データベースから定期的に設定を同期するサーバーには `SYNC_FREQUENCY` を設定する。

|

||||

5. マスター以外のノードでは、オプションで `FRONTEND_BASE_URL` を設定して、ページ要求をマスターサーバーにリダイレクトすることができます。

|

||||

6. マスター以外のノードには Redis を個別にインストールし、`REDIS_CONN_STRING` を設定して、キャッシュの有効期限が切れていないときにデータベースにゼロレイテンシーでアクセスできるようにする。

|

||||

7. メインサーバーでもデータベースへのアクセスが高レイテンシになる場合は、Redis を有効にし、`SYNC_FREQUENCY` を設定してデータベースから定期的に設定を同期する必要がある。

|

||||

|

||||

Please refer to the [environment variables](#environment-variables) section for details on using environment variables.

|

||||

|

||||

### コントロールパネル(例: Baota)への展開

|

||||

詳しい手順は [#175](https://github.com/songquanpeng/one-api/issues/175) を参照してください。

|

||||

|

||||

配置後に空白のページが表示される場合は、[#97](https://github.com/songquanpeng/one-api/issues/97) を参照してください。

|

||||

|

||||

### サードパーティプラットフォームへのデプロイ

|

||||

<details>

|

||||

<summary><strong>Sealos へのデプロイ</strong></summary>

|

||||

<div>

|

||||

|

||||

> Sealos は、高い同時実行性、ダイナミックなスケーリング、数百万人のユーザーに対する安定した運用をサポートしています。

|

||||

|

||||

> 下のボタンをクリックすると、ワンクリックで展開できます。👇

|

||||

|

||||

[](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

|

||||

|

||||

|

||||

</div>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary><strong>Zeabur へのデプロイ</strong></summary>

|

||||

<div>

|

||||

|

||||

> Zeabur のサーバーは海外にあるため、ネットワークの問題は自動的に解決されます。

|

||||

|

||||

[](https://zeabur.com/templates/7Q0KO3)

|

||||

|

||||

1. まず、コードをフォークする。

|

||||

2. [Zeabur](https://zeabur.com?referralCode=songquanpeng) にアクセスしてログインし、コンソールに入る。

|

||||

3. 新しいプロジェクトを作成します。Service -> Add ServiceでMarketplace を選択し、MySQL を選択する。接続パラメータ(ユーザー名、パスワード、アドレス、ポート)をメモします。

|

||||

4. 接続パラメータをコピーし、```create database `one-api` ``` を実行してデータベースを作成する。

|

||||

5. その後、Service -> Add Service で Git を選択し(最初の使用には認証が必要です)、フォークしたリポジトリを選択します。

|

||||

6. 自動デプロイが開始されますが、一旦キャンセルしてください。Variable タブで `PORT` に `3000` を追加し、`SQL_DSN` に `<username>:<password>@tcp(<addr>:<port>)/one-api` を追加します。変更を保存する。`SQL_DSN` が設定されていないと、データが永続化されず、再デプロイ後にデータが失われるので注意すること。

|

||||

7. 再デプロイを選択します。

|

||||

8. Domains タブで、"my-one-api" のような適切なドメイン名の接頭辞を選択する。最終的なドメイン名は "my-one-api.zeabur.app" となります。独自のドメイン名を CNAME することもできます。

|

||||

9. デプロイが完了するのを待ち、生成されたドメイン名をクリックして One API にアクセスします。

|

||||

|

||||

</div>

|

||||

</details>

|

||||

|

||||

## コンフィグ

|

||||

システムは箱から出してすぐに使えます。

|

||||

|

||||

環境変数やコマンドラインパラメータを設定することで、システムを構成することができます。

|

||||

|

||||

システム起動後、`root` ユーザーとしてログインし、さらにシステムを設定します。

|

||||

|

||||

## 使用方法

|

||||

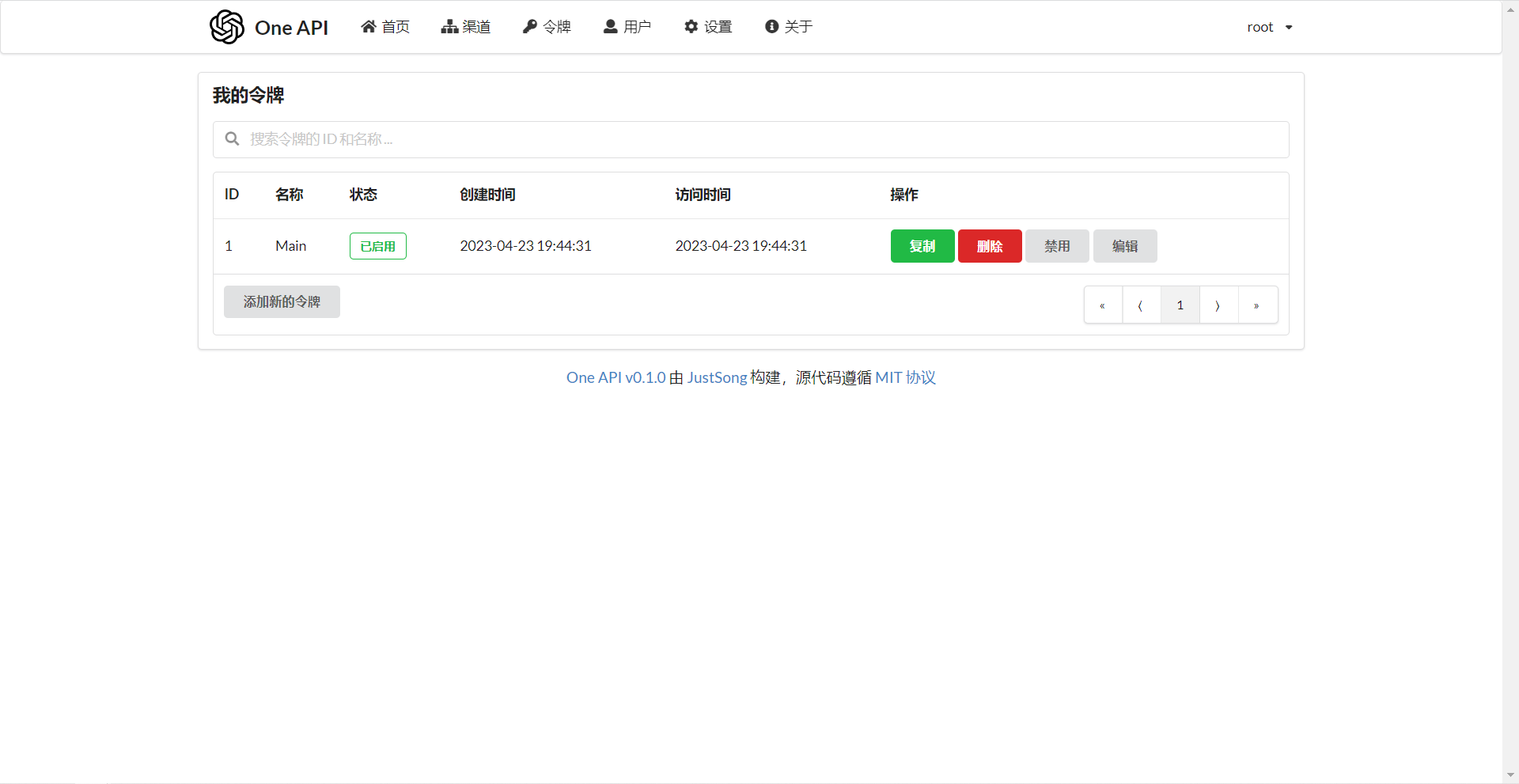

`Channels` ページで API Key を追加し、`Tokens` ページでアクセストークンを追加する。

|

||||

|

||||

アクセストークンを使って One API にアクセスすることができる。使い方は [OpenAI API](https://platform.openai.com/docs/api-reference/introduction) と同じです。

|

||||

|

||||

OpenAI API が使用されている場所では、API Base に One API のデプロイアドレスを設定することを忘れないでください(例: `https://openai.justsong.cn`)。API Key は One API で生成されたトークンでなければなりません。

|

||||

|

||||

具体的な API Base のフォーマットは、使用しているクライアントに依存することに注意してください。

|

||||

|

||||

```mermaid

|

||||

graph LR

|

||||

A(ユーザ)

|

||||

A --->|リクエスト| B(One API)

|

||||

B -->|中継リクエスト| C(OpenAI)

|

||||

B -->|中継リクエスト| D(Azure)

|

||||

B -->|中継リクエスト| E(その他のダウンストリームチャンネル)

|

||||

```

|

||||

|

||||

現在のリクエストにどのチャネルを使うかを指定するには、トークンの後に チャネル ID を追加します: 例えば、`Authorization: Bearer ONE_API_KEY-CHANNEL_ID` のようにします。

|

||||

チャンネル ID を指定するためには、トークンは管理者によって作成される必要があることに注意してください。

|

||||

|

||||

もしチャネル ID が指定されない場合、ロードバランシングによってリクエストが複数のチャネルに振り分けられます。
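
As a minimal sketch of the channel-pinning rule described above, the snippet below builds the `Authorization` header by optionally appending a channel ID to the token. The function name `build_auth_header` and the sample token and channel values are hypothetical; they only illustrate the `ONE_API_KEY-CHANNEL_ID` format from the README and are not part of One API itself.

```python
from typing import Dict, Optional


def build_auth_header(token: str, channel_id: Optional[int] = None) -> Dict[str, str]:
    """Return an Authorization header, optionally pinning one channel.

    Without a channel ID the plain token is sent, so the server is free
    to pick a channel via load balancing.
    """
    if channel_id is None:
        return {"Authorization": f"Bearer {token}"}
    # Format taken from the README: "Bearer ONE_API_KEY-CHANNEL_ID"
    return {"Authorization": f"Bearer {token}-{channel_id}"}


if __name__ == "__main__":
    # Hypothetical token and channel ID, for illustration only.
    print(build_auth_header("sk-example"))
    print(build_auth_header("sk-example", 3))
```

Per the README, a channel ID is only honored when the token was created by an administrator, so a client would typically fall back to the plain form otherwise.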

|

||||

|

||||

### 環境変数

|

||||

1. `REDIS_CONN_STRING`: 設定すると、リクエストレート制限のためのストレージとして、メモリの代わりに Redis が使われる。

|

||||

+ 例: `REDIS_CONN_STRING=redis://default:redispw@localhost:49153`

|

||||

2. `SESSION_SECRET`: 設定すると、固定セッションキーが使用され、システムの再起動後もログインユーザーのクッキーが有効であることが保証されます。

|

||||

+ 例: `SESSION_SECRET=random_string`

|

||||

3. `SQL_DSN`: 設定すると、SQLite の代わりに指定したデータベースが使用されます。MySQL バージョン 8.0 を使用してください。

|

||||

+ 例: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

|

||||

4. `FRONTEND_BASE_URL`: 設定されると、バックエンドアドレスではなく、指定されたフロントエンドアドレスが使われる。

|

||||

+ 例: `FRONTEND_BASE_URL=https://openai.justsong.cn`

|

||||

5. `SYNC_FREQUENCY`: 設定された場合、システムは定期的にデータベースからコンフィグを秒単位で同期する。設定されていない場合、同期は行われません。

|

||||

+ 例: `SYNC_FREQUENCY=60`

|

||||

6. `NODE_TYPE`: 設定すると、ノードのタイプを指定する。有効な値は `master` と `slave` である。設定されていない場合、デフォルトは `master`。

|

||||

+ 例: `NODE_TYPE=slave`

|

||||

7. `CHANNEL_UPDATE_FREQUENCY`: 設定すると、チャンネル残高を分単位で定期的に更新する。設定されていない場合、更新は行われません。

|

||||

+ 例: `CHANNEL_UPDATE_FREQUENCY=1440`

|

||||

8. `CHANNEL_TEST_FREQUENCY`: 設定すると、チャンネルを定期的にテストする。設定されていない場合、テストは行われません。

|

||||

+ 例: `CHANNEL_TEST_FREQUENCY=1440`

|

||||

9. `POLLING_INTERVAL`: チャネル残高の更新とチャネルの可用性をテストするときのリクエスト間の時間間隔 (秒)。デフォルトは間隔なし。

|

||||

+ 例: `POLLING_INTERVAL=5`

|

||||

|

||||

### コマンドラインパラメータ

|

||||

1. `--port <port_number>`: サーバがリッスンするポート番号を指定。デフォルトは `3000` です。

|

||||

+ 例: `--port 3000`

|

||||

2. `--log-dir <log_dir>`: ログディレクトリを指定。設定しない場合、ログは保存されません。

|

||||

+ 例: `--log-dir ./logs`

|

||||

3. `--version`: システムのバージョン番号を表示して終了する。

|

||||

4. `--help`: コマンドの使用法ヘルプとパラメータの説明を表示。

|

||||

|

||||

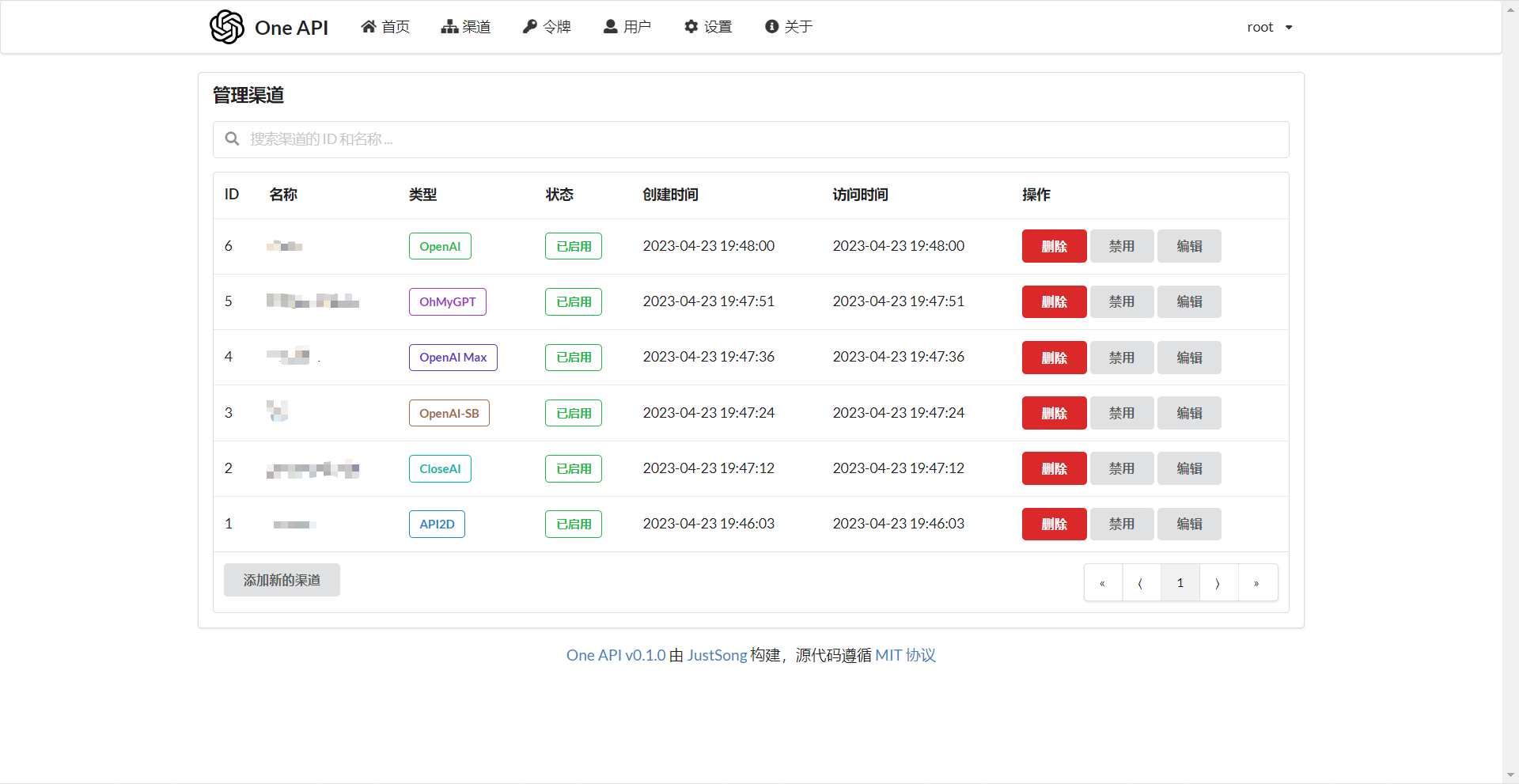

## スクリーンショット

|

||||

|

||||

|

||||

|

||||

## FAQ

|

||||

1. ノルマとは何か?どのように計算されますか?One API にはノルマ計算の問題はありますか?

|

||||

+ ノルマ = グループ倍率 * モデル倍率 * (プロンプトトークンの数 + 完了トークンの数 * 完了倍率)

|

||||

+ 完了倍率は、公式の定義と一致するように、GPT3.5 では 1.33、GPT4 では 2 に固定されています。

|

||||

+ ストリームモードでない場合、公式 API は消費したトークンの総数を返す。ただし、プロンプトとコンプリートの消費倍率は異なるので注意してください。

|

||||

2. アカウント残高は十分なのに、"insufficient quota" と表示されるのはなぜですか?

|

||||

+ トークンのクォータが十分かどうかご確認ください。トークンクォータはアカウント残高とは別のものです。

|

||||

+ トークンクォータは最大使用量を設定するためのもので、ユーザーが自由に設定できます。

|

||||

3. チャンネルを使おうとすると "No available channels" と表示されます。どうすればいいですか?

|

||||

+ ユーザーとチャンネルグループの設定を確認してください。

|

||||

+ チャンネルモデルの設定も確認してください。

|

||||

4. チャンネルテストがエラーを報告する: "invalid character '<' looking for beginning of value"

|

||||

+ このエラーは、返された値が有効な JSON ではなく、HTML ページである場合に発生する。

|

||||

+ ほとんどの場合、デプロイサイトのIPかプロキシのノードが CloudFlare によってブロックされています。

|

||||

5. ChatGPT Next Web でエラーが発生しました: "Failed to fetch"

|

||||

+ デプロイ時に `BASE_URL` を設定しないでください。

|

||||

+ インターフェイスアドレスと API Key が正しいか再確認してください。
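
The quota formula from FAQ item 1 can be sketched as a small function. This is an illustration under stated assumptions, not the project's actual implementation: the function name `compute_quota` and the group and model ratios used in the example are hypothetical, while the completion ratio of 2 for GPT-4 comes from the FAQ text.

```python
def compute_quota(
    group_ratio: float,
    model_ratio: float,
    prompt_tokens: int,
    completion_tokens: int,
    completion_ratio: float,
) -> float:
    """quota = group ratio * model ratio * (prompt tokens + completion tokens * completion ratio)."""
    return group_ratio * model_ratio * (
        prompt_tokens + completion_tokens * completion_ratio
    )


if __name__ == "__main__":
    # Hypothetical ratios: group ratio 1, model ratio 15.
    # Completion ratio 2 is the value the FAQ gives for GPT-4.
    print(compute_quota(1.0, 15.0, prompt_tokens=100, completion_tokens=50, completion_ratio=2.0))
```

With these inputs, the 50 completion tokens count as 100 weighted tokens, so the request is billed for 200 weighted tokens times the combined ratio of 15.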

|

||||

|

||||

## 関連プロジェクト

|

||||

[FastGPT](https://github.com/labring/FastGPT): LLM に基づく知識質問応答システム

|

||||

|

||||

## 注

|

||||

本プロジェクトはオープンソースプロジェクトです。OpenAI の[利用規約](https://openai.com/policies/terms-of-use)および**適用される法令**を遵守してご利用ください。違法な目的での利用はご遠慮ください。

|

||||

|

||||

このプロジェクトは MIT ライセンスで公開されています。これに基づき、ページの最下部に帰属表示と本プロジェクトへのリンクを含める必要があります。

|

||||

|

||||

このプロジェクトを基にした派生プロジェクトについても同様です。

|

||||

|

||||

帰属表示を含めたくない場合は、事前に許可を得なければなりません。

|

||||

|

||||

MIT ライセンスによると、このプロジェクトを利用するリスクと責任は利用者が負うべきであり、このオープンソースプロジェクトの開発者は責任を負いません。

|

||||

178

README.md

@@ -1,10 +1,10 @@

|

||||

<p align="right">

|

||||

<strong>中文</strong> | <a href="./README.en.md">English</a>

|

||||

<strong>中文</strong> | <a href="./README.en.md">English</a> | <a href="./README.ja.md">日本語</a>

|

||||

</p>

|

||||

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/default/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||

</p>

|

||||

|

||||

<div align="center">

|

||||

@@ -51,27 +51,29 @@ _✨ 通过标准的 OpenAI API 格式访问所有的大模型,开箱即用

|

||||

<a href="https://iamazing.cn/page/reward">赞赏支持</a>

|

||||

</p>

|

||||

|

||||

> **Note**:本项目为开源项目,使用者必须在遵循 OpenAI 的[使用条款](https://openai.com/policies/terms-of-use)以及**法律法规**的情况下使用,不得用于非法用途。

|

||||

> [!NOTE]

|

||||

> 本项目为开源项目,使用者必须在遵循 OpenAI 的[使用条款](https://openai.com/policies/terms-of-use)以及**法律法规**的情况下使用,不得用于非法用途。

|

||||

>

|

||||

> 根据[《生成式人工智能服务管理暂行办法》](http://www.cac.gov.cn/2023-07/13/c_1690898327029107.htm)的要求,请勿对中国地区公众提供一切未经备案的生成式人工智能服务。

|

||||

|

||||

> **Note**:使用 Docker 拉取的最新镜像可能是 `alpha` 版本,如果追求稳定性请手动指定版本。

|

||||

> [!WARNING]

|

||||

> 使用 Docker 拉取的最新镜像可能是 `alpha` 版本,如果追求稳定性请手动指定版本。

|

||||

|

||||

> **Warning**:从 `v0.3` 版本升级到 `v0.4` 版本需要手动迁移数据库,请手动执行[数据库迁移脚本](./bin/migration_v0.3-v0.4.sql)。

|

||||

> [!WARNING]

|

||||

> 使用 root 用户初次登录系统后,务必修改默认密码 `123456`!

|

||||

|

||||

## 功能

|

||||

1. 支持多种大模型:

|

||||

+ [x] [OpenAI ChatGPT 系列模型](https://platform.openai.com/docs/guides/gpt/chat-completions-api)(支持 [Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference))

|

||||

+ [x] [Anthropic Claude 系列模型](https://anthropic.com)

|

||||

+ [x] [Google PaLM2 系列模型](https://developers.generativeai.google)

|

||||

+ [x] [Google PaLM2/Gemini 系列模型](https://developers.generativeai.google)

|

||||

+ [x] [百度文心一言系列模型](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

||||

+ [x] [阿里通义千问系列模型](https://help.aliyun.com/document_detail/2400395.html)

|

||||

+ [x] [讯飞星火认知大模型](https://www.xfyun.cn/doc/spark/Web.html)

|

||||

+ [x] [智谱 ChatGLM 系列模型](https://bigmodel.cn)

|

||||

2. 支持配置镜像以及众多第三方代理服务:

|

||||

+ [x] [API Distribute](https://api.gptjk.top/register?aff=QGxj)

|

||||

+ [x] [OpenAI-SB](https://openai-sb.com)

|

||||

+ [x] [API2D](https://api2d.com/r/197971)

|

||||

+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)

|

||||

+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (邀请码:`OneAPI`)

|

||||

+ [x] [CloseAI](https://console.closeai-asia.com/r/2412)

|

||||

+ [x] 自定义渠道:例如各种未收录的第三方代理服务

|

||||

+ [x] [360 智脑](https://ai.360.cn)

|

||||

+ [x] [腾讯混元大模型](https://cloud.tencent.com/document/product/1729)

|

||||

2. 支持配置镜像以及众多[第三方代理服务](https://iamazing.cn/page/openai-api-third-party-services)。

|

||||

3. 支持通过**负载均衡**的方式访问多个渠道。

|

||||

4. 支持 **stream 模式**,可以通过流式传输实现打字机效果。

|

||||

5. 支持**多机部署**,[详见此处](#多机部署)。

|

||||

@@ -84,33 +86,43 @@ _✨ 通过标准的 OpenAI API 格式访问所有的大模型,开箱即用

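12. Supports **user invitation rewards**.
13. Supports displaying quota in US dollars.
14. Supports publishing announcements, setting a top-up link, and setting the initial quota for new users.
15. Supports model mapping to redirect the model a user requests.
15. Supports model mapping to redirect the model a user requests. Do not set it unless necessary: once set, the request body is rebuilt instead of being passed through unchanged, so some fields that are not yet officially supported will not be forwarded.
16. Supports automatic retry on failure.
17. Supports the image generation API.
18. Supports rich **customization** settings:
18. Supports [Cloudflare AI Gateway](https://developers.cloudflare.com/ai-gateway/providers/openai/): fill in `https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai` in the proxy field of the channel settings.
19. Supports rich **customization** settings:
    1. Supports customizing the system name, logo, and footer.
    2. Supports customizing the home page and the About page, either with HTML & Markdown code or by embedding a separate web page through an iframe.
19. Supports accessing the management API with a system access token.
20. Supports Cloudflare Turnstile user verification.
21. Supports user management with **multiple login and registration methods**:
    + Email login and registration, plus password reset by email.
20. Supports accessing the management API with a system access token (a bearer token used in place of the cookie; you can capture the traffic yourself to see how the API is used).
21. Supports Cloudflare Turnstile user verification.
22. Supports user management with **multiple login and registration methods**:
    + Email login and registration (with a registration email whitelist), plus password reset by email.
    + [GitHub OAuth](https://github.com/settings/applications/new).
    + WeChat Official Account authorization (requires additionally deploying [WeChat Server](https://github.com/songquanpeng/wechat-server)).
23. Supports theme switching: set the environment variable `THEME`, which defaults to `default`. PRs for more themes are welcome; see [here](./web/README.md).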
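
The model mapping feature in item 15 can be pictured with a small Go sketch. It assumes the mapping is stored as a JSON object from requested model name to replacement name; the function name `applyModelMapping` and that storage format are illustrative assumptions, not taken from this diff.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// applyModelMapping returns the model name to send upstream.
// mappingJSON is a hypothetical channel setting: a JSON object whose keys are
// requested model names and whose values are the names to use instead.
// The boolean reports whether the name was rewritten, which is the case in
// which a relay would have to rebuild the request body.
func applyModelMapping(requested, mappingJSON string) (string, bool, error) {
	if mappingJSON == "" || mappingJSON == "{}" {
		return requested, false, nil
	}
	mapping := map[string]string{}
	if err := json.Unmarshal([]byte(mappingJSON), &mapping); err != nil {
		return "", false, err
	}
	if target, ok := mapping[requested]; ok && target != "" {
		return target, true, nil
	}
	return requested, false, nil
}

func main() {
	model, rewritten, err := applyModelMapping(
		"gpt-3.5-turbo",
		`{"gpt-3.5-turbo": "gpt-3.5-turbo-0613"}`,
	)
	if err != nil {
		panic(err)
	}
	fmt.Println(model, rewritten)
}
```

Returning the "rewritten" flag separately makes the trade-off from item 15 visible: only a rewritten request needs its body reconstructed, so an empty mapping keeps plain pass-through.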


## Deployment
### Docker-based deployment
Deployment command: `docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api`
```shell
# Deployment command using SQLite:
docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api
# Deployment command using MySQL: add `-e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi"` to the command above. Adjust the database connection parameters yourself; if you are unsure how, see the environment variables section below.
# For example:
docker run --name one-api -d --restart always -p 3000:3000 -e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi" -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api
```

Here the first `3000` in `-p 3000:3000` is the host port and can be changed as needed.

Data and logs are saved in the host directory `/home/ubuntu/data/one-api`. Make sure the directory exists and is writable, or change it to a suitable directory.

If startup fails, add `--privileged=true`; see https://github.com/songquanpeng/one-api/issues/482 .

If the image above cannot be pulled, try the GitHub Docker image by replacing `justsong/one-api` above with `ghcr.io/songquanpeng/one-api`.

If your concurrency is high, setting `SQL_DSN` is recommended; see the [environment variables](#环境变量) section below.
If your concurrency is high, you **must** set `SQL_DSN`; see the [environment variables](#环境变量) section below.

Update command: `docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower -cR`

The first `3000` in `-p 3000:3000` is the host port and can be changed as needed.

Data is saved in the host directory `/home/ubuntu/data/one-api`. Make sure the directory exists and is writable, or change it to a suitable directory.

Reference Nginx configuration:

```

|

||||

server{

|

||||

@@ -143,6 +155,19 @@ sudo service nginx restart


The initial account username is `root` and the password is `123456`.


### Docker Compose-based deployment

> Only the startup method differs; the parameters are unchanged. See the Docker-based deployment section.

```shell
# Currently supports starting with MySQL; data is stored in the ./data/mysql folder
docker-compose up -d

# Check the deployment status
docker-compose ps
```

### Manual deployment
1. Download the executable from [GitHub Releases](https://github.com/songquanpeng/one-api/releases/latest) or build from source:

```shell

|

||||

@@ -152,7 +177,7 @@ sudo service nginx restart

cd one-api/web
npm install
npm run build

# Build the backend
cd ..
go mod download

@@ -205,14 +230,23 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope


Remember to change the port number, `OPENAI_API_BASE_URL`, and `OPENAI_API_KEY`.

#### QChatGPT - QQ bot
Project home page: https://github.com/RockChinQ/QChatGPT

After deploying according to its documentation, edit `config.py`: set `reverse_proxy` in the `openai_config` option to the One API backend address, set `api_key` to a key generated by One API, and set the `model` parameter in the `completion_api_params` option to a model name supported by One API.

You can install the [Switcher plugin](https://github.com/RockChinQ/Switcher) to switch the model in use at runtime.

### Deploying to third-party platforms
<details>
<summary><strong>Deploy to Sealos </strong></summary>
<div>

> Sealos offers visual deployment that takes only 1 minute.
> Sealos servers are located overseas, so no extra network handling is needed; it supports high concurrency & dynamic scaling.

See steps 1~5 of this [tutorial](https://github.com/c121914yu/FastGPT/blob/main/docs/deploy/one-api/sealos.md).
Click the button below for one-click deployment (if you get a 404 after deploying, wait 3~5 minutes):

[](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

</div>
</details>

@@ -221,10 +255,12 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope

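<summary><strong>Deploy to Zeabur</strong></summary>
<div>

> Zeabur's servers are located overseas, which takes care of network issues automatically, and the free quota is enough for personal use.
> Zeabur's servers are located overseas, which takes care of network issues automatically, and the free quota is enough for personal use

[](https://zeabur.com/templates/7Q0KO3)

1. First, fork the repository.
2. Go to [Zeabur](https://zeabur.com/), log in, and open the console.
2. Go to [Zeabur](https://zeabur.com?referralCode=songquanpeng), log in, and open the console.
3. Create a new Project. Under Service -> Add Service choose Marketplace, select MySQL, and note the connection parameters (username, password, address, port).
4. Copy the connection parameters and run ```create database `one-api` ``` to create the database.
5. Then under Service -> Add Service choose Git (authorization is required on first use) and select the repository you forked.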

@@ -236,6 +272,17 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope

</div>
</details>

<details>
<summary><strong>Deploy to Render</strong></summary>
<div>

> Render offers a free quota, which can be raised further after binding a card

Render can deploy the Docker image directly, with no need to fork the repository: https://dashboard.render.com

</div>
</details>

## Configuration
The system works out of the box.


@@ -254,13 +301,20 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope


Note that the exact format of the API Base depends on the client you use.

For example, for OpenAI's official library:
```bash
OPENAI_API_KEY="sk-xxxxxx"
OPENAI_API_BASE="https://<HOST>:<PORT>/v1"
```
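
For clients that do not read these variables, the same two values can be applied by hand. The following is a minimal Go sketch, assuming the deployment exposes the OpenAI-style `/chat/completions` path under the API Base; the helper name `newChatRequest` and the request struct are illustrative, and the request is only built, not sent.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"strings"
)

// chatMessage and chatRequest model only the fields this sketch needs.
type chatMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type chatRequest struct {
	Model    string        `json:"model"`
	Messages []chatMessage `json:"messages"`
}

// newChatRequest builds a chat completion request against apiBase
// (for example "https://<HOST>:<PORT>/v1") using a key issued by One API.
func newChatRequest(apiBase, apiKey, model, prompt string) (*http.Request, error) {
	payload, err := json.Marshal(chatRequest{
		Model:    model,
		Messages: []chatMessage{{Role: "user", Content: prompt}},
	})
	if err != nil {
		return nil, err
	}
	// Tolerate a trailing slash on the configured base.
	url := strings.TrimRight(apiBase, "/") + "/chat/completions"
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "Bearer "+apiKey)
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	req, err := newChatRequest("https://example.com:3000/v1", "sk-xxxxxx", "gpt-3.5-turbo", "hello")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())
}
```

Pass the returned request to `http.DefaultClient.Do` (or a client with a timeout) to actually send it.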


```mermaid
graph LR
    A(User)
    A --->|Request| B(One API)
    A --->|Request using a key issued by One API| B(One API)
    B -->|Relay request| C(OpenAI)
    B -->|Relay request| D(Azure)
    B -->|Relay request| E(Other downstream channels)
    B -->|Relay request| E(Other downstream channels in OpenAI API format)
    B -->|Relay request, rewriting request and response bodies| F(Downstream channels not in OpenAI API format)
```

You can choose which channel handles a request by appending the channel ID to the token, for example: `Authorization: Bearer ONE_API_KEY-CHANNEL_ID`.

@@ -269,32 +323,57 @@ graph LR

Without a channel ID, requests are load-balanced across multiple channels.
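
To make the `ONE_API_KEY-CHANNEL_ID` convention concrete, here is a minimal Go sketch of how a server might split such a header value. It assumes keys carry an `sk-` prefix and contain no further dashes; `splitKeyAndChannel` is a hypothetical helper, and One API's own parsing may differ.

```go
package main

import (
	"fmt"
	"strings"
)

// splitKeyAndChannel separates an Authorization header value of the form
// "Bearer sk-KEY-CHANNEL_ID" into the key and the optional channel ID.
// Assumption: the key itself contains no "-" after the "sk-" prefix.
func splitKeyAndChannel(header string) (key string, channelID string) {
	token := strings.TrimPrefix(strings.TrimSpace(header), "Bearer ")
	token = strings.TrimPrefix(token, "sk-")
	// Everything after the first remaining dash is treated as the channel ID.
	key, channelID, _ = strings.Cut(token, "-")
	return key, channelID
}

func main() {
	key, channel := splitKeyAndChannel("Bearer sk-abc123-7")
	fmt.Printf("key=%s channel=%s\n", key, channel)

	key, channel = splitKeyAndChannel("Bearer sk-abc123")
	fmt.Printf("key=%s channel=%q\n", key, channel)
}
```

An empty channel ID is the signal to fall back to load balancing across channels.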

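
### Environment variables
1. `REDIS_CONN_STRING`: when set, Redis is used as the storage for request rate limiting instead of in-memory storage.
1. `REDIS_CONN_STRING`: when set, Redis is used as a cache.
   + Example: `REDIS_CONN_STRING=redis://default:redispw@localhost:49153`
   + If database access latency is already low, there is no need to enable Redis; enabling it introduces stale data instead.
2. `SESSION_SECRET`: when set, a fixed session secret is used, so the cookies of logged-in users remain valid after the system restarts.
   + Example: `SESSION_SECRET=random_string`
3. `SQL_DSN`: when set, the specified database is used instead of SQLite; use MySQL 8.0.
   + Example: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`
3. `SQL_DSN`: when set, the specified database is used instead of SQLite; use MySQL or PostgreSQL.
   + Examples:
     + MySQL: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`
     + PostgreSQL: `SQL_DSN=postgres://postgres:123456@localhost:5432/oneapi` (support in progress, feedback welcome)
   + Note that the database `oneapi` must be created in advance. Tables do not need to be created by hand; the program creates them automatically.
   + If you use a local database: add `--network="host"` to the deployment command so that the program inside the container can reach MySQL on the host.
   + If you use a cloud database: if the cloud server requires identity verification, add `?tls=skip-verify` to the connection parameters.
   + Adjust the following parameters to match your database configuration (or keep the defaults):
     + `SQL_MAX_IDLE_CONNS`: maximum number of idle connections, default `100`.
     + `SQL_MAX_OPEN_CONNS`: maximum number of open connections, default `1000`.
       + If you see the error `Error 1040: Too many connections`, lower this value as appropriate.
     + `SQL_CONN_MAX_LIFETIME`: maximum lifetime of a connection, default `60`, in minutes.
4. `FRONTEND_BASE_URL`: when set, page requests are redirected to the specified address; set it on slave servers only.
   + Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`
5. `SYNC_FREQUENCY`: when set, configuration is synchronized with the database periodically, in seconds; if unset, no synchronization is performed.
5. `MEMORY_CACHE_ENABLED`: enables the in-memory cache, which delays updates to user quota somewhat; valid values are `true` and `false`, and the default when unset is `false`.
   + Example: `MEMORY_CACHE_ENABLED=true`
6. `SYNC_FREQUENCY`: how often configuration is synchronized with the database when caching is enabled, in seconds, default `600`.
   + Example: `SYNC_FREQUENCY=60`
6. `NODE_TYPE`: sets the node type; valid values are `master` and `slave`, and the default when unset is `master`.
7. `NODE_TYPE`: sets the node type; valid values are `master` and `slave`, and the default when unset is `master`.
   + Example: `NODE_TYPE=slave`
7. `CHANNEL_UPDATE_FREQUENCY`: when set, channel balances are updated periodically, in minutes; if unset, no updates are performed.
8. `CHANNEL_UPDATE_FREQUENCY`: when set, channel balances are updated periodically, in minutes; if unset, no updates are performed.
   + Example: `CHANNEL_UPDATE_FREQUENCY=1440`
8. `CHANNEL_TEST_FREQUENCY`: when set, channels are checked periodically, in minutes; if unset, no checks are performed.
9. `CHANNEL_TEST_FREQUENCY`: when set, channels are checked periodically, in minutes; if unset, no checks are performed.
   + Example: `CHANNEL_TEST_FREQUENCY=1440`
9. `POLLING_INTERVAL`: interval between requests when batch-updating channel balances and testing availability, in seconds; no interval by default.
   + Example: `POLLING_INTERVAL=5`
10. `POLLING_INTERVAL`: interval between requests when batch-updating channel balances and testing availability, in seconds; no interval by default.
    + Example: `POLLING_INTERVAL=5`
11. `BATCH_UPDATE_ENABLED`: enables aggregated batch database updates, which delays updates to user quota somewhat; valid values are `true` and `false`, and the default when unset is `false`.
    + Example: `BATCH_UPDATE_ENABLED=true`
    + If you run into too many database connections, try enabling this option.
12. `BATCH_UPDATE_INTERVAL=5`: interval between aggregated batch updates, in seconds, default `5`.
    + Example: `BATCH_UPDATE_INTERVAL=5`
13. Request rate limits:
    + `GLOBAL_API_RATE_LIMIT`: global API rate limit (excluding relay requests), the maximum number of requests per IP within three minutes, default `180`.
    + `GLOBAL_WEB_RATE_LIMIT`: global Web rate limit, the maximum number of requests per IP within three minutes, default `60`.
14. Encoder cache settings:
    + `TIKTOKEN_CACHE_DIR`: by default the program downloads the token encodings for some common models, such as `gpt-3.5-turbo`, when it starts. On an unstable network or offline, this can cause startup problems. Set this directory to cache the data, which can then be moved to an offline environment.
    + `DATA_GYM_CACHE_DIR`: currently has the same effect as `TIKTOKEN_CACHE_DIR`, but with lower priority.
15. `RELAY_TIMEOUT`: relay timeout, in seconds; no timeout is set by default.
16. `SQLITE_BUSY_TIMEOUT`: SQLite lock wait timeout, in milliseconds, default `3000`.
17. `GEMINI_SAFETY_SETTING`: Gemini safety setting, default `BLOCK_NONE`.
18. `THEME`: system theme, default `default`; see [here](./web/README.md) for the valid values.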


### Command-line arguments
1. `--port <port_number>`: the port the server listens on, default `3000`.
   + Example: `--port 3000`
2. `--log-dir <log_dir>`: the log directory; if not set, logs are not saved.
2. `--log-dir <log_dir>`: the log directory; if not set, logs are saved to the `logs` folder under the working directory by default.
   + Example: `--log-dir ./logs`
3. `--version`: print the system version and exit.
4. `--help`: show usage help and argument descriptions for the command.

@@ -313,6 +392,7 @@ https://openai.justsong.cn

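   + Quota = group ratio * model ratio * (prompt tokens + completion tokens * completion ratio)
   + The completion ratio is fixed at 1.33 for GPT3.5 and 2 for GPT4, consistent with official pricing.
   + In non-stream mode the official API returns the total tokens consumed, but note that prompt and completion tokens are charged at different ratios.
   + Note that One API's default ratios are the official ratios and have already been adjusted.
2. Why am I told the quota is insufficient when my account quota is sufficient?
   + Check whether your token quota is sufficient; it is separate from the account quota.
   + The token quota only lets users cap their maximum usage, and users can set it freely.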

@@ -325,11 +405,19 @@ https://openai.justsong.cn

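5. ChatGPT Next Web reports the error: `Failed to fetch`
   + Do not set `BASE_URL` when deploying.
   + Check that your API address and API Key are filled in correctly.
   + Check whether HTTPS is enabled; browsers block HTTP requests made from an HTTPS domain.
6. Error: `当前分组负载已饱和,请稍后再试` (the current group is saturated, please try again later)
   + The upstream channel returned 429.
7. Will my data be lost after upgrading?
   + If you use MySQL, no.
   + If you use SQLite, you need to mount a volume as in the deployment command I provide to persist the one-api.db database file; otherwise the data is lost when the container restarts.
8. Does the database need changes before upgrading?
   + Usually not; the system adjusts it automatically during initialization.
   + If changes are needed, I will say so in the changelog and provide a script.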
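
The quota formula quoted in the FAQ above can be written out as a short Go sketch. The function name `quotaFor` and the choice to return an unrounded `float64` are assumptions made for illustration; how One API rounds the result is not shown in this diff.

```go
package main

import "fmt"

// quotaFor applies the formula from the FAQ:
//   quota = groupRatio * modelRatio * (promptTokens + completionTokens * completionRatio)
// The result is left unrounded because the rounding rule is not stated in the source.
func quotaFor(groupRatio, modelRatio, completionRatio float64, promptTokens, completionTokens int) float64 {
	weightedTokens := float64(promptTokens) + float64(completionTokens)*completionRatio
	return groupRatio * modelRatio * weightedTokens
}

func main() {
	// 100 prompt tokens and 50 completion tokens with the GPT4 completion
	// ratio of 2 quoted above; group and model ratios of 1 are placeholders.
	fmt.Println(quotaFor(1, 1, 2, 100, 50))
}
```

Because completion tokens are weighted before the multiplication, the total token count returned by a non-stream response cannot be plugged into the formula directly; the prompt and completion counts are needed separately.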


## Related projects
[FastGPT](https://github.com/c121914yu/FastGPT): build an AI knowledge base in three minutes
* [FastGPT](https://github.com/labring/FastGPT): a knowledge-base question answering system built on LLMs
* [ChatGPT Next Web](https://github.com/Yidadaa/ChatGPT-Next-Web): get your own cross-platform ChatGPT app with one click

## Notes


@@ -337,4 +425,4 @@ https://openai.justsong.cn

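
This also applies to derivative projects based on this project.

Under the MIT license, users bear the risks and responsibilities of using this project themselves; the developers of this open-source project are not liable for them.
Under the MIT license, users bear the risks and responsibilities of using this project themselves; the developers of this open-source project are not liable for them.
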

@@ -21,12 +21,9 @@ var QuotaPerUnit = 500 * 1000.0 // $0.002 / 1K tokens

var DisplayInCurrencyEnabled = true
var DisplayTokenStatEnabled = true

var UsingSQLite = false

// Any options with "Secret", "Token" in its key won't be return by GetOptions

var SessionSecret = uuid.New().String()
var SQLitePath = "one-api.db"

var OptionMap map[string]string
var OptionMapRWMutex sync.RWMutex

@@ -42,6 +39,22 @@ var WeChatAuthEnabled = false

var TurnstileCheckEnabled = false
var RegisterEnabled = true

var EmailDomainRestrictionEnabled = false
var EmailDomainWhitelist = []string{
	"gmail.com",
	"163.com",
	"126.com",
	"qq.com",
	"outlook.com",
	"hotmail.com",
	"icloud.com",
	"yahoo.com",
	"foxmail.com",
}

var DebugEnabled = os.Getenv("DEBUG") == "true"
var MemoryCacheEnabled = os.Getenv("MEMORY_CACHE_ENABLED") == "true"

var LogConsumeEnabled = true

var SMTPServer = ""

@@ -65,6 +78,7 @@ var QuotaForInviter = 0

var QuotaForInvitee = 0
var ChannelDisableThreshold = 5.0
var AutomaticDisableChannelEnabled = false
var AutomaticEnableChannelEnabled = false
var QuotaRemindThreshold = 1000
var PreConsumedQuota = 500
var ApproximateTokenEnabled = false

@@ -77,7 +91,24 @@ var IsMasterNode = os.Getenv("NODE_TYPE") != "slave"

var requestInterval, _ = strconv.Atoi(os.Getenv("POLLING_INTERVAL"))
var RequestInterval = time.Duration(requestInterval) * time.Second

var SyncFrequency = 10 * 60 // unit is second, will be overwritten by SYNC_FREQUENCY
var SyncFrequency = GetOrDefault("SYNC_FREQUENCY", 10*60) // unit is second

var BatchUpdateEnabled = false
var BatchUpdateInterval = GetOrDefault("BATCH_UPDATE_INTERVAL", 5)

var RelayTimeout = GetOrDefault("RELAY_TIMEOUT", 0) // unit is second

var GeminiSafetySetting = GetOrDefaultString("GEMINI_SAFETY_SETTING", "BLOCK_NONE")

var Theme = GetOrDefaultString("THEME", "default")
var ValidThemes = map[string]bool{
	"default": true,
	"berry": true,
}

const (
	RequestIdKey = "X-Oneapi-Request-Id"
)

const (
	RoleGuestUser = 0

@@ -96,10 +127,10 @@ var (

// All duration's unit is seconds
// Shouldn't larger then RateLimitKeyExpirationDuration
var (
	GlobalApiRateLimitNum = 180
	GlobalApiRateLimitNum = GetOrDefault("GLOBAL_API_RATE_LIMIT", 180)
	GlobalApiRateLimitDuration int64 = 3 * 60

	GlobalWebRateLimitNum = 60
	GlobalWebRateLimitNum = GetOrDefault("GLOBAL_WEB_RATE_LIMIT", 60)
	GlobalWebRateLimitDuration int64 = 3 * 60

	UploadRateLimitNum = 10

@@ -133,47 +164,64 @@ const (

)

const (
	ChannelStatusUnknown = 0
	ChannelStatusEnabled = 1 // don't use 0, 0 is the default value!
	ChannelStatusDisabled = 2 // also don't use 0
	ChannelStatusUnknown = 0
	ChannelStatusEnabled = 1 // don't use 0, 0 is the default value!
	ChannelStatusManuallyDisabled = 2 // also don't use 0
	ChannelStatusAutoDisabled = 3
)

const (
	ChannelTypeUnknown = 0
	ChannelTypeOpenAI = 1
	ChannelTypeAPI2D = 2
	ChannelTypeAzure = 3
	ChannelTypeCloseAI = 4
	ChannelTypeOpenAISB = 5
	ChannelTypeOpenAIMax = 6
	ChannelTypeOhMyGPT = 7
	ChannelTypeCustom = 8
	ChannelTypeAILS = 9
	ChannelTypeAIProxy = 10
	ChannelTypePaLM = 11
	ChannelTypeAPI2GPT = 12
	ChannelTypeAIGC2D = 13
	ChannelTypeAnthropic = 14
	ChannelTypeBaidu = 15
	ChannelTypeZhipu = 16
	ChannelTypeUnknown = 0
	ChannelTypeOpenAI = 1
	ChannelTypeAPI2D = 2
	ChannelTypeAzure = 3
	ChannelTypeCloseAI = 4
	ChannelTypeOpenAISB = 5
	ChannelTypeOpenAIMax = 6
	ChannelTypeOhMyGPT = 7
	ChannelTypeCustom = 8
	ChannelTypeAILS = 9
	ChannelTypeAIProxy = 10
	ChannelTypePaLM = 11
	ChannelTypeAPI2GPT = 12
	ChannelTypeAIGC2D = 13
	ChannelTypeAnthropic = 14
	ChannelTypeBaidu = 15
	ChannelTypeZhipu = 16
	ChannelTypeAli = 17
	ChannelTypeXunfei = 18
	ChannelType360 = 19
	ChannelTypeOpenRouter = 20
	ChannelTypeAIProxyLibrary = 21
	ChannelTypeFastGPT = 22
	ChannelTypeTencent = 23
	ChannelTypeGemini = 24
)

var ChannelBaseURLs = []string{
	"", // 0
	"https://api.openai.com", // 1
	"https://oa.api2d.net", // 2
	"", // 3
	"https://api.closeai-proxy.xyz", // 4
	"https://api.openai-sb.com", // 5
	"https://api.openaimax.com", // 6
	"https://api.ohmygpt.com", // 7
	"", // 8
	"https://api.caipacity.com", // 9
	"https://api.aiproxy.io", // 10
	"", // 11
	"https://api.api2gpt.com", // 12
	"https://api.aigc2d.com", // 13
	"https://api.anthropic.com", // 14
	"https://aip.baidubce.com", // 15
	"https://open.bigmodel.cn", // 16
	"", // 0
	"https://api.openai.com", // 1
	"https://oa.api2d.net", // 2
	"", // 3
	"https://api.closeai-proxy.xyz", // 4
	"https://api.openai-sb.com", // 5
	"https://api.openaimax.com", // 6
	"https://api.ohmygpt.com", // 7
	"", // 8
	"https://api.caipacity.com", // 9
	"https://api.aiproxy.io", // 10
	"", // 11
	"https://api.api2gpt.com", // 12
	"https://api.aigc2d.com", // 13
	"https://api.anthropic.com", // 14
	"https://aip.baidubce.com", // 15
	"https://open.bigmodel.cn", // 16
	"https://dashscope.aliyuncs.com", // 17
	"", // 18
	"https://ai.360.cn", // 19
	"https://openrouter.ai/api", // 20
	"https://api.aiproxy.io", // 21
	"https://fastgpt.run/api/openapi", // 22
	"https://hunyuan.cloud.tencent.com", //23
	"", //24
}
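
The `ChannelBaseURLs` slice above is indexed by the `ChannelType*` constants, so the two lists have to stay in the same order. A minimal Go sketch of a lookup that relies on this is shown below; the shortened table, the lower-case names, and the "custom URL wins" rule are assumptions for illustration rather than code from this diff.

```go
package main

import "fmt"

// channelBaseURLs is a shortened stand-in for ChannelBaseURLs.
// The slice index is the channel type, so entries must stay in constant order.
var channelBaseURLs = []string{
	"",                       // 0: unknown
	"https://api.openai.com", // 1: OpenAI
	"https://oa.api2d.net",   // 2: API2D
	"",                       // 3: Azure (no fixed default)
}

// baseURLFor returns the base URL for a channel. A non-empty custom URL
// configured on the channel takes precedence over the table default.
func baseURLFor(channelType int, custom string) (string, error) {
	if custom != "" {
		return custom, nil
	}
	// Guard against a constant that was added without a matching table entry.
	if channelType < 0 || channelType >= len(channelBaseURLs) {
		return "", fmt.Errorf("unknown channel type %d", channelType)
	}
	return channelBaseURLs[channelType], nil
}

func main() {
	url, err := baseURLFor(1, "")
	if err != nil {
		panic(err)
	}
	fmt.Println(url)
}
```

The bounds check matters because a new channel type with no appended URL would otherwise index past the end of the slice and panic at request time.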


7

common/database.go

Normal file

@@ -0,0 +1,7 @@

package common

var UsingSQLite = false
var UsingPostgreSQL = false

var SQLitePath = "one-api.db"
var SQLiteBusyTimeout = GetOrDefault("SQLITE_BUSY_TIMEOUT", 3000)

@@ -1,11 +1,13 @@

package common

import (
	"crypto/rand"
	"crypto/tls"
	"encoding/base64"
	"fmt"
	"net/smtp"
	"strings"
	"time"
)

func SendEmail(subject string, receiver string, content string) error {

@@ -13,15 +15,32 @@ func SendEmail(subject string, receiver string, content string) error {

		SMTPFrom = SMTPAccount
	}
	encodedSubject := fmt.Sprintf("=?UTF-8?B?%s?=", base64.StdEncoding.EncodeToString([]byte(subject)))

	// Extract domain from SMTPFrom
	parts := strings.Split(SMTPFrom, "@")
	var domain string
	if len(parts) > 1 {
		domain = parts[1]
	}
	// Generate a unique Message-ID
	buf := make([]byte, 16)
	_, err := rand.Read(buf)
	if err != nil {
		return err
	}
	messageId := fmt.Sprintf("<%x@%s>", buf, domain)

	mail := []byte(fmt.Sprintf("To: %s\r\n"+
		"From: %s<%s>\r\n"+
		"Subject: %s\r\n"+
		"Message-ID: %s\r\n"+ // add Message-ID header to avoid being treated as spam, RFC 5322
		"Date: %s\r\n"+
		"Content-Type: text/html; charset=UTF-8\r\n\r\n%s\r\n",
		receiver, SystemName, SMTPFrom, encodedSubject, content))
		receiver, SystemName, SMTPFrom, encodedSubject, messageId, time.Now().Format(time.RFC1123Z), content))
	auth := smtp.PlainAuth("", SMTPAccount, SMTPToken, SMTPServer)
	addr := fmt.Sprintf("%s:%d", SMTPServer, SMTPPort)
	to := strings.Split(receiver, ";")
	var err error

	if SMTPPort == 465 {
		tlsConfig := &tls.Config{
			InsecureSkipVerify: true,


@@ -5,6 +5,7 @@ import (

	"encoding/json"
	"github.com/gin-gonic/gin"
	"io"
	"strings"
)

func UnmarshalBodyReusable(c *gin.Context, v any) error {

@@ -16,7 +17,13 @@ func UnmarshalBodyReusable(c *gin.Context, v any) error {

	if err != nil {
		return err
	}
	err = json.Unmarshal(requestBody, &v)
	contentType := c.Request.Header.Get("Content-Type")
	if strings.HasPrefix(contentType, "application/json") {
		err = json.Unmarshal(requestBody, &v)
	} else {
		// skip for now
		// TODO: someday non json request have variant model, we will need to implementation this
	}
	if err != nil {
		return err
	}


111

common/image/image.go

Normal file

@@ -0,0 +1,111 @@

package image

import (
	"bytes"
	"encoding/base64"
	"image"
	_ "image/gif"
	_ "image/jpeg"
	_ "image/png"
	"net/http"
	"regexp"
	"strings"
	"sync"

	_ "golang.org/x/image/webp"
)

// Regex to match data URL pattern
var dataURLPattern = regexp.MustCompile(`data:image/([^;]+);base64,(.*)`)

func IsImageUrl(url string) (bool, error) {
	resp, err := http.Head(url)
	if err != nil {
		return false, err
	}
	if !strings.HasPrefix(resp.Header.Get("Content-Type"), "image/") {
		return false, nil
	}
	return true, nil
}

func GetImageSizeFromUrl(url string) (width int, height int, err error) {
	isImage, err := IsImageUrl(url)
	if !isImage {
		return
	}
	resp, err := http.Get(url)
	if err != nil {
		return
	}
	defer resp.Body.Close()
	img, _, err := image.DecodeConfig(resp.Body)
	if err != nil {
		return
	}
	return img.Width, img.Height, nil
}

func GetImageFromUrl(url string) (mimeType string, data string, err error) {
	// Check if the URL is a data URL
	matches := dataURLPattern.FindStringSubmatch(url)
	if len(matches) == 3 {
		// URL is a data URL
		mimeType = "image/" + matches[1]
		data = matches[2]
		return
	}

	isImage, err := IsImageUrl(url)
	if !isImage {
		return
	}
	resp, err := http.Get(url)
	if err != nil {
		return
	}
	defer resp.Body.Close()
	buffer := bytes.NewBuffer(nil)
	_, err = buffer.ReadFrom(resp.Body)
	if err != nil {
		return
	}
	mimeType = resp.Header.Get("Content-Type")
	data = base64.StdEncoding.EncodeToString(buffer.Bytes())
	return
}

var (
	reg = regexp.MustCompile(`data:image/([^;]+);base64,`)
)

var readerPool = sync.Pool{
	New: func() interface{} {
		return &bytes.Reader{}
	},
}